Tag: optimization

What's new in the latest version of R

CHANGES IN R VERSION 2.12.2 (from http://cran.r-project.org/src/base/NEWS):

SIGNIFICANT USER-VISIBLE CHANGES:

• Complex arithmetic (notably z^n for complex z and integer n) gave incorrect results since R 2.10.0 on platforms without C99 complex support. This and some lesser issues in trigonometric functions have been corrected. Such platforms were rare (we know of Cygwin and FreeBSD). However, because of new compiler optimizations in the way complex arguments are handled, the same code was selected on x86_64 Linux with gcc 4.5.x at the default -O2 optimization (but not at -O).
• There is a workaround for crashes seen with several packages on systems using zlib 1.2.5: see the INSTALLATION section.

NEW FEATURES:

• PCRE has been updated to 8.12 (two bug-fix releases since 8.10).
• rep(), seq(), seq.int() and seq_len() report more often when the first element is taken of an argument of incorrect length.
• The Cocoa back-end for the quartz() graphics device on Mac OS X provides a way to disable event loop processing temporarily (useful, e.g., for forked instances of R).
• kernel()'s default for m was not appropriate if coef was a set of coefficients. (Reported by Pierre Chausse.)
• bug.report() has been updated for the current R bug tracker, which does not accept emailed submissions.
• R CMD check now checks for the correct use of $(LAPACK_LIBS) (as well as $(BLAS_LIBS)), since several recent CRAN submissions have ignored ‘Writing R Extensions’.

INSTALLATION:

• The zlib sources in the distribution are now built with all symbols remapped: this is intended to avoid problems seen with packages such as XML and rggobi which link to zlib.so.1 on systems using zlib 1.2.5.
• The default for FFLAGS and FCFLAGS with gfortran on x86_64 Linux has been changed back to -g -O2: however, setting -g -O may still be needed for gfortran 4.3.x.

PACKAGE INSTALLATION:

• A LazyDataCompression field in the DESCRIPTION file will be used to set the value for the --data-compress option of R CMD INSTALL.
• Files R/sysdata.rda of more than 1Mb are now stored in the lazyload database using xz compression: this for example halves the installed size of package Imap.
• R CMD INSTALL now ensures that directories installed from inst have search permission for everyone. It no longer installs files inst/doc/Rplots.ps and inst/doc/Rplots.pdf. These are almost certainly left-overs from Sweave runs, and are often large.

DEPRECATED & DEFUNCT:

• The ‘experimental’ alternative specification of a name space via .Export() etc is now deprecated.
• zip.file.extract() is now deprecated.
• Zipping data sets in packages (and hence R CMD INSTALL --use-zip-data and the ZipData: yes field in a DESCRIPTION file) is deprecated: using efficiently compressed .rda images and lazy-loading of data has superseded it.

BUG FIXES:

• identical() could in rare cases generate a warning about non-pairlist attributes on CHARSXPs. As these are used for internal purposes, the attribute check should be skipped. (Reported by Niels Richard Hansen.)
• If the filename extension (usually .Rnw) was not included in a call to Sweave(), source references would not work properly and the keep.source option failed. (PR#14459)
• format.data.frame() now keeps zero-character column names.
• pretty(x) no longer raises an error when x contains solely non-finite values. (PR#14468)
• The plot.TukeyHSD() function now uses a line width of 0.5 for its reference lines rather than lwd = 0 (which caused problems for some PDF and PostScript viewers).
• The big.mark argument to prettyNum(), format(), etc. was inserted reversed if it was more than one character long.
• R CMD check failed to check the filenames under man for Windows' reserved names.
• The "Date" and "POSIXt" methods for seq() could overshoot when to was supplied and by was specified in months or years.
• The internal method of untar() now restores hard links as file copies rather than symbolic links (which did not work for cross-directory links).
• unzip() did not handle zip files which contained filepaths with two or more leading directories which were not in the zipfile and did not already exist. (It is unclear if such zipfiles are valid and the third-party C code used did not support them, but PR#14462 created one.)
• combn(n, m) now behaves more regularly for the border case m = 0. (PR#14473)
• The rendering of numbers in plotmath expressions (e.g. expression(10^2)) used the current settings for conversion to strings rather than setting the defaults, and so could be affected by what has been done before. (PR#14477)
• The methods of napredict() and naresid() for na.action = na.exclude fits did not work correctly in the very rare event that every case had been omitted in the fit. (Reported by Simon Wood.)
• weighted.residuals(drop0=TRUE) returned a vector when the residuals were a matrix (e.g. those of class "mlm"). (Reported by Bill Dunlap.)
• Package HTML index files /html/00Index.html were generated with a stylesheet reference that was not correct for static browsing in libraries.
• ccf(na.action = na.pass) was not implemented.
• The parser accepted some incorrect numeric constants, e.g. 20x2. (Reported by Olaf Mersmann.)
• format(*, zero.print) did not always replace the full zero parts.
• Fixes for subsetting or subassignment of "raster" objects when not both i and j are specified.
• R CMD INSTALL was not always respecting the ZipData: yes field of a DESCRIPTION file (although this is frequently incorrectly specified for packages with no data or which specify lazy-loading of data). R CMD INSTALL --use-zip-data was incorrectly implemented as --use-zipdata since R 2.9.0.
• source(file, echo=TRUE) could fail if the file contained #line directives. It now recovers more gracefully, but may still display the wrong line if the directive gives incorrect information.
• atan(1i) returned NaN+Infi (rather than 0+Infi) on platforms without C99 complex support.
• library() failed to cache S4 metadata (unlike loadNamespace()) causing failures in S4-using packages without a namespace (e.g. those using reference classes).
• The function qlogis(lp, log.p=TRUE) no longer prematurely overflows to Inf when exp(lp) is close to 1.
• Updating S4 methods for a group generic function requires resetting the methods tables for the members of the group (patch contributed by Martin Morgan).
• In some circumstances (including for package XML), R CMD INSTALL installed version-control directories from source packages.
• Added PROTECT calls to some constructed expressions used in C level eval calls.
• utils:::create.post() (used by bug.report() and help.request()) failed to quote arguments to the mailer, and so often failed.
• bug.report() was naive about how to extract maintainer email addresses from package descriptions, so would often try mailing to incorrect addresses.
• debugger() could fail to read the environment of a call to a function with a ... argument. (Reported by Charlie Roosen.)
• prettyNum(c(1i, NA), drop0=TRUE) or str(NA_complex_) now work correctly.
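The qlogis() entry above is a classic floating-point pitfall: when a probability is supplied on the log scale as lp, computing 1 - exp(lp) directly loses everything once exp(lp) rounds to exactly 1. The Python sketch below illustrates the general technique only; it is not R's actual C source. It assumes the standard logit identity qlogis(p) = log(p) - log(1 - p) with p = exp(lp), and the function names are hypothetical. Using expm1 keeps 1 - exp(lp) accurate for lp near 0:

```python
import math


def qlogis_log_naive(lp: float) -> float:
    """Logit of p = exp(lp), computed naively as log(p) - log(1 - p)."""
    p = math.exp(lp)
    # For lp very close to 0, exp(lp) rounds to exactly 1.0,
    # so 1 - p is 0 and the result overflows to infinity.
    one_minus_p = 1.0 - p
    if one_minus_p <= 0.0:
        return math.inf
    return lp - math.log(one_minus_p)


def qlogis_log_stable(lp: float) -> float:
    """Logit of p = exp(lp), using expm1 so that 1 - exp(lp) keeps its precision."""
    if lp > 0.0:
        raise ValueError("a log-probability must be <= 0")
    if lp == 0.0:
        return math.inf  # p == 1 exactly, so the logit really is +Inf
    # -expm1(lp) equals 1 - exp(lp) but is computed accurately for lp near 0.
    return lp - math.log(-math.expm1(lp))


if __name__ == "__main__":
    lp = -1e-20  # p = exp(lp) is 1 minus about 1e-20
    print(qlogis_log_naive(lp))   # inf: premature overflow
    print(qlogis_log_stable(lp))  # about 46.05, i.e. -log(1e-20)
```

The stable version only differs in how 1 - exp(lp) is formed; for ordinary inputs such as lp = log(0.2) both give log(0.25).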


Carole-Ann’s 2011 Predictions for Decision Management

For Ajay Ohri on DecisionStats.com

What were the top 5 events in 2010 in your field?

- Maturity: the Decision Management space was made up of technology vendors, big and small, that typically focused on one or two aspects of this discipline. Over the past few years, we have seen a lot of consolidation in the industry – first with Business Intelligence (BI), then Business Process Management (BPM), and lately in Business Rules Management (BRM) and Advanced Analytics. As a result, the giant Platform vendors have helped create visibility for this discipline. Lots of tiny clues finally bubbled up in 2010 to attest to the increasing activity around Decision Management. For example, more products than ever were named Decision Manager; companies advertised for Decision Managers as a job title in their job sections; most people now understand what I do when I am introduced in a social setting!

- Boredom: unfortunately, as the industry matures, innovation inevitably slows down… At the main BRMS shows we heard complaints here and there that the technology was stalling. We heard it from vendors like Red Hat (Drools) and we heard it from bored end-users hoping for some excitement at Business Rules Forum’s vendor panel. Sadly, they did not get it.

- Scrum: I am not thinking about the methodology here! If you have ever seen a rugby game, you can probably understand why this is the term that comes to mind when I look at the messy & confusing technology landscape. Feet blindly try to kick the ball out while superhuman forces randomly move the whole pack – or so it felt when I played! Business Users in search of Business Solutions are facing more and more technology choices that feel like comparing apples to oranges. There is value in all of them, and each one addresses a specific aspect of Decision Management, but I regret that the industry did not simplify the picture in 2010. On the contrary! Many buzzwords were created, or at least made popular, last year, creating even more confusion on a muddy field. A few examples: Social CRM, Collaborative Decision Making, Adaptive Case Management, etc. Don’t get me wrong, I *do* like the technologies. I sympathize with the decision maker who is trying to pick the right solution, though.

- Information: Analytics have been used for years, of course, but the volume of data surrounding us has been growing to unparalleled levels. We can blame or thank (depending on our perspective) Social Media for that. Sites like Facebook and LinkedIn have made it possible and easy to publish relevant (as well as fluffy) information in real-time. As we all started to get the hang of it and potentially over-publish, technology evolved to enable the storage, correlation and analysis of humongous volumes of data that we could not dream of before. 25 billion tweets were posted in 2010. Every month, over 30 billion pieces of data are shared on Facebook alone. This is not just about vanity and marketing though. This data can be leveraged for the greater good. Carlos pointed to some fascinating facts about catastrophic-event response teams getting organized thanks to crowd-sourced information. We are also seeing, in the Decision Management world, more and more applicability for the very technologies that have been developed for the needs of Big Data – I’ll name, for example, Hadoop, which Carlos (yet again) discussed in his talks at Rules Fest at the end of 2009 and 2010.

- Self-Organization: it may be a side effect of the Social Media movement but I must admit that I was impressed by the success of self-organizing initiatives. Granted, this last trend has nothing to do with Decision Management per se but I think it is a great evolution worth noting. Let me point to a couple of examples. I usually attend traditional conferences and tradeshows in which the content can be good but is sometimes terrible. I was pleasantly surprised by the professionalism and attendance at *un-conferences* such as P-Camp (P stands for Product – an event for Product Managers). When you think about it, it is already difficult to get a show together when people are dedicated to the tasks. How crazy is it to have volunteers set one up with no budget and no agenda? Well, people simply show up to do their part and everyone has fun voting on-site for what seems the most appealing content at the time. Crowdsourcing applied to shows: it works! Similar experience with meetups or tweetups. I also enjoyed attending some impromptu Twitter jam sessions on a given topic. Social Media is certainly helping people reach out and get together in person or virtually and that is wonderful!

What are the top three trends you see in 2011?

- Performance: I might be cheating here. I was very bullish about predicting much progress for 2010 in the area of Performance Management in your Decision Management initiatives. I believe that progress was made but Carlos did not give me full credit for the right prediction… Okay, I am a little optimistic on timelines… I admit it… If it did not fully happen in 2010, can I predict it again in 2011? I think that companies want to better track their business performance in order to correct the trajectory, of course, but also to improve their projections. I see that it is turning into reality already here and there. I expect it to become a trend in 2011!

- Insight: Big Data, made available all around us by new technologies and algorithms, will continue to propagate in 2011, leading to more widespread Analytics capabilities. The buzz at Analytics shows about Social Network Analysis (SNA) is a sign that there is interest in those kinds of things. There is tremendous information that can be leveraged for smart decision-making. I think there will be more of that in 2011 as initiatives launched in 2010 mature into material results.

- Collaboration: Social Media for the Enterprise is a discipline in the making. Social Media was initially seen for the most part as a Marketing channel. Over the years, companies have started experimenting with external communities and ideation capabilities with moderate success. The few strategic initiatives started in 2010 by “old-fashioned” companies seem to be an indication that we are past the early adopters. This discipline may very well materialize in 2011 as a core capability, well, or at least a new trend. I believe that capabilities such as Chatter, offered by Salesforce, will transform (slowly) how people interact in the workplace and leverage the volumes of social data captured in LinkedIn and other Social Media sites. Collaboration is of course a topic of interest for me personally. I even signed up for Kare Anderson’s collaboration collaboration site – yes, twice the word “collaboration”: it is really about collaborating on collaboration techniques. Even though collaboration does not require Social Media, this medium offers perspectives not available until now.

Brief Bio-

Carole-Ann is a renowned guru in the Decision Management space. She created the vision for Decision Management that is widely adopted now in the industry. Her claim to fame is the strategy and direction of Blaze Advisor, the then-leading BRMS product, while she also managed all the Decision Management tools at FICO (business rules, predictive analytics and optimization). She has a vision for Decision Management both as a technology and a discipline that can revolutionize the way corporations do business, and will never get tired of painting that vision for her audience. She speaks often at industry conferences and has conducted university classes in France and Washington DC.

Leveraging her Master’s degree in Applied Mathematics / Computer Science from a “Grande Ecole” in France, she started her career building advanced systems using all kinds of technologies (expert systems, rules, optimization, dashboarding and cubes, web search, and a beta version of database replication), as well as conducting strategic consulting gigs around change management.

She now tweets as @CMatignon, blogs at blog.sparklinglogic.com and interacts at community.sparklinglogic.com.

At Cleversys (acquired by Kurt Salmon & Associates), she conducted strategic consulting gigs, mostly around change management.

While playing with advanced software components, she found a passion for technology and joined ILOG (acquired by IBM). She developed a growing interest in Optimization as well as Business Rules. At ILOG, she coined the term BRMS while brainstorming with her Sales counterpart. She led the Presales organization for Telecom in the Americas up until 2000 when she joined Blaze Software (acquired by Brokat Technologies, HNC Software and finally FICO).

Her 360-degree experience allowed her to gain appreciation for all aspects of a software company, giving her a unique perspective on the business. Her technical background kept her very much in touch with technology as she advanced.

She also became addicted to Twitter in the process. She is active on all kinds of social media, always looking for new digital experiences!

Outside of work, Carole-Ann loves spending time with her two boys. They grow fruits in their Northern California home and cook all together in the French tradition.


PAW Videos

A message from Predictive Analytics World on newly available videos. It includes many free videos as well, so you can check them out.


Access PAW DC Session Videos Now

Predictive Analytics World is pleased to announce on-demand access to the videos of PAW Washington DC, October 2010, including over 30 sessions and keynotes that you may view at your convenience. Access this leading predictive analytics content online now:

- View the PAW DC session videos online
- Register by January 18th and receive $150 off the full 2-day conference program videos (enter code PAW150 at checkout)
- Trial videos – view the following for no charge:

Select individual conference sessions, or recognize savings by registering for access to one or two full days of sessions. These on-demand videos deliver PAW DC right to your desk, covering hot topics and advanced methods such as:

PAW DC videos feature over 25 speakers with case studies from leading enterprises such as CIBC, CEB, Forrester, Macy’s, MetLife, Microsoft, Miles Kimball, Monster.com, Oracle, Paychex, SunTrust, Target, UPMC, Xerox, Yahoo!, YMCA, and more.

How video access works:

Sign up by January 18 for immediate video access and a $150 discount.

||||||||||||

Produced by:

|

Session Gallery: Day 1 of 2

Viewing (17) Sessions of (31)

|

|

|||||||||||

|

|

|||||||||||

|

|

|||||||||||

|

|

|||||||||||

|

|

|||||||||||

|

|

|||||||||||

|

|

|||||||||||

|

|

|||||||||||

|

|

|||||||||||

|

|

|||||||||||

|

|

|||||||||||

|

|

|||||||||||

|

|

|||||||||||

|

|

|||||||||||

|

|

|||||||||||

|

|

|||||||||||

|

|

|||||||

Related Articles

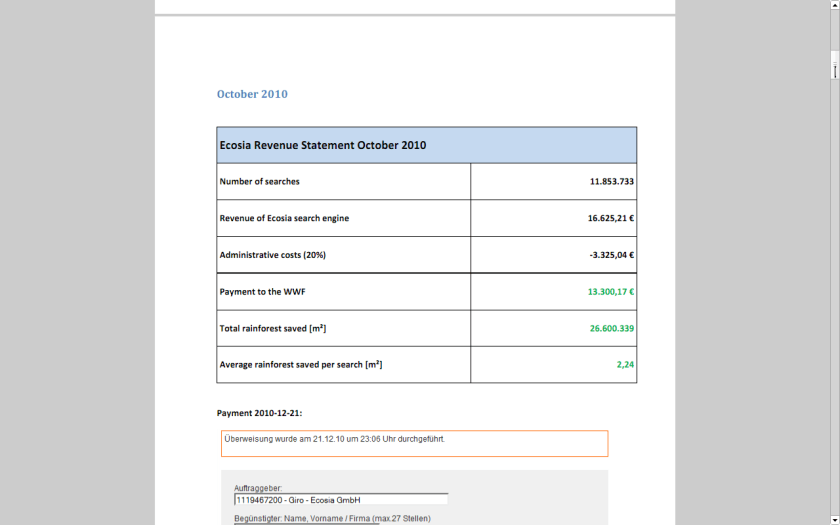

How to balance your online advertising and your offline conscience

I recently found an interesting example of a website that both makes a lot of money and yet is much more efficient than any free service or non-profit. It is called Ecosia.

If you are looking for a website that balances administrative costs with a transparent way to make the world better, this is a great example.

You search with Ecosia.

Key facts about the park:

- World’s largest tropical forest reserve (38,867 square kilometers, or about the size of Switzerland)

- Home to about 14% of all amphibian species and roughly 54% of all bird species in the Amazon – not to mention large populations of at least eight threatened species, including the jaguar

- Includes part of the Guiana Shield, containing 25% of the world’s remaining tropical rainforests – 80 to 90% of which are still pristine

- Holds the last major unpolluted water reserves in the Neotropics, containing approximately 20% of all of the Earth’s water

- One of the last tropical regions on Earth largely unaltered by humans

- Significant contributor to climatic regulation via heat absorption and carbon storage

http://ecosia.org/statistics.php

They claim to have donated 141,529.42 EUR!!!

http://static.ecosia.org/files/donations.pdf

Well, suppose you are the web admin of a very popular website like Wikipedia.

One way to meet server costs is to say openly: "Hey, I need to balance my costs, so I need some money."

The other way is to use online advertising.

I started mine with Google Adsense.

Cost per mille (CPM, i.e. per thousand impressions) gives you a very low conversion compared to contacting an ad sponsor directly.

But it’s a great data experiment, as you can monitor:

- which companies are likely to be advertised on your site (assume Google knows more about its algorithms than you will)
- which formats (banner, text or Flash) have what kind of conversion rates
- what the expected payoff rates are from various keywords or companies (for example, business intelligence software, predictive analytics software and statistical computing software are similar but have different expected returns, if you remember your econ class)

Now, based on the above data, you know the minimum baseline to expect from a private advertiser, as opposed to a public, crowd-sourced search engine network (like Google or Bing).

Let’s say you have 100,000 page views monthly, and assume one out of 1,000 page views leads to a click. Say the advertiser pays you $1 for every click (that is, $1 per 1,000 impressions at this click-through rate).

Then your expected revenue is $100 (100 clicks × $1). But if your clicks are priced at $2.50 each, and your click-through rate rises to 3 out of 1,000 impressions (both very moderate increases that can be achieved by basic placement optimization of ad type, graphics, etc.), your new revenue is $750 (300 clicks × $2.50).

Be a good Samaritan: you decide to share some of this with your audience, like 4 Amazon books per month (or 1 free Amazon book per week). That gives you a cost of $200 and leaves you with some $550.
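The revenue arithmetic above can be checked with a short Python sketch. It assumes revenue = page views × (clicks per 1,000 page views / 1,000) × price per click, which reproduces the $100, $750 and $550 figures in the text; the function and variable names are hypothetical.

```python
def ad_revenue(page_views: int, clicks_per_1000: float, price_per_click: float) -> float:
    """Expected monthly ad revenue: number of clicks times the price per click."""
    clicks = page_views * clicks_per_1000 / 1000
    return clicks * price_per_click


monthly_views = 100_000

# Baseline: 1 click per 1,000 page views, $1 per click.
baseline = ad_revenue(monthly_views, clicks_per_1000=1, price_per_click=1.00)

# After basic placement optimization: 3 clicks per 1,000, $2.50 per click.
optimized = ad_revenue(monthly_views, clicks_per_1000=3, price_per_click=2.50)

# Giving away 4 Amazon books a month, at the $200 total cost used in the text.
book_giveaway = 200.00
remaining = optimized - book_giveaway

print(f"baseline:  ${baseline:,.2f}")   # baseline:  $100.00
print(f"optimized: ${optimized:,.2f}")  # optimized: $750.00
print(f"remaining: ${remaining:,.2f}")  # remaining: $550.00
```

Changing clicks_per_1000 or price_per_click shows how sensitive the result is to each lever: tripling the click-through rate and raising the click price 2.5× together multiply revenue by 7.5.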

Wait! It doesn’t end there: Adam Smith’s invisible hand moves on.

You say: hmm, let me put $100 toward an annual paper-writing contest of $1,000, donate $200 to One Laptop per Child (or to the Amazon rain forests, or to Haiti, etc.), pay $100 for upgraded server hosting, and put $350 into online advertising: say $200 for search engines and $150 for Facebook.

Woah!

Month 1 should see more people visiting you for the first time. If you have a good return rate (returning visitors as a percentage) and a low bounce rate (visits of less than 5 seconds), your traffic should see at least a 20% jump in new arrivals and 5-10% in long-term arrivals. Ignoring bounces, within three months you will have one of the following:

1) An interesting case study on statistics on online and social media advertising, tangible motivations for increasing community response, and some good data for study

2) Hopefully, better cost management of your server expenses

3) Very hopefully, a positive cash flow

You could even set a percentage and share the monthly (or, better, annual) results with your readers and advertisers.

Go ahead: change the world!

The key paradigms here are:

- sharing your traffic and revenue openly with everyone
- donating to a suitable cause
- helping increase awareness of the suitable cause
- basing allocations on fixed percentages rather than absolute numbers, to ensure your site and cause are sustained for years
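The last point, fixed percentages rather than absolute amounts, can be sketched in a few lines of Python. The percentage split below is purely hypothetical (the text gives dollar amounts, not percentages), and the function name is an illustration; the point is only that every allocation scales with whatever the month actually earns, with the unallocated remainder kept as a reserve.

```python
from typing import Dict


def allocate(revenue: float, shares: Dict[str, float]) -> Dict[str, float]:
    """Split revenue by fixed fractions; whatever is not allocated is kept as reserve."""
    if sum(shares.values()) > 1.0:
        raise ValueError("shares must not add up to more than 100%")
    split = {name: round(revenue * share, 2) for name, share in shares.items()}
    split["reserve"] = round(revenue - sum(split.values()), 2)
    return split


# Hypothetical fixed percentages, for illustration only.
SHARES = {
    "reader giveaways": 0.25,
    "donation": 0.20,
    "hosting": 0.10,
    "advertising": 0.30,
}

for month_revenue in (100.0, 750.0):
    print(month_revenue, allocate(month_revenue, SHARES))
```

With these hypothetical shares, a $100 month sets aside $20 for the donation and a $750 month sets aside $150, so the commitments never exceed what the site actually earns.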


Jobs in Analytics

Here are some jobs from Vincent Granville, founder of AnalyticBridge. Please contact him directly; I just thought the Season of Joy should have better jobs than it currently does.

————————————————————————————–

Several job ads were recently posted on DataShaping / AnalyticBridge, across the United States and in Europe. Use the DataShaping search box to find more opportunities.

Job ads are posted at:

Selected opportunities:

Quantitative Modeling Consultants – Agilex (Alexandria, VA)

Sr. Software Development Engineers – Agilex (Alexandria, VA)

Actuary – FBL Financial Group (Des Moines, IA)

Relevance Scientist – Yandex Labs (Palo Alto, CA)

Research Engineer, Search Ranking – Chomp (San Francisco, CA)

Mathematical Modeling and Optimization – Exxon (Clinton, NJ)

Data Analyst – DISH Network (Englewood, CO)

Sr Aviation Planning Research & Data Analyst – Port of Seattle (Seattle, WA)

Statistician / Quantitative Analyst – Indeed (Austin, TX)

Statistician – Pratt & Whitney (East Hartford, CT)

Biostatistician – The J. David Gladstone Institutes (San Francisco, CA)

Customer Service Representative (Oklahoma, OK)

Program Associate – Cambridge Systematics (Washington, DC)

Sr Risk Analyst – Paypal (Omaha, NE)

Sr. Actuarial Analyst – Farmers (Simi Valley, CA)

Senior Statistician, Data Services – Equifax (Alpharetta, GA)

Business Intelligence Analyst – Burberry (NYC, NY)

Fact Extraction – Amazon (Seattle, WA)

Senior Researcher – Bing (Bellevue, WA)

Senior Statistical Research Analyst – Walt Disney (Lake Buena Vista, FL)

Statistician – Capital One (Nottingham, NH)

Lead Data Analyst – Barclays (Northampton, UK)

Analytical Data Scientist – Aviagen (Huntsville, AL or Edinburgh, UK)

VP of Engineering for Analytics (Bay Area, CA)

Senior Software Engineer – Numenta (Redwood City, CA)

Numenta Internship Program – Numenta (Redwood City, CA)

Director of Analytics – Mozilla Corporation (Mountain View, CA)

Senior Sales Engineer – Statsoft (NY, NY)


PAWCON - This week in London

Watch out for the Twitter hashtag news on PAWCON and the exciting agenda lined up. If you’re in the City, you may want to just drop in.

http://www.predictiveanalyticsworld.com/london/2010/agenda.php#day1-7

Disclaimer: PAWCON has been a blog partner of DecisionStats (since the first PAWCON). It is vendor neutral and features open source as well as proprietary software, as well as case studies from academia and industry, for a balanced view.

A little birdie told me some exciting product enhancements may be in the works, including a not-yet-announced R plugin 😉, the latest SAS product using embedded analytics, and Dr. Elder’s full-day data mining workshop.

Citation-

http://www.predictiveanalyticsworld.com/london/2010/agenda.php#day1-7

Monday November 15, 2010

All conference sessions take place in Edward 5-7

Registration, Coffee and Danish

Room: Albert Suites

Keynote

Five Ways Predictive Analytics Cuts Enterprise Risk

All business is an exercise in risk management. All organizations would benefit from measuring, tracking and computing risk as a core process, much like insurance companies do.

Predictive analytics does the trick, one customer at a time. This technology is a data-driven means to compute the risk each customer will defect, not respond to an expensive mailer, consume a retention discount even if she were not going to leave in the first place, not be targeted for a telephone solicitation that would have landed a sale, commit fraud, or become a “loss customer” such as a bad debtor or an insurance policy-holder with high claims.

In this keynote session, Dr. Eric Siegel will reveal:

- Five ways predictive analytics evolves your enterprise to reduce risk

- Hidden sources of risk across operational functions

- What every business should learn from insurance companies

- How advancements have reversed the very meaning of fraud

- Why “man + machine” teams are greater than the sum of their parts for enterprise decision support

Speaker: Eric Siegel, Ph.D., Program Chair, Predictive Analytics World


Platinum Sponsor Presentation

The Analytical Revolution

The algorithms at the heart of predictive analytics have been around for years – in some cases for decades. But now, as we see predictive analytics move to the mainstream and become a competitive necessity for organisations in all industries, the most crucial challenges are to ensure that results can be delivered to where they can make a direct impact on outcomes and business performance, and that the application of analytics can be scaled to the most demanding enterprise requirements.

This session will look at the obstacles to successfully applying analysis at the enterprise level, and how today’s approaches and technologies can enable the true “industrialisation” of predictive analytics.

Speaker: Colin Shearer, WW Industry Solutions Leader, IBM UK Ltd


Gold Sponsor Presentation

How Predictive Analytics is Driving Business Value

Organisations are increasingly relying on analytics to make key business decisions. Today, technology advances and the increasing need to realise competitive advantage in the marketplace are driving predictive analytics from the domain of marketers and tactical one-off exercises to the point where analytics are being embedded within core business processes.

During this session, Richard will share some of the focus areas where Deloitte is driving business transformation through predictive analytics, including Workforce, Brand Equity and Reputational Risk, Customer Insight and Network Analytics.

Speaker: Richard Fayers, Senior Manager, Deloitte Analytical Insight


Break / Exhibits

Room: Albert Suites

10:45am-11:35am

Healthcare

Case Study: Life Line Screening

Taking CRM Global Through Predictive Analytics

While Life Line is successfully executing a US CRM roadmap, they are also beginning this same evolution abroad. They are beginning in the UK where Merkle procured data and built a response model that is pulling responses over 30% higher than competitors. This presentation will give an overview of the US CRM roadmap, and then focus on the beginning of their strategy abroad, focusing on the data procurement they could not get anywhere else but through Merkle and the successful modeling and analytics for the UK.

Speaker: Ozgur Dogan, VP, Quantitative Solutions Group, Merkle Inc.

Speaker: Trish Mathe, Life Line Screening


11:35am-12:25pm

Open Source Analytics; Healthcare

Case Study: A large health care organization

The Rise of Open Source Analytics: Lowering Costs While Improving Patient Care

RapidMiner and R were numbers 1 and 2 in this year’s annual KDnuggets data mining tool usage poll, followed by KNIME in 4th place and Weka in 6th. So what’s going on here? Are these open source tools really that good, or is their popularity strongly correlated with lower acquisition costs alone? This session answers these questions based on a real-world case for a large health care organization and explains the risks & benefits of using open source technology. The final part of the session explains how these tools stack up against their traditional, proprietary counterparts.

Speaker: Jos van Dongen, Associate & Principal, DeltIQ Group


Lunch / Exhibits

Room: Albert Suites

1:25pm-2:15pm

Keynote

Thought Leader:

Case Study: Yahoo! and other large on-line e-businesses

Search Marketing and Predictive Analytics: SEM, SEO and On-line Marketing Case Studies

Search Engine Marketing is a $15B industry in the U.S. and is expected to double in size over the next three years. Worldwide, the SEM market was over $50B in 2010. Not only is this a fast-growing area of marketing, but it also has significant implications for brand and direct marketing and is undergoing rapid change with emerging channels such as mobile and social. What sets this area of marketing apart is its unusually heavy dependence on analytics:

- Large numbers of variables and options

- Real-time auctions/bids and a need to adjust strategies in real-time

- Difficult optimization problems on allocating spend across a huge number of keywords

- Fast-changing competitive terrain and heavy competition on the obvious channels

- Complicated interactions between various channels and a large choice of search keyword expansion possibilities

- Profitability and ROI analyses that are complex and often challenging

The size of the industry, its growing importance in marketing, its upcoming role in mobile advertising, and its uniquely heavy reliance on analytics make it particularly interesting as an area for predictive analytics applications. In this session, not only will you hear about some of the latest strategies and techniques to optimize search, you will also hear case studies from industry practitioners that illustrate the important role of analytics.

Speaker: Usama Fayyad, Ph.D., CEO, Open Insights

Platinum Sponsor Presentation

Creating a Model Factory Using in-Database Analytics

With the ever-increasing number of analytical models required to make fact-based decisions, as well as increasing audit compliance regulations, it is more important than ever that these models can be created, monitored, retuned and deployed as quickly and automatically as possible. This paper, using a case study from a major financial organisation, will show how organisations can build a model factory efficiently using the latest SAS technology that utilizes the power of in-database processing.

Speaker: John Spooner, Analytics Specialist, SAS (UK)

Session Break

Room: Albert Suites

Retail

Case Study: SABMiller

Predictive Analytics & Global Marketing Strategy

Over the last few years SABMiller plc, the second-largest brewing company in the world, operating in 70 countries, has been systematically segmenting its markets in different countries in order to optimize its portfolio strategy and align it with its long-term, country-specific growth strategies. This presentation describes the overall methodology followed and the challenges that had to be overcome, from both a technical and a change management standpoint, in order to successfully implement a standard analytics approach across diverse markets and diverse business positions in a highly global setting.

The session explains how country-specific growth strategies were converted into objective variables, and how consumption occasion segments were created that differentiated the market effectively by growth potential. The presentation will also discuss issues such as:

- The dilemmas of static vs. dynamic solutions and standardization vs. adaptable solutions

- Challenges in acceptability, local capability development, overcoming implementation inertia, cost-effectiveness, etc.

- The role that business partners at SAB and analytics service partners at AbsolutData together play in providing impactful and actionable solutions

Speaker: Anne Stephens, SABMiller plc

Speaker: Titir Pal, AbsolutData

Retail

Case Study: Overtoom Belgium

Increasing Marketing Relevance Through Personalized Targeting

For many years, Overtoom Belgium, a leading B2B retailer and a division of the French Manutan group, has made extensive use of CRM. In this presentation, we demonstrate how Overtoom has integrated predictive analytics to optimize customer relationships. In this process, Overtoom employs analytics to answer the key question: “which product should we offer to which customer via which channel”. We show how Overtoom gained a 10% revenue increase by replacing its existing segmentation scheme with accurate predictive response models. Additionally, we illustrate how Overtoom succeeds in delivering more relevant communications by offering personalized promotional content to every single customer, and how these personalized offers positively impact Overtoom’s conversion rates.

Speaker: Dr. Geert Verstraeten, Python Predictions

Break / Exhibits

Room: Albert Suites

4:50pm-5:40pm

Uplift Modelling

Case Study: Lloyds TSB General Insurance & US Bank

Uplift Modelling: You Should Not Only Measure But Model Incremental Response

Most marketing analysts understand that measuring the impact of a marketing campaign requires a valid control group so that uplift (incremental response) can be reported. However, it is much less widely understood that the targeting models used almost everywhere do not attempt to optimize that incremental measure. That requires an uplift model.

This session will explain why a switch to uplift modelling is needed, illustrate what can and does go wrong when uplift models are not used, and show the hugely positive impact they can have when used effectively. It will also discuss a range of approaches to building and assessing uplift models, from simple adjustments to existing modelling processes through to full-blown uplift modelling.

The talk will use Lloyds TSB General Insurance & US Bank as a case study and also illustrate real-world results from other companies and sectors.
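The difference between targeting on response and targeting on uplift can be illustrated with a small segment-level calculation. This is a minimal sketch under stated assumptions, not the method used in the talk: the segment names and counts below are hypothetical, invented only for illustration, and a per-segment difference in response rates is the simplest possible uplift estimate rather than a full uplift model. Uplift is computed as the treated group's response rate minus the control group's response rate.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    """Campaign results for one customer segment (hypothetical counts)."""
    name: str
    treated_responders: int  # responders among customers who received the campaign
    treated_size: int
    control_responders: int  # responders in the randomly held-out control group
    control_size: int

    def response_rate(self) -> float:
        # What a conventional response model tries to predict
        return self.treated_responders / self.treated_size

    def uplift(self) -> float:
        # Incremental response: treated response rate minus control response rate
        return (self.treated_responders / self.treated_size
                - self.control_responders / self.control_size)


# Illustrative numbers only: segment A responds well with or without the
# campaign, while segment B responds mainly because of it.
segments = [
    Segment("A", treated_responders=300, treated_size=1000,
            control_responders=290, control_size=1000),
    Segment("B", treated_responders=150, treated_size=1000,
            control_responders=50, control_size=1000),
]

by_response = [s.name for s in sorted(segments, key=Segment.response_rate, reverse=True)]
by_uplift = [s.name for s in sorted(segments, key=Segment.uplift, reverse=True)]

for s in segments:
    print(f"{s.name}: response rate {s.response_rate():.2f}, uplift {s.uplift():.2f}")
print("Ranked by response rate:", by_response)
print("Ranked by uplift:", by_uplift)
```

With these made-up counts, ranking by response rate puts segment A first even though the campaign adds almost nothing there, while ranking by uplift puts segment B first, which is the gap between measuring incremental response and optimizing for it.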

Speaker: Nicholas Radcliffe, Founder and Director, Stochastic Solutions

Consumer services

Case Study: Canadian Automobile Association and other B2C examples

The Diminishing Marginal Returns of Variable Creation in Predictive Analytics Solutions

Variable creation is key to success in any predictive analytics exercise. Many different approaches are adopted during this process, yet there are diminishing marginal returns as the number of variables increases. Our organization conducted a case study on four existing clients to explore this so-called diminishing impact of variable creation on predictive analytics solutions. The existing predictive analytics solutions were built using our traditional variable creation process; we then wanted to determine whether exponentially increasing the number of variables would add significant benefit to those solutions.

Speaker: Richard Boire, BoireFillerGroup

Reception / Exhibits

Room: Albert Suites

Tuesday November 16, 2010

All conference sessions take place in Edward 5-7

Registration, Coffee and Danish

Room: Albert Suites

9:00am-9:55am

Keynote

Multiple Case Studies: Anheuser-Busch, Disney, HP, HSBC, Pfizer, and others

The High ROI of Data Mining for Innovative Organizations

Data mining and advanced analytics can enhance your bottom line in three basic ways, by 1) streamlining a process, 2) eliminating the bad, or 3) highlighting the good. In rare situations, a fourth way – creating something new – is possible. But modern organizations are so effective at their core tasks that data mining usually results in an iterative, rather than transformative, improvement. Still, the impact can be dramatic.

Dr. Elder will share the story (problem, solution, and effect) of nine projects conducted over the last decade for some of America’s most innovative agencies and corporations:

- Streamline:

  - Cross-selling for HSBC

  - Image recognition for Anheuser-Busch

  - Biometric identification for Lumidigm (for Disney)

  - Optimal decisioning for Peregrine Systems (now part of Hewlett-Packard)

  - Quick decisions for the Social Security Administration

- Eliminate Bad:

  - Tax fraud detection for the IRS

  - Warranty fraud detection for Hewlett-Packard

- Highlight Good:

  - Sector trading for WestWind Foundation

  - Drug efficacy discovery for Pharmacia & Upjohn (now Pfizer)

Moderator: Eric Siegel, Program Chair, Predictive Analytics World

Speaker: John Elder, Ph.D., Elder Research, Inc.

Also see Dr. Elder’s full-day workshop

Break / Exhibits

Room: Albert Suites

10:30am-11:20am

Telecommunications

Case Study: Leading Telecommunications Operator

Predictive Analytics and Efficient Fact-based Marketing

The presentation describes the major topics and issues that arise when you introduce predictive analytics, and how to build a fact-based marketing environment. The tools and methodologies introduced proved highly efficient at improving the overall direct marketing activity and customer contact operations of the companies involved. In general, these approaches have great potential for organizations with large customer bases, such as mobile operators, internet giants, media companies, or retail chains.

Main Introduced Solutions:

- Automated Serial Production of Predictive Models for Campaign Targeting

- Automated Campaign Measurements and Tracking Solutions

- Precise Product Added Value Evaluation

Speaker: Tamer Keshi, Ph.D., Long-term contractor, T-Mobile

Speaker: Beata Kovacs, International Head of CRM Solutions, Deutsche Telekom

Session Changeover

11:25am-12:15pm

Thought Leader

Nine Laws of Data Mining

Data mining is the predictive core of predictive analytics, a business process that finds useful patterns in data through the use of business knowledge. The industry standard CRISP-DM methodology describes the process, but does not explain why the process takes the form that it does. I present nine “laws of data mining”, useful maxims for data miners, with explanations that reveal the reasons behind the surface properties of the data mining process. The nine laws have implications for predictive analytics applications: how and why it works so well, which ambitions could succeed, and which must fail.

Lunch / Exhibits

Room: Albert Suites

1:30pm-2:25pm

Expert Panel: Kaboom! Predictive Analytics Hits the Mainstream

Predictive analytics has taken off, across industry sectors and across applications in marketing, fraud detection, credit scoring and beyond. Where exactly are we in the process of crossing the chasm toward pervasive deployment, and how can we ensure progress keeps up the pace and stays on target?

This expert panel will address:

- How much of predictive analytics’ potential has been fully realized?

- Where are the outstanding opportunities with greatest potential?

- What are the greatest challenges faced by the industry in achieving wide-scale adoption?

- How are these challenges best overcome?

Panelist: John Elder, Ph.D., Elder Research, Inc.

Panelist: Colin Shearer, WW Industry Solutions Leader, IBM UK Ltd

Panelist: Udo Sglavo, Global Analytic Solutions Manager, SAS

Panel moderator: Eric Siegel, Ph.D., Program Chair, Predictive Analytics World

Session Changeover

2:30pm-3:20pm

Crowdsourcing Data Mining

Case Study: University of Melbourne, Chessmetrics

Prediction Competitions: Far More Than Just a Bit of Fun

Data modelling competitions allow companies and researchers to post a problem and have it scrutinised by the world’s best data scientists. A vast number of techniques can be applied to any modelling task, and it is impossible to know at the outset which will be most effective. By exposing the problem to a wide audience, competitions are a cost-effective way to reach the frontier of what is possible with a given dataset. The power of competitions is neatly illustrated by the results of a recent bioinformatics competition hosted by Kaggle, which required participants to pick markers in HIV’s genetic sequence that coincide with changes in the severity of infection. Within a week and a half, the best entry had already outperformed the best methods in the scientific literature. This presentation will cover how competitions typically work, some case studies, and the types of business modelling challenges that the Kaggle platform can address.

Speaker: Anthony Goldbloom, Kaggle Pty Ltd

Break / Exhibits

Room: Albert Suites

3:50pm-4:40pm

Human Resources; e-Commerce

Case Study: Naukri.com, Jeevansathi.com

Increasing Marketing ROI and Efficiency of Candidate-Search with Predictive Analytics

InfoEdge, India’s largest and most profitable online firm, with a portfolio of internet properties, has been Google’s biggest customer in India. Our team used predictive modeling to double our profits across multiple fronts. For Naukri.com, India’s number 1 job portal, predictive models target the jobseekers most relevant to each recruiter. Analytical insights provided a deeper understanding of recruiter behaviour and informed a redesign of the product’s recruiter search functionality. This session will describe how we did it, and will also reveal how Jeevansathi.com, India’s second-largest matrimony portal, targets the acquisition of consumers in the market for marriage.

[ Top of this page ] [ Agenda overview ]

Speaker: Eric Siegel, Ph.D., Program Chair, Predictive Analytics World

Wednesday November 17, 2010

Full-day Workshop

The Best and the Worst of Predictive Analytics:

Predictive Modeling Methods and Common Data Mining Mistakes

- Workshop starts at 9:00am

- First AM break: 10:00am-10:15am

- Second AM break: 11:15am-11:30am

- Lunch: 12:30pm-1:15pm

- First PM break: 2:00pm-2:15pm

- Second PM break: 3:15pm-3:30pm

- Workshop ends at 4:30pm

Speaker: John Elder, Ph.D., CEO and Founder, Elder Research, Inc.