Tag: python

Change Python Version for Jupyter Notebook

There are three ways to do it. Sometimes package dependencies force analysts and developers to use an older version of Python.

1. Use conda to downgrade the Python version (if Anaconda is already installed):

conda install python=3.5.0

Hat tip: http://chris35wills.github.io/conda_python_version/

https://docs.anaconda.com/anaconda/faq#how-do-i-get-the-latest-anaconda-with-python-3-5

2. Download the latest version of Anaconda and then create a Python 3.5 environment.

To create the new environment for Python 3.5, in your Terminal window or an Anaconda Prompt, run:

conda create -n py35 python=3.5 anaconda

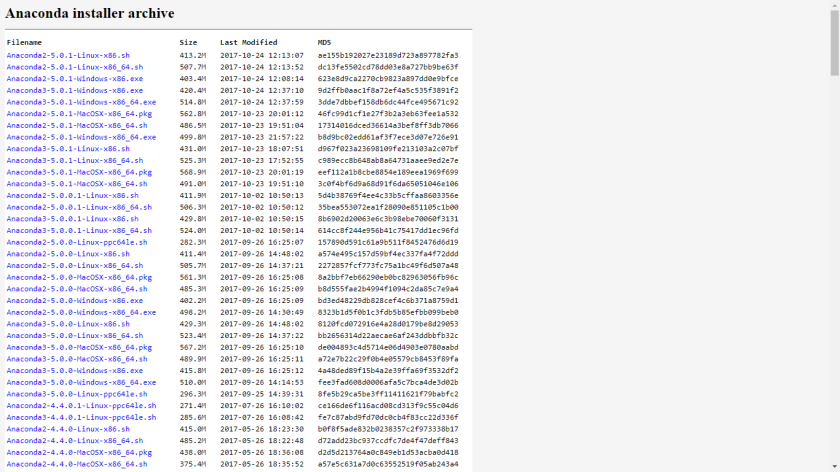

3. Uninstall Anaconda and install an older version of Anaconda from https://repo.continuum.io/archive/ (download the most recent Anaconda that included Python 3.5 by default, Anaconda 4.2.0).
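Whichever of the three routes you take, it helps to confirm which interpreter the notebook kernel is actually running. The snippet below is a minimal sketch using only the standard library; the helper name kernel_python_info is made up for this example. It avoids f-strings so that it also runs on Python 3.5.

```python
import sys


def kernel_python_info():
    """Return the interpreter path and the (major, minor) version of this kernel."""
    # kernel_python_info is a hypothetical helper name, not part of Jupyter or conda.
    return sys.executable, (sys.version_info.major, sys.version_info.minor)


executable, version = kernel_python_info()
print("Kernel interpreter: {}".format(executable))
print("Python version: {}.{}".format(version[0], version[1]))
```

Run it in a notebook cell after switching environments. If the version printed is not the one you installed, the notebook is probably still using a kernel from a different environment.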

Importing data from csv file using PySpark

There are two ways to import a CSV file: as an RDD or as a Spark DataFrame (preferred). MLlib (spark.mllib) is built around RDDs, while ML (spark.ml) is generally built around DataFrames. See https://spark.apache.org/docs/latest/mllib-clustering.html and https://spark.apache.org/docs/latest/ml-clustering.html

!pip install pyspark

from pyspark import SparkContext, SparkConf

sc = SparkContext()

A SparkContext represents the connection to a Spark cluster, and can be used to create RDDs and broadcast variables on that cluster. https://spark.apache.org/docs/latest/rdd-programming-guide.html#overview

To create a SparkContext you first need to build a SparkConf object that contains information about your application. Only one SparkContext may be active per JVM. You must stop() the active SparkContext before creating a new one.

SparkConf holds the configuration for a Spark application. It is used to set various Spark parameters as key-value pairs.
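The SparkConf-then-SparkContext sequence described above can be sketched as follows. This is a hedged illustration, not the only way to do it: the function name make_local_context, the application name and the local[*] master are assumptions chosen for the example. It uses SparkContext.getOrCreate so that an already-active context is reused rather than triggering the one-context-per-JVM error.

```python
def make_local_context(app_name="csv-import-demo", master="local[*]"):
    """Build a SparkConf, then return the active SparkContext (creating one if needed)."""
    # Imported inside the function so the sketch loads even where pyspark is not installed.
    from pyspark import SparkConf, SparkContext

    # app_name and master are illustrative defaults, not values from the original post.
    conf = SparkConf().setAppName(app_name).setMaster(master)

    # getOrCreate returns the existing context if one is already active.
    return SparkContext.getOrCreate(conf=conf)
```

Calling sc = make_local_context() returns a usable context. Call sc.stop() before building a context with a different configuration.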

dir(SparkContext)

['PACKAGE_EXTENSIONS', '__class__', '__delattr__', '__dict__', '__dir__', '__doc__', '__enter__', '__eq__', '__exit__', '__format__', '__ge__', '__getattribute__', '__getnewargs__', '__gt__', '__hash__', '__init__', '__init_subclass__', '__le__', '__lt__', '__module__', '__ne__', '__new__', '__reduce__', '__reduce_ex__', '__repr__', '__setattr__', '__sizeof__', '__str__', '__subclasshook__', '__weakref__',

'_active_spark_context', '_dictToJavaMap', '_do_init', '_ensure_initialized', '_gateway', '_getJavaStorageLevel', '_initialize_context', '_jvm', '_lock', '_next_accum_id', '_python_includes', '_repr_html_',

'accumulator', 'addFile', 'addPyFile', 'applicationId', 'binaryFiles', 'binaryRecords', 'broadcast', 'cancelAllJobs', 'cancelJobGroup', 'defaultMinPartitions', 'defaultParallelism', 'dump_profiles', 'emptyRDD', 'getConf', 'getLocalProperty', 'getOrCreate', 'hadoopFile', 'hadoopRDD', 'newAPIHadoopFile', 'newAPIHadoopRDD', 'parallelize', 'pickleFile', 'range', 'runJob', 'sequenceFile', 'setCheckpointDir', 'setJobGroup', 'setLocalProperty', 'setLogLevel', 'setSystemProperty', 'show_profiles', 'sparkUser', 'startTime', 'statusTracker', 'stop', 'textFile', 'uiWebUrl', 'union', 'version', 'wholeTextFiles']

# Load the data as an RDD.

data = sc.textFile("C:/Users/Ajay/Desktop/test/new_sample.csv")

type(data)

# Load the same file as a Spark DataFrame.

from pyspark.sql import SparkSession

spark = SparkSession.builder \
    .master("local") \
    .appName("Data cleaning") \
    .getOrCreate()

dataframe2 = spark.read.format("csv").option("header", "true").option("mode", "DROPMALFORMED").load("C:/Users/Ajay/Desktop/test/new_sample.csv")

type(dataframe2)

Is Python going to be better than R for Big Data Analytics and Data Science? #rstats #python

Until now, the R ecosystem of package developers has mostly shrugged off the Big Data question. Hadley Wickham offered a fascinating insight in a recent interview; shockingly, it mimics the FUD that you-know-who has been accused of (source: https://peadarcoyle.wordpress.com/2015/08/02/interview-with-a-data-scientist-hadley-wickham/):

I think there are two particularly important transition points:

* From in-memory to disk. If your data fits in memory, it’s small data. And these days you can get 1 TB of ram, so even small data is big!

* From one computer to many computers.

R is a fantastic environment for the rapid exploration of in-memory data, but there’s no elegant way to scale it to much larger datasets. Hadoop works well when you have thousands of computers, but is incredible slow on just one machine. Fortunately, I don’t think one system needs to solve all big data problems.

To me there are three main classes of problem:

1. Big data problems that are actually small data problems, once you have the right subset/sample/summary.

2. Big data problems that are actually lots and lots of small data problems

3. Finally, there are irretrievably big problems where you do need all the data, perhaps because you fitting a complex model. An example of this type of problem is recommender systems

Ajay: One reason R Big Data packages have not been developed is that development takes money. The private sector in the R ecosystem is a duopoly: Revolution Analytics (acquired by Microsoft) and RStudio (created by Microsoft alum JJ Allaire). Since RStudio as a company actively tries NOT to step into areas Revolution Analytics works in, it has, in my opinion, stayed out of Big Data for strategic reasons.

Revolution Analytics' RHadoop project is actually just one consultant working on it (https://github.com/RevolutionAnalytics/RHadoop), and it has not been updated in six months.

We interviewed the creator of R Hadoop here https://decisionstats.com/2014/07/10/interview-antonio-piccolboni-big-data-analytics-rhadoop-rstats/

However, Python developers have been actively building systems for Big Data. The Hadoop ecosystem and the Python ecosystem are much more FOSS friendly, even in enterprise solutions.

This is where Python is innovating over R in Big Data:

http://blaze.pydata.org/en/latest/

- Blaze: Translates NumPy/Pandas-like syntax to systems like databases.

Blaze presents a pleasant and familiar interface to us regardless of what computational solution or database we use. It mediates our interaction with files, data structures, and databases, optimizing and translating our query as appropriate to provide a smooth and interactive session.

- Odo: Migrates data between formats.

Odo moves data between formats (CSV, JSON, databases) and locations (local, remote, HDFS) efficiently and robustly with a dead-simple interface by leveraging a sophisticated and extensible network of conversions. http://odo.pydata.org/en/latest/perf.html

odo takes two arguments, a source and a target for a data transfer.

>>> from odo import odo

>>> odo(source, target) # load source into target

- Dask.array: Multi-core / on-disk NumPy arrays

Dask.arrays provide blocked algorithms on top of NumPy to handle larger-than-memory arrays and to leverage multiple cores. They are a drop-in replacement for a commonly used subset of NumPy algorithms.

- DyND: In-memory dynamic arrays

DyND is a dynamic ND-array library like NumPy. It supports variable length strings, ragged arrays, and GPUs. It is a standalone C++ codebase with Python bindings. Generally it is more extensible than NumPy but also less mature. https://github.com/libdynd/libdynd

The core DyND developer team consists of Mark Wiebe and Irwin Zaid. Much of the funding that made this project possible came through Continuum Analytics and DARPA-BAA-12-38, part of XDATA.

LibDyND, a component of the Blaze project, is a C++ library for dynamic, multidimensional arrays. It is inspired by NumPy, the Python array programming library at the core of the scientific Python stack, but tries to address a number of obstacles encountered by some of its users. Examples of this are support for variable-sized string and ragged array types. The library is in a preview development state, and can be thought of as a sandbox where features are being tried and tweaked to gain experience with them.

C++ is a first-class target of the library, the intent is that all its features should be easily usable in the language. This has many benefits, such as that development within LibDyND using its own components is more natural than in a library designed primarily for embedding in another language.

This library is being actively developed together with its Python bindings.

http://dask.pydata.org/en/latest/

On a single machine dask increases the scale of comfortable data from fits-in-memory to fits-on-disk by intelligently streaming data from disk and by leveraging all the cores of a modern CPU.

Users interact with dask either by making graphs directly or through the dask collections which provide larger-than-memory counterparts to existing popular libraries:

- dask.array = numpy + threading

- dask.bag = map, filter, toolz + multiprocessing

- dask.dataframe = pandas + threading

Dask primarily targets parallel computations that run on a single machine. It integrates nicely with the existing PyData ecosystem and is trivial to setup and use:

conda install dask

or

pip install dask
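To make the "blocked algorithm" idea concrete, here is a plain-Python sketch of computing a mean one block at a time, so that only one block is ever held in memory. This is not how Dask is implemented and it uses none of Dask's API. The helper names iter_blocks and blocked_mean are invented for this illustration, and the generator merely stands in for data streamed from disk.

```python
from itertools import islice


def iter_blocks(values, block_size):
    """Yield successive lists of at most block_size items from any iterable."""
    iterator = iter(values)
    while True:
        block = list(islice(iterator, block_size))
        if not block:
            return
        yield block


def blocked_mean(values, block_size=1000):
    """Compute a mean while holding only one block in memory at a time."""
    total = 0.0
    count = 0
    for block in iter_blocks(values, block_size):
        # Each block contributes a partial sum and a partial count.
        total += sum(block)
        count += len(block)
    if count == 0:
        raise ValueError("blocked_mean() requires at least one value")
    return total / count


# A generator stands in for values streamed from disk.
result = blocked_mean((x for x in range(1, 1001)), block_size=64)
print(result)
```

The partial sums and counts are what make the computation easy to split up: each block could just as well be handled by a different core and the partial results combined at the end.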

https://github.com/cloudera/ibis

When open source fights, closed source wins. When the Jedi fight, the Sith Lords will win.

So will R people rise to the Big Data challenge, or will they bury their heads in the sand like an ostrich or a kiwi? Will Python people learn from R design philosophies and try to incorporate more of them without reinventing the wheel?

Converting code from one language to another automatically?

How I wish there was some kind of automated conversion tool that would convert a CRAN R package into a standard Python package that is pip installable.

Machine learning for more machine learning anyone?

Install Package in Python from Github

You can use

pip install git+https://github.com/yhat/ggplot.git

or

pip install --upgrade https://github.com/yhat/ggplot/tarball/master
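The same install can be driven from a script or notebook. The sketch below only builds the command. The helper name github_install_command and its optional ref argument are assumptions made for this example; the URL form is pip's standard git+https syntax.

```python
import sys


def github_install_command(owner, repo, ref=None):
    """Return the argument list that installs a GitHub repository with pip."""
    # github_install_command is a hypothetical helper, not part of pip.
    url = "git+https://github.com/{}/{}.git".format(owner, repo)
    if ref:
        # An optional branch, tag or commit is appended after "@".
        url += "@" + ref
    # Using sys.executable targets the pip of the interpreter that is running.
    return [sys.executable, "-m", "pip", "install", url]


command = github_install_command("yhat", "ggplot")
print(" ".join(command))
# To actually run the install: subprocess.check_call(command)
```

Going through sys.executable -m pip rather than a bare pip keeps the package in the same environment as the running kernel, which matters when several conda environments are installed.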

Top 15 functions for Analytics in Python #python #rstats #analytics

Here is a list of the top fifteen functions for analysis in Python:

- import (imports a particular package or library in Python)

- getcwd (from the os library): gets the current working directory

- chdir (from os): changes the directory

- listdir (from os): lists the files in the specified directory

- read_csv (from pandas): reads in a CSV file

- objectname.info() (like proc contents in SAS or str in R; it describes the object called objectname)

- objectname.columns (like proc contents in SAS or names in R; it lists the variable names of the object called objectname)

- objectname.head() (like head in R; it prints the first few rows of the object called objectname)

- objectname.tail() (like tail in R; it prints the last few rows of the object called objectname)

- len (length; for a DataFrame, the number of rows)

- objectname.loc[rows] (if rows is a list of index labels, this returns those rows of the object called objectname; the older objectname.ix[rows] was removed in pandas 1.0)

- groupby: groups by a categorical variable

- crosstab: cross-tabulation between two categorical variables

- describe: exploratory summary statistics for numerical variables

- corr: correlation between numerical variables

import pandas as pd #importing packages

import os

os.getcwd() #current working directory

os.chdir('/home/ajay/Downloads') #changes the working directory

os.getcwd()

a=os.getcwd()

os.listdir(a) #lists all the files in a directory

diamonds=pd.read_csv("diamonds.csv")

#note header =0 means we take the first row as a header (default) else we can specify header=None

diamonds.info()

diamonds.head()

diamonds.tail(10)

diamonds.columns

b=len(diamonds) #this is the total population size

print(b)

import numpy as np

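rows = np.random.choice(diamonds.index.values, size=max(1, int(0.0001*b)), replace=False) #the sample size must be an integer

print(rows)

sampled_df = diamonds.loc[rows] #.ix was removed in pandas 1.0; use .loc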

sampled_df

diamonds.describe()

cut=diamonds.groupby("cut")

cut.count()

cut.mean(numeric_only=True) #skip text columns such as color and clarity

cut.median(numeric_only=True)

pd.crosstab(diamonds.cut, diamonds.color)

diamonds.select_dtypes("number").corr() #correlation is only defined for the numeric columns
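The walkthrough above needs a local diamonds.csv. To try the same calls without that file, here is a small self-contained sketch. The six rows are invented stand-in values, not the real diamonds dataset, and DataFrame.sample is used for sampling as a simpler alternative to drawing index values by hand.

```python
import io

import pandas as pd

# Made-up miniature stand-in for diamonds.csv (values invented for illustration).
CSV_TEXT = """carat,cut,color,price
0.23,Ideal,E,326
0.21,Premium,E,326
0.29,Premium,I,334
0.31,Good,J,335
0.24,Ideal,J,336
0.30,Good,E,339
"""

diamonds = pd.read_csv(io.StringIO(CSV_TEXT))

n_rows = len(diamonds)                                 # total population size
sample = diamonds.sample(n=3, random_state=0)          # reproducible random sample of rows
mean_price_by_cut = diamonds.groupby("cut")["price"].mean()
cut_by_color = pd.crosstab(diamonds["cut"], diamonds["color"])
numeric_corr = diamonds[["carat", "price"]].corr()     # numeric columns only

print(mean_price_by_cut)
print(cut_by_color)
```

Selecting the numeric columns explicitly before corr(), and a single column before mean(), sidesteps errors that newer pandas versions raise when text columns are included in numeric aggregations.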

Polyglots for Data Science #python #sas #r #stats #spss #matlab #julia #octave

In the future I think analysts need to be polyglots- you will need to know more than one language for crunching data.

SAS, Python, R, Julia,SPSS,Matlab- Pick Any Two 😉 or Any Three.

No, you can’t count C or Java as a statistical language 🙂 🙂

Efforts to promote polyglots in statistical software include:

1) R for SAS and SPSS Users (free or book)

- JMP and R reference http://www.jmp.com/support/help/Working_with_R.shtml

2) R for Stata Users (book)

4) Using Python and R together

- Accessing R from Python (Rpy2) http://www.bytemining.com/wp-content/uploads/2010/10/rpy2.pdf

- Big Data with R and Python (though these have been made separately)

- Python for Data Analysis, a book by Wes McKinney.

We probably need a "Python and R for Data Analysis" book, just like the books we have for SAS and R.

- The RPy2 documentation is handy http://rpy.sourceforge.net/rpy2/doc-2.1/html/introduction.html

- A nice tutorial is also here – also the inspiration to writing this post http://files.meetup.com/1225993/Laurent%20Gautier_R_toPython_bridge_to_R.pdf#!

5) Matlab and R

Reference (http://mathesaurus.sourceforge.net/matlab-python-xref.pdf ) includes Python

5) Octave and R

package http://cran.r-project.org/web/packages/RcppOctave/vignettes/RcppOctave.pdf includes Matlab

reference http://cran.r-project.org/doc/contrib/R-and-octave.txt

6) Julia and Python

- Julia and IPython https://github.com/JuliaLang/IJulia.jl

- PyPlot uses the Julia PyCall package to call Python’s matplotlib directly from Julia

7) SPSS and Python is here

8) SPSS and R is as below

- The Essentials for R for Statistics versions 22, 21, 20, and 19 are available here.

- This link will take you to the SourceForge site where the Version 18 Essentials and Plugins are hosted.

9) Using R from Clojure – Incanter

Use embedded R from Clojure and Incanter http://github.com/jolby/rincanter