This is an interview with Heiko Miertzsch, founder of eoda (http://www.eoda.de/en/). eoda is a cutting-edge startup. Recently they launched a few innovative products that made me sit up and pay attention. In this interview, Heiko Miertzsch, the founder of eoda, talks about the startup journey and the vision of analytics.

DecisionStats (DS)- Describe the journey of your startup eoda. What made you choose R as the platform for your software and training? Name a few turning points and milestones in your corporate journey.

Heiko Miertzsch (HM)- eoda was founded in 2010 by Oliver and me. We both have a strong background in analytics and the information technology industry, so we observed the market for a while before starting the business. We saw two trends: first, a lot of new technologies and tools for data analysis appeared, and second, open source seemed to become more and more important for several reasons. To name just one: the ease of sharing experience and code in a broad and professional community. Disruptive forces seemed to be changing the market, and we just didn’t want to back the wrong horse.

From the beginning we tested R and we were enthusiastic. We started choosing R for our projects, software development and services, and built up a training program for R. We already believed in 2010 that R had a successful future. It was more flexible than other statistical languages, more powerful in terms of functionality, it could be integrated into an existing environment, and much more.

DS- You make both software products and services. What are the challenges in marketing both?

HM- We do even more: we provide consulting, training, individual software, software customization and services. It is pure fun for us to go to our customers and say “hey, we can help you solve your analytical problems, no matter what kind of service you want to buy, what kind of infrastructure you use, whether you want to learn about forest trees or buy a SaaS solution to predict your customers’ revenues”. In a certain way we don’t see barriers between these delivery models because we use analytics as our basis. First of all, we focus on the analytical problem of our customers and then we find the ideal solution together with the customer.

DS- Describe your software tableR. How does it work, what is the pricing and what is the benefit to the user? Name a few real-life usage examples, if available.

HM- Today the process of collecting data, analyzing it and presenting the results is characterized by a heterogeneous software environment with many tools, file formats and manual processing steps. tableR supports the entire process in one single solution: designing a questionnaire, sharing a structured format with the CAXI software, importing the data, doing the analysis and plotting the table report. The base report comes with just one click, and if you want to go into more detail you can enhance your analysis with your own R code.

tableR is in a closed beta at the moment, and the open beta will start in the coming weeks.

(It is available at http://www.eoda.de/en/tableR.html)

DS- Describe your software translateR (http://www.eoda.de/en/translateR.html). How does it work, what is the pricing and what is the benefit to the user? Name a few real-life usage examples, if available.

HM- Many companies have realized the advantages of the open source programming language R. translateR allows a fast and inexpensive migration to R – currently from SPSS code.

The manual migration of complex SPSS® scripts has always been tedious and error-prone. translateR will help here, and the task of translating by hand will become a thing of the past. The beta test of translateR will also start in the coming weeks.

DS- How do you think we can use R on the cloud for better analytics?

HM- Well, R seems to bring together the best “Data Scientists” of the world with all their different focuses on different methods, vertical knowledge, technical experience and more. The cloud is a great workplace: it holds the data – a lot of data – and it offers a technical platform with computing power. If we succeed in bringing these two aspects together, we could provide a lot of knowledge to solve a lot of problems – with individual and global impact.

DS- What advantages and disadvantages does working on the cloud give to an R user?

HM- In terms of R, I don’t see any aspects other than those of using the cloud in general.

DS- Startup life can be hectic – what do you do to relax?

HM- Oliver and I both have families, so eoda is our time to relax – just fun. I guess we do the same typical things as others: Oliver plays soccer and goes running. I like any kind of endurance sport and go climbing: the first to give myself a thought-free space, the second to train focusing on a concrete target.

About-

translateR is the new service from German-based R specialist eoda, which helps users translate SPSS® code to R automatically. translateR is developed in cooperation with the University of Kassel and financially supported by the LOEWE program of the state of Hessen. translateR will be available as a cloud service and as a desktop application.

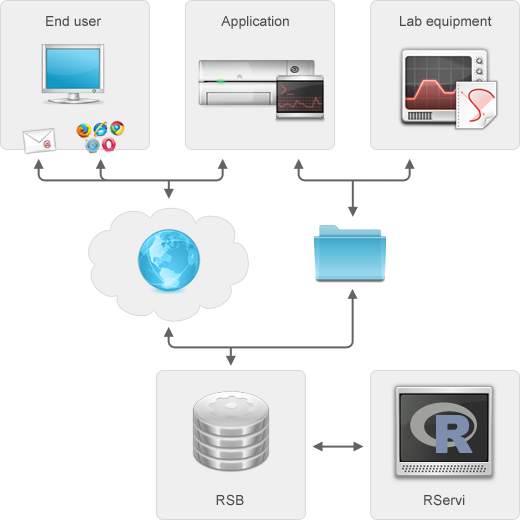

eoda offers consulting, software development and training for analytical and statistical questions. eoda is focused on R and specializes in integrating R into existing software environments.