To help unify collaborative work, data management, and business models across the enterprise in secure SSL cloud environments, Google Storage has been rolling out some changes (read below). This also gives you more options on the day Amazon goes, ahem, down (cough, cough) because they didn’t think someone in their data environment could be sympathetic to free data.

---

https://groups.google.com/group/gs-announce

And now to the actual update.

We’re making some changes to Google Storage for Developers to make team-based development easier. As part of this work, we are introducing the concept of a project. In preparation for this feature, we will be creating a project for every user and migrating that user’s buckets to it.

What does this mean for you?

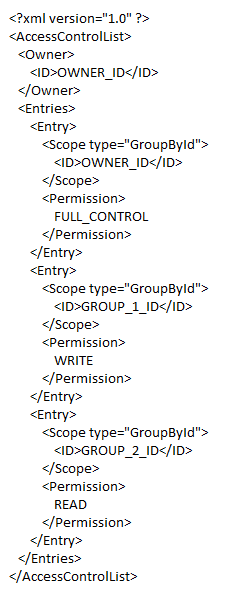

Everything will continue to work as it always has. However, you will notice that if you perform a get-acl operation on any of your buckets, you will see extra ACL entries. These entries correspond to project groups. Each group has only one member – the person who owned the buckets before the bucket migration; no additional rights have been granted to any of your buckets or objects. You should preserve these new ACL grants if you modify bucket ACLs.
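As a rough illustration of preserving the new grants when you modify a bucket ACL, the sketch below adds one entry to an ACL document without touching the entries already there. It is not taken from the announcement: the XML element names, the `GroupById` scope used for the project group, and every ID and email address are assumptions or placeholders, and `add_grant` and `scope_types` are hypothetical helpers.

```python
import xml.etree.ElementTree as ET

# Hypothetical ACL as a get-acl call might return it after the migration.
# All IDs are placeholders; the element names are an assumption.
CURRENT_ACL = """<AccessControlList>
  <Owner><ID>OWNER_ID_PLACEHOLDER</ID></Owner>
  <Entries>
    <Entry>
      <Scope type="UserById"><ID>OWNER_ID_PLACEHOLDER</ID></Scope>
      <Permission>FULL_CONTROL</Permission>
    </Entry>
    <Entry>
      <Scope type="GroupById"><ID>PROJECT_GROUP_ID_PLACEHOLDER</ID></Scope>
      <Permission>FULL_CONTROL</Permission>
    </Entry>
  </Entries>
</AccessControlList>"""


def add_grant(acl_xml, scope_type, scope_value, permission):
    """Return ACL XML with one extra grant; existing entries stay intact."""
    root = ET.fromstring(acl_xml)
    entries = root.find("Entries")
    entry = ET.SubElement(entries, "Entry")
    scope = ET.SubElement(entry, "Scope", {"type": scope_type})
    # Assumed convention: email scopes use EmailAddress, ID scopes use ID.
    child = "EmailAddress" if scope_type.endswith("ByEmail") else "ID"
    ET.SubElement(scope, child).text = scope_value
    ET.SubElement(entry, "Permission").text = permission
    return ET.tostring(root, encoding="unicode")


def scope_types(acl_xml):
    """List the scope type of every entry, in document order."""
    return [s.get("type") for s in ET.fromstring(acl_xml).iter("Scope")]


new_acl = add_grant(CURRENT_ACL, "UserByEmail", "teammate@example.com", "READ")
print(scope_types(new_acl))  # ['UserById', 'GroupById', 'UserByEmail']
```

The point of editing the fetched document rather than writing a fresh ACL from scratch is that the project-group entry is carried along automatically.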

An example entry for a modified ACL would look like this:

We’ll be rolling out these changes over the next few days.

http://blog.cloudberrylab.com/2011/04/cloudberry-explorer-for-google-storage.html

Detailed note on Google Storage:

https://code.google.com/apis/storage/

Google Storage for Developers is a RESTful service for storing and accessing your data on Google’s infrastructure. The service combines the performance and scalability of Google’s cloud with advanced security and sharing capabilities. Highlights include:

Fast, scalable, highly available object store

- All data replicated to multiple U.S. data centers

- Read-your-writes data consistency

- Objects of hundreds of gigabytes in size per request with range-get support

- Domain-scoped bucket namespace
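Range-get support means a large object can be fetched in pieces using the standard HTTP `Range` header. The following is a minimal sketch of how such requests could be prepared. The helper names are hypothetical, the URL is a placeholder rather than a real Google Storage endpoint, and no request is actually sent.

```python
import urllib.request


def range_header(start, end):
    """Build an HTTP Range header value for bytes start..end, inclusive."""
    if start < 0 or end < start:
        raise ValueError("need 0 <= start <= end")
    return f"bytes={start}-{end}"


def chunk_ranges(total_size, chunk_size):
    """Yield Range header values that together cover total_size bytes."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    for start in range(0, total_size, chunk_size):
        end = min(start + chunk_size, total_size) - 1
        yield range_header(start, end)


ranges = list(chunk_ranges(10, 4))
print(ranges)  # ['bytes=0-3', 'bytes=4-7', 'bytes=8-9']

# Placeholder URL; substitute the real object URL for your bucket.
url = "https://example.com/my-bucket/my-object"
requests = [urllib.request.Request(url, headers={"Range": r}) for r in ranges]
print(requests[0].get_header("Range"))  # bytes=0-3
```

Each prepared request could then be passed to `urllib.request.urlopen`, together with whatever authentication headers the service requires, and the returned chunks concatenated in order.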

Easy, flexible authentication and sharing

- Key-based authentication

- Authenticated downloads from a web browser

- Individual- and group-level access controls

In addition, Google Storage for Developers offers a web-based interface for managing your storage and GSUtil, an open source command line tool and library. The service is also compatible with many existing cloud storage tools and libraries. With pay-as-you-go pricing, it’s easy to get started and scale as your needs grow.

Google Storage for Developers is currently only available to a limited number of developers. Please sign up to join the waiting list.