On September 28th, 2010, The Document Foundation was announced. The last six months feel as if they have passed in the blink of an eye. Not only did we release three LibreOffice versions within three months, we also created the LibreOffice-Box DVD image and got LibreOffice Portable on its way. We have also announced the LibreOffice Conference for October 2011 and taken part in many events worldwide, FOSDEM and CeBIT being the most prominent.

People follow us on Twitter, Identi.ca, XING, LinkedIn, and through a Facebook group and fan page. They discuss on our mailing lists, which have more than 6,000 subscriptions, collaborate in our wiki, get insight into our daily work on our blog, and post and blog themselves. From the very first day, openness, transparency and meritocracy have shaped the framework we want to work in. Our discussions and decisions take place on a public mailing list, and we regularly hold phone conferences for the Steering Committee and the marketing teams, to which everyone is invited. Our ideas and visions have found their way into our Next Decade Manifesto.

We have joined the Open Invention Network as well as the OpenDoc Society, just last week became an SPI-associated project, and receive a wide range of support from all over the world. Not only do Novell and Red Hat support our efforts with developers; just recently, Canonical, the creators of Ubuntu, joined as well. All major Linux distributions ship LibreOffice with their operating systems, and more follow every day.

One of the most stunning contributions, which still leaves us speechless, is the support we receive from the community. When we asked for 50,000 € in capital stock for a German-based foundation, the community showed its support, appreciation and power: it not only donated that sum in just eight days, but has so far supported us with close to 100,000 €! Another is that, driven by our open, vendor-neutral approach combined with our Easy Hacks, we have included code contributions from over 150 entirely new developers, alongside localisations from over 50 localizers. The community has grown better than we could ever have dreamed, and the first meetings, such as the project's weekend or the QA meeting of the German-speaking group, are already being organized.

What we have seen so far is just the beginning of something very big. The Document Foundation has a vision, and the foundation in Germany is about to be established. LibreOffice was downloaded over 350,000 times within the first week, and we have just counted more than 1.3 million downloads from our download system alone (not counting packages delivered directly by Linux distributors, other download sites, or DVDs included with magazines and newspapers), served by 65 mirrors from all over the world. Millions of people already use it and contribute to it worldwide. Through our participation in the Google Summer of Code, we will engage more students and young developers in our community. Our improved release schedule will ensure that new features and improvements reach end users quickly, and for testers we even provide daily builds.

We are so excited by what has been achieved over the last six months, and immensely grateful to all those who have supported the project in whatever way they could. It is an honour to work with you and to be part of one united community! The future as we are shaping it has just begun, and it will be bright and excellent.

From:

List archive: http://listarchives.documentfoundation.org/www/announce/


2)

5) Pacman/Njam: a clone of the original classic game. Downloadable from

6)

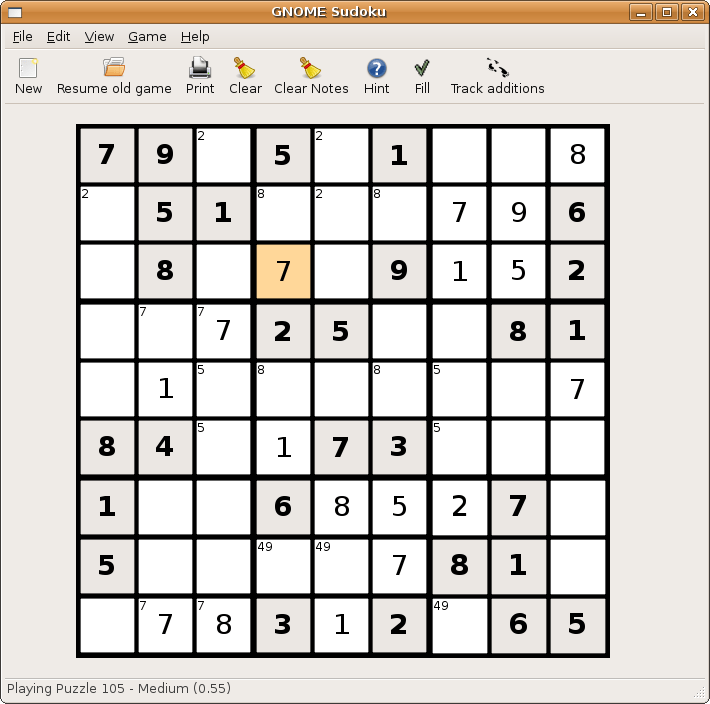

8) Card games: KPatience has almost 14 card games, including solitaire and FreeCell.

9) Sauerbraten: a first-person shooter with good network play and map-editing capabilities. You can read more here:

10) Tetris: KBlocks is the classic Tetris game. If you like classic, slow-paced games, Tetris is the best, and I like the toughest Tetris game of all, Bastet.

There is even an xkcd cartoon about it.