Here is an interview with David Katz, founder of David Katz Consulting (http://www.davidkatzconsulting.com/) and an analyst at the noted firm Dataspora (http://dataspora.com/). He is a featured speaker at Predictive Analytics World (http://www.predictiveanalyticsworld.com/sanfrancisco/2011/speakers.php#katz).

Ajay- Describe your background working with analytics. How can we make analytics and science more attractive career options for young students?

David- I had an interest in math from an early age, spurred by reading lots of science fiction with mathematicians and scientists in leading roles. I was fortunate to be at Harry and David (Fruit of the Month Club) when they were in the forefront of applying multivariate statistics to the challenge of targeting catalogs and other snail-mail offerings. Later I had the opportunity to expand these techniques to the retail sphere with Williams-Sonoma, who grew their retail business with the support of their catalog mailings. Since they had several catalog titles and product lines, cross-selling presented additional analytic challenges, and with the growth of the internet there was still another channel to consider, with its own dynamics.

After helping to found Abacus Direct Marketing, I became an independent consultant, which let me apply statistics and data mining in a wide range of settings, from health care to telecom to credit marketing and education.

Students should be exposed to the many roles that analytics plays in modern life, and to the excitement of finding meaningful and useful patterns in the vast profusion of data that is now available.

Ajay- Describe your most challenging project in 3 decades of experience in this field.

David- Hard to choose just one, but the educational field has been particularly interesting. Partnering with Olympic Behavior Labs, we’ve developed systems to identify the students most at risk of dropping out of school, so that interventions can be targeted to prevent dropout and promote success.

Ajay- What do you think are the top 5 trends in analytics for 2011?

David- Big Data, Privacy concerns, quick response to consumer needs, integration of testing and analysis into business processes, social networking data.

Ajay- Do you think techniques like RFM and LTV are adequately utilized by organizations? How can they be propagated further?

David- Organizations vary amazingly in how sophisticated or unsophisticated they are in analytics. A key factor in success as a consultant is to understand where each client is on this continuum and how well that serves their needs.

Ajay- What software have you worked with in this field? Name your favorite per category.

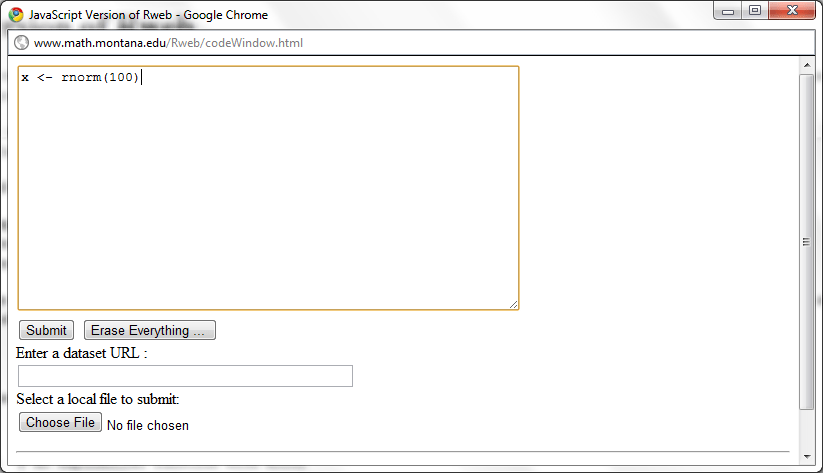

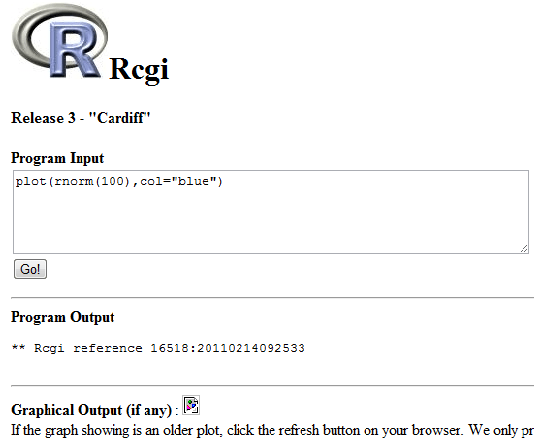

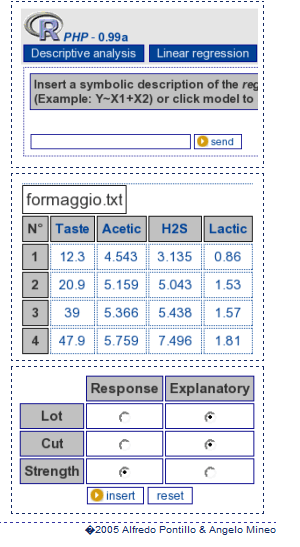

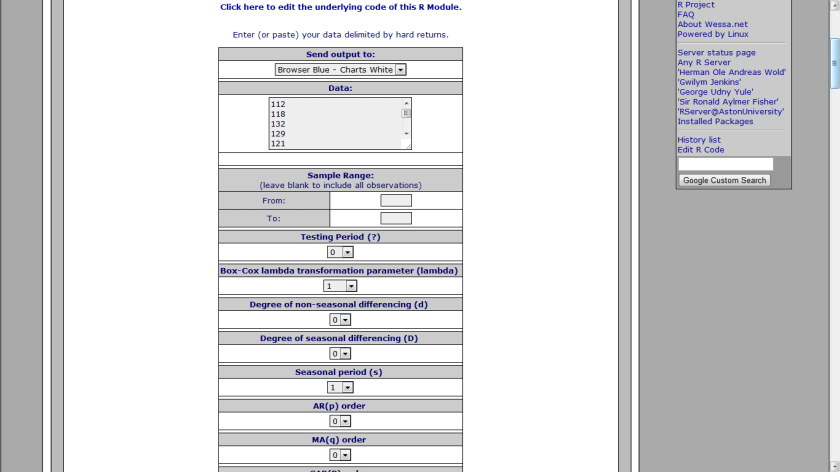

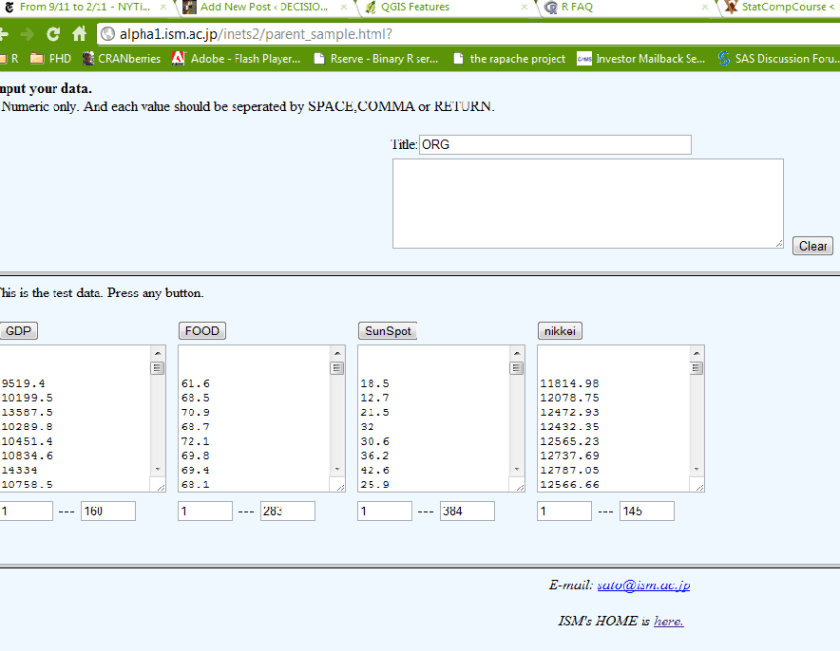

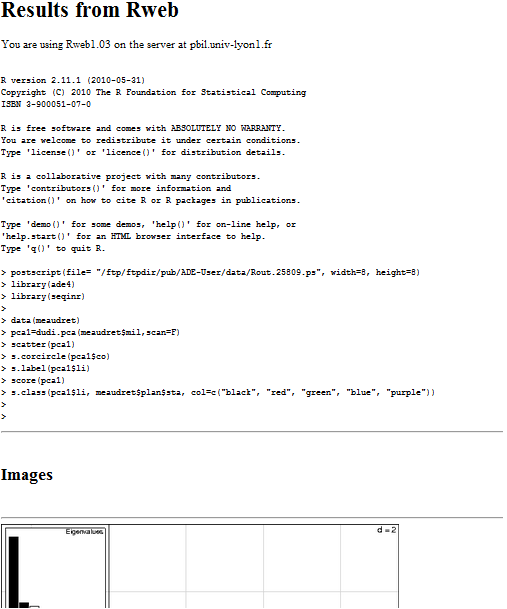

David- I started out using COBOL (that dates me!), then concentrated on SAS for many years. More recently R has become my favorite because of its coverage, currency, programming model and debugging capabilities.

Ajay- Independent consulting can be a strenuous job. What do you do to unwind?

David- Cycling, yoga, meditation, hiking and guitar.

Biography-

David Katz has been in the forefront of applying statistical models and database technology to marketing problems since 1980. He holds a Master’s Degree in Mathematics from the University of California, Berkeley. He is one of the founders of Abacus Direct Marketing and was previously the Director of Database Development for Williams-Sonoma.

He is the founder and President of David Katz Consulting, specializing in sophisticated statistical services for a variety of applications, with a special focus on the Direct Marketing Industry. David Katz has an extensive background that includes experience in all aspects of direct marketing from data mining, to strategy, to test design and implementation. In addition, he consults on a variety of data mining and statistical applications from public health to collections analysis. He has partnered with consulting firms such as Ernst and Young, Prediction Impact, and most recently on this project with Dataspora.

For more on David’s session at Predictive Analytics World, San Francisco, see http://www.predictiveanalyticsworld.com/sanfrancisco/2011/agenda.php#day2-16a

Room: Salon 5 & 6

4:45pm – 5:05pm

Track 2: Social Data and Telecom

Case Study: Major North American Telecom

Social Networking Data for Churn Analysis

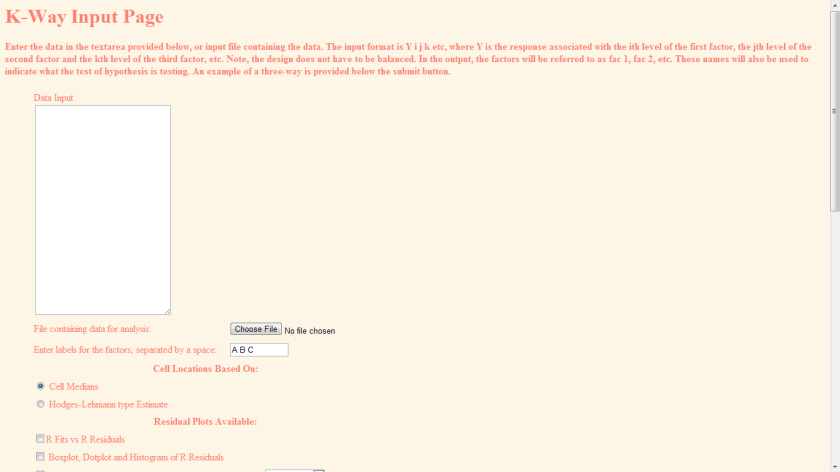

A North American Telecom found that it had a window into social contacts – who has been calling whom on its network. This data proved to be predictive of churn. Using SQL, and GAM in R, we explored how to use this data to improve the identification of likely churners. We will present many dimensions of the lessons learned on this engagement.
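As a rough illustration of the kind of feature that "who has been calling whom" data can yield, here is a minimal Python sketch. It is not the method used on the engagement, which the abstract says relied on SQL and GAM in R. The function name `churned_contact_share`, the `(caller, callee)` record layout and the toy data are all assumptions made for this example. It computes, for each subscriber, the share of their distinct contacts who have already churned, a figure that could then be fed to a churn model as one predictor.

```python
from collections import defaultdict


def churned_contact_share(calls, churned):
    """Return {subscriber: share of distinct contacts who have churned}.

    calls   -- iterable of (caller, callee) pairs (hypothetical record layout)
    churned -- set of subscriber ids already known to have churned
    """
    contacts = defaultdict(set)
    for caller, callee in calls:
        if caller == callee:
            continue  # ignore self-calls
        # Assumption: a call in either direction counts as a social tie.
        contacts[caller].add(callee)
        contacts[callee].add(caller)
    return {
        sub: sum(1 for peer in peers if peer in churned) / len(peers)
        for sub, peers in contacts.items()
    }


# Hypothetical toy data, for illustration only.
calls = [("a", "b"), ("a", "c"), ("b", "c"), ("d", "a")]
churned = {"c"}
shares = churned_contact_share(calls, churned)
```

Here subscriber `b` has two contacts (`a` and `c`), one of whom has churned, so `shares["b"]` is 0.5. Treating calls as undirected ties is a design choice for the sketch; call direction, frequency or duration could just as well be kept as separate features.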

Speaker: David Katz, Senior Analyst, Dataspora, and President, David Katz Consulting

Exhibit Hours

Monday, March 14th: 10:00am to 7:30pm

Tuesday, March 15th: 9:45am to 4:30pm
