I am still testing this out.

But if you know a bit more about make and compiling from source in Ubuntu, check out

http://www.gnu.org/software/dap/

I loved the humorous introduction:

Dap is a small statistics and graphics package based on C. Version 3.0 and later of Dap can read SBS programs (based on the utterly famous, industry standard statistics system with similar initials – you know the one I mean)! The user wishing to perform basic statistical analyses is now freed from learning and using C syntax for straightforward tasks, while retaining access to the C-style graphics and statistics features provided by the original implementation. Dap provides core methods of data management, analysis, and graphics that are commonly used in statistical consulting practice (univariate statistics, correlations and regression, ANOVA, categorical data analysis, logistic regression, and nonparametric analyses).

Anyone familiar with the basic syntax of C programs can learn to use the C-style features of Dap quickly and easily from the manual and the examples contained in it; advanced features of C are not necessary, although they are available. (The manual contains a brief introduction to the C syntax needed for Dap.) Because Dap processes files one line at a time, rather than reading entire files into memory, it can be, and has been, used on data sets that have very many lines and/or very many variables.

I wrote Dap to use in my statistical consulting practice because the aforementioned utterly famous, industry standard statistics system is (or at least was) not available on GNU/Linux and costs a bundle every year under a lease arrangement. And now you can run programs written for that system directly on Dap! I was generally happy with that system, except for the graphics, which are all but impossible to use, but there were a number of clumsy constructs left over from its ancient origins.

http://www.gnu.org/software/dap/#Sample output

Copyright © 2001, 2002, 2003, 2004 Free Software Foundation, Inc., 51 Franklin Street, Fifth Floor, Boston, MA 02110-1301, USA

Sounds too good to be true. GNU Dap joins WPS Workbench and Dulles Open’s Carolina as the third SAS language compiler (besides the now-defunct BASS software); see http://en.wikipedia.org/wiki/SAS_language#Controversy

Also see http://en.wikipedia.org/wiki/DAP_(software)

Dap was written to be a free replacement for SAS, but users are assumed to have a basic familiarity with the C programming language in order to permit greater flexibility. Unlike R it has been designed to be used on large data sets.

It has been designed to cope with very large data sets, even when the size of the data exceeds the size of the computer’s memory.
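
Both the GNU page and the Wikipedia entry stress that Dap works through a file one line at a time instead of loading it all into memory. To get a feel for why that helps with big files, here is a minimal sketch of the general idea in Python. It is not Dap code and makes no claim about how Dap is implemented internally. It assumes a plain text input with one number per line, and the function name `streaming_mean_var` is made up for this post. It uses Welford's online update to get a mean and sample variance in a single pass.

```python
import math
from typing import Iterable, Tuple


def streaming_mean_var(lines: Iterable[str]) -> Tuple[int, float, float]:
    """Return (count, mean, sample variance) in one pass over the lines.

    Hypothetical helper for illustration only (not part of Dap).
    Only three running numbers are kept, however many lines are read.
    Blank lines are skipped; each other line must hold one number.
    """
    n = 0
    mean = 0.0
    m2 = 0.0  # running sum of squared deviations from the current mean
    for line in lines:
        text = line.strip()
        if not text:
            continue
        x = float(text)
        n += 1
        delta = x - mean
        mean += delta / n          # update the running mean
        m2 += delta * (x - mean)   # Welford's update for the squared deviations
    # Sample variance needs at least two observations.
    variance = m2 / (n - 1) if n > 1 else math.nan
    return n, mean, variance
```

Because an open file object in Python yields one line at a time, passing it straight to the function (for example `streaming_mean_var(open("data.txt"))`, where `data.txt` is a placeholder file name) never holds more than the current line in memory.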

Related Articles

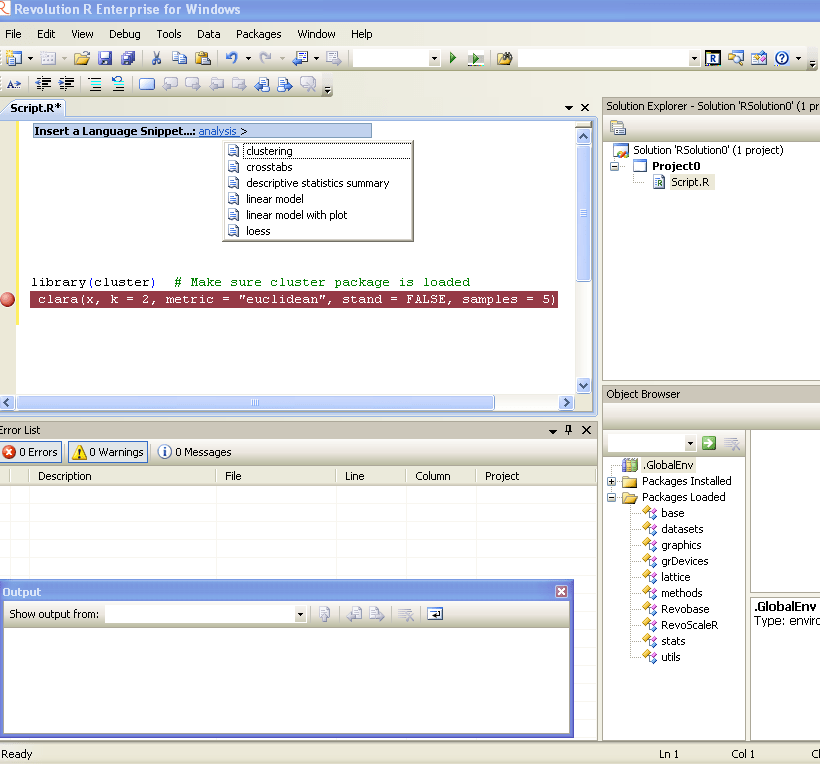

- R courses from Statistics.com (revolutionanalytics.com)

- Categorical Data Analysis for the Behavioral and Social Sciences (psypress.com)

- Skills of a good data miner (zyxo.wordpress.com)

- GNU Octave 3.4 has just been released (gnu.org)

- Revolution R Enterprise 4.2 now available (revolutionanalytics.com)