Here is a great piece of software for data visualization, and the public version is free.

You can use it for desktop analytics, and the BI/server versions are available at very low cost.

About Tableau Software:

http://www.tableausoftware.com/press_release/tableau-massive-growth-hiring-q3-2010

Tableau was named by Software Magazine as the fastest growing software company in the $10 million to $30 million range in the world, and the second fastest growing software company worldwide overall. The ranking stems from the publication’s 28th annual Software 500 ranking of the world’s largest software service providers.

“We’re growing fast because the market is starving for easy-to-use products that deliver rapid-fire business intelligence to everyone. Our customers want ways to unlock their databases and produce engaging reports and dashboards,” said Christian Chabot, CEO and co-founder of Tableau.

http://www.tableausoftware.com/about/who-we-are

History in the Making

Put together an Academy-Award winning professor from the nation’s most prestigious university, a savvy business leader with a passion for data, and a brilliant computer scientist. Add in one of the most challenging problems in software – making databases and spreadsheets understandable to ordinary people. You have just recreated the fundamental ingredients for Tableau.

The catalyst? A Department of Defense (DOD) project aimed at increasing people’s ability to analyze information and brought to famed Stanford professor, Pat Hanrahan. A founding member of Pixar and later its chief architect for RenderMan, Pat invented the technology that changed the world of animated film. If you know Buzz and Woody of “Toy Story”, you have Pat to thank.

Under Pat’s leadership, a team of Stanford Ph.D.s got together just down the hall from the Google folks. Pat and Chris Stolte, the brilliant computer scientist, realized that data visualization could produce large gains in people’s ability to understand information. Rather than analyzing data in text form and then creating visualizations of those findings, Pat and Chris invented a technology called VizQL™ by which visualization is part of the journey and not just the destination. Fast analytics and visualization for everyone was born.

While satisfying the DOD project, Pat and Chris met Christian Chabot, a former data analyst who turned into Jello when he saw what had been invented. The three formed a company and spun out of Stanford like so many before them (Yahoo, Google, VMWare, SUN). With Christian on board as CEO, Tableau rapidly hit one success after another: its first customer (now Tableau’s VP, Operations, Tom Walker), an OEM deal with Hyperion (now Oracle), funding from New Enterprise Associates, a PC Magazine award for “Product of the Year” just one year after launch, and now over 50,000 people in 50+ countries benefiting from the breakthrough.

Also see http://www.tableausoftware.com/about/leadership

http://www.tableausoftware.com/about/board

—————————————————————————-

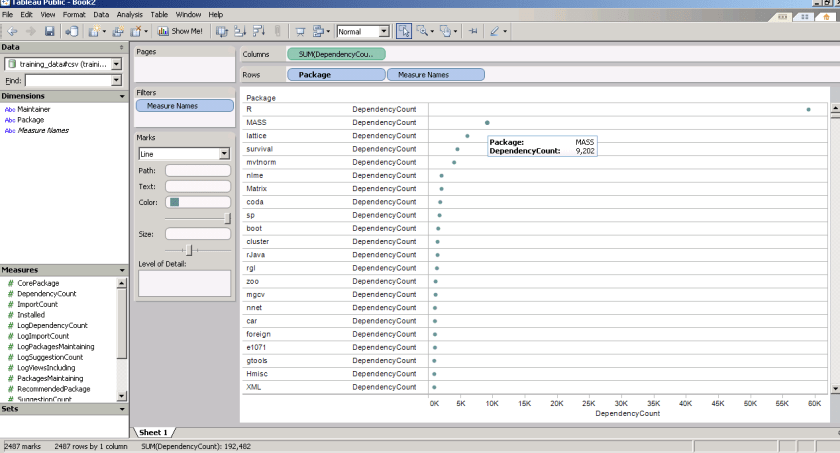

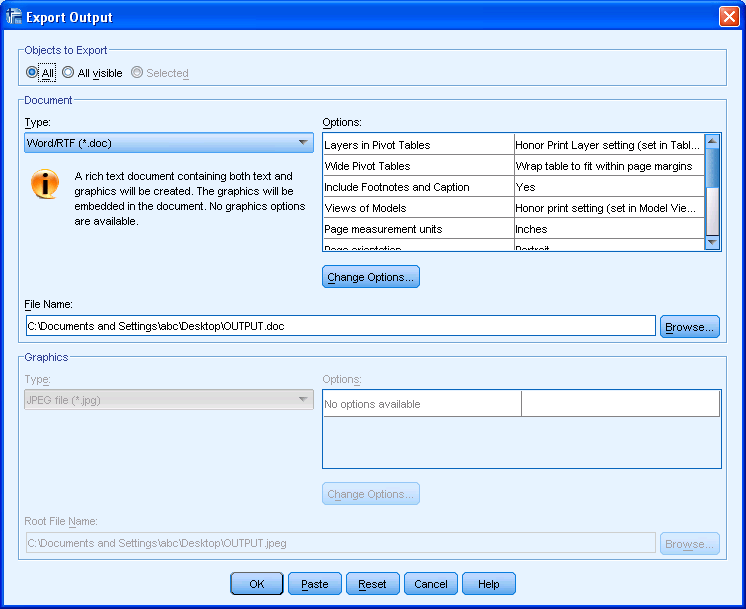

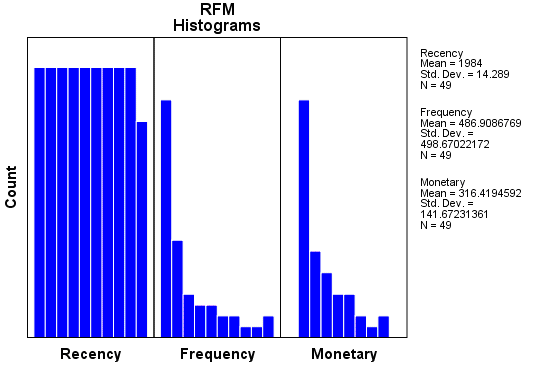

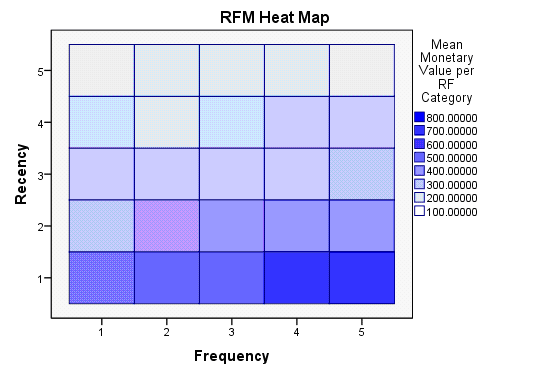

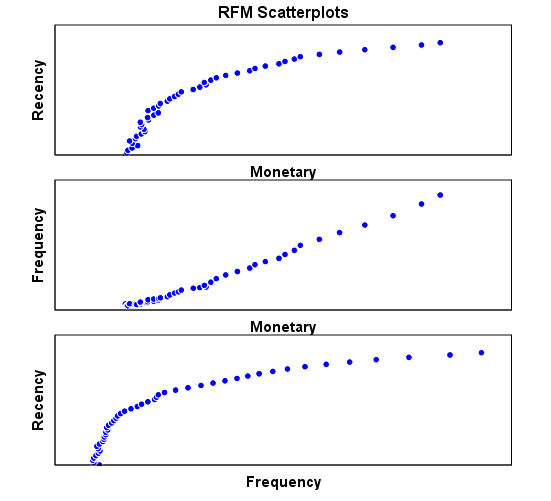

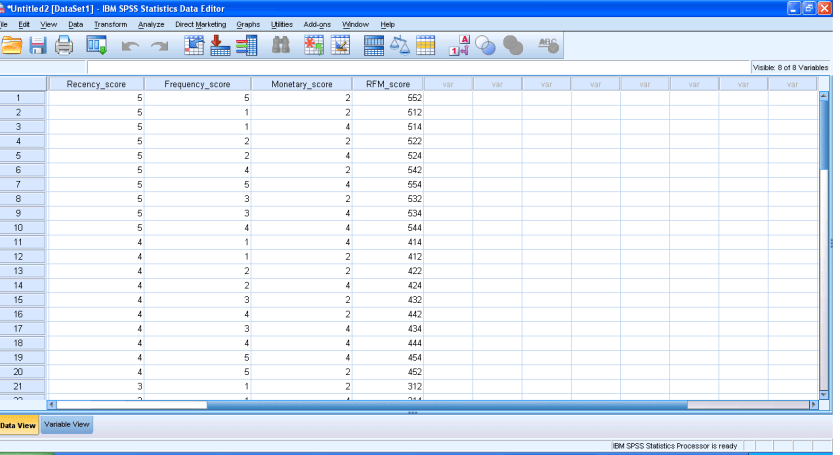

And now a demo I ran on the Kaggle contest data (a CSV dataset with 95,000 rows).

I found that Tableau works extremely well at pivoting data and visualizing it, almost like Excel on steroids. Download the free version here (I don't know about an academic program (see the links below), but the software is not expensive at all):

http://buy.tableausoftware.com/


* Price is per Named User and includes one year of maintenance (upgrades and support). Products are made available as a download immediately after purchase. You may revisit the download site at any time during your current maintenance period to access the latest releases.

Related Articles

- Online Sales Leader Journey Education Marketing, Inc. Announces New Student Version of Tableau Desktop Professional 5.0 Software (eon.businesswire.com)

- FlowingData is brought to you by… (flowingdata.com)

- Datamark Selects Tableau to Provide Breakthrough Visibility into Education Lead Performance (eon.businesswire.com)

- Tableau Reports Record Growth (xconomy.com)

- Mariner Partners with VIA Intell, LLC to Deliver Visual Intelligence Solutions on Tableau Platform (eon.businesswire.com)

- 4 Ways to Visualize Voter Sentiment for the Midterm Elections (mashable.com)

- September Housing Stats Around the Sound (seattlebubble.com)

- Human-centric analysis (flowingdata.com)

John Ball
