If you do a Google search for “data mining blog”, one blog has come out on top for the past several years (data mining blog, Google Search: http://bit.ly/kEdPlE).

To honor 5 years of Sandro Saitta’s blog (yes, that’s 5 years!), we present an exclusive interview with him in which he reveals his secret sauce for cool techie blogging.

Sandro- My first experience with data mining was my master’s project. I used a decision tree to predict pollen concentration for the following week using input data such as wind, temperature and rain. The fact that an algorithm can make a computer learn from experience was really amazing to me. I found it so interesting that I started a PhD in data mining. This time, the field of application was civil engineering. Civil engineers put a lot of sensors on their structures in order to understand how they behave. With all these sensors they generate a lot of data. To interpret these data, I used data mining techniques such as feature selection and clustering. I started my blog, Data Mining Research, during my PhD, to share with other researchers.

I then started applying data mining to the stock market in my first job in industry. I realized the difference between image recognition, where a 99% correct classification rate is state of the art, and the stock market, where you’re happy with 55%. However, the company ambiance was not as good as I had expected, so I moved to consulting. There, I applied data mining in behavioral targeting to increase click-through rates. When you compare the number of customers who click with the ones who don’t, then you really understand what class imbalance means. A few months ago, I accepted a very good opportunity at SICPA. I’m looking forward to tackling new challenges there.
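
To make the class imbalance point concrete, here is a minimal Python sketch. The numbers are invented for illustration (5 clicks in 1,000 impressions) and do not come from Sandro’s projects. It shows why plain accuracy is misleading on click data: a “model” that always predicts “no click” scores very high accuracy while finding none of the clickers.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def recall(y_true, y_pred, positive=1):
    """Fraction of the truly positive cases that were predicted positive."""
    preds_for_positives = [p for t, p in zip(y_true, y_pred) if t == positive]
    return sum(p == positive for p in preds_for_positives) / len(preds_for_positives)


# Hypothetical data: 1,000 ad impressions, only 5 of which were clicked.
clicks = [1] * 5 + [0] * 995

# A trivial "model" that always predicts "no click".
always_no_click = [0] * len(clicks)

baseline_accuracy = accuracy(clicks, always_no_click)
baseline_recall = recall(clicks, always_no_click)

print(f"accuracy: {baseline_accuracy:.3f}")  # accuracy: 0.995
print(f"recall:   {baseline_recall:.3f}")    # recall:   0.000
```

This is why, on imbalanced problems such as click prediction, measures like recall or precision on the rare class are usually more informative than overall accuracy.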

Ajay- Your blog is the top-ranked blog for “data mining blog”. Could you share some tips on better blogging for analytics and technical people?

Sandro- It’s always difficult to start a blog, since at the beginning you have no readers. Writing for nobody may seem stupid, but it is not. By writing my first posts during my PhD I was reorganizing my ideas. I was expressing concepts which were not always clear to me. I thus learned a lot and also improved my English. Of course, it’s still not perfect, but I hope most people can understand me.

Next come the readers. A few dozen each week at first. To increase this number, I then started to learn SEO (Search Engine Optimization) by reading books and blogs. I tested many techniques that increased Data Mining Research’s visibility in the blogosphere. I think SEO is interesting when you already have some content published (which means not at the very beginning of your blog). After a while, once your blog is nicely ranked, the main task is to work on the content of the blog. To be of interest, your content must stand out: it should be original, informative or provocative, for example. I also had the chance to get good visibility thanks to well-known people in the field like Kevin Hillstrom, Gregory Piatetsky-Shapiro, Will Dwinnell / Dean Abbott, Vincent Granville, Matthew Hurst and many others.

Ajay- What’s your favorite statistical software, and which software packages have you worked with? Could you compare and contrast them as well?

Sandro- My favorite software at this point is SAS. I worked with it for two years. Once you know the language, you can perform ETL and data mining so easily. It’s also very fast compared to others. There are a lot of tools for data mining, but I cannot think of a tool that is as powerful as SAS and, at the same time, has a high-level programming language behind it.

I also worked with R and Matlab. R is very nice since you have all the up-to-date data mining algorithms implemented. However, working in memory is not always a good choice, especially for ETL. Matlab is an excellent tool for prototyping. It’s not so fast and certainly not designed for ETL, but the price is low considering all the possibilities for data mining. In my opinion, SAS is the best choice for ETL and a good choice for data mining. Of course, there is the price.

Ajay- What are your favorite techniques and training resources for teaching the basics of data mining to, say, statisticians or business management graduates?

Sandro- I’m the kind of guy who likes to read books. I read data mining books one after the other. The fact that the same concepts are explained differently (and by different people) helps a lot in learning a topic like data mining. Of course, nothing replaces experience in the field. You can read hundreds of books, but you will still not be a good practitioner until you really apply data mining in specific fields. My second choice after books is blogs. By reading data mining blogs, you will really see the issues and challenges in the field. It’s still not experience, but it gets you closer. Finally, there are web resources and networks such as KDnuggets, of course, but also AnalyticBridge and LinkedIn.

Ajay- Describe your hobbies and how they help you, if at all, in your professional life.

Sandro- One of my hobbies is reading. I read a lot of books about data mining, SEO, Google as well as Sci-Fi and Fantasy. I’m a big fan of Asimov by the way. My other hobby is playing tennis. I think I simply use my hobbies as a way to find equilibrium in my life. I always try to find the best balance between work, family, friends and sport.

Ajay- What are your plans for your website for 2011-2012?

Sandro- I will continue to publish guest posts and interviews. I think it is important to let other people express themselves about data mining topics. I will not write about my current applications due to the policies of my current employer. But don’t worry, I still have a lot to write, whether it is technical or not. I will also put more emphasis on my experience with data mining, advice for data miners, tips and tricks, and of course book reviews!

Standard Disclosure of Blogging- Sandro awarded me the People’s Choice award for his blog for 2010 and carried out my interview. There is a lot of love between our respective WordPress blogs, but to reassure our puritan American readers, it is platonic and intellectual.

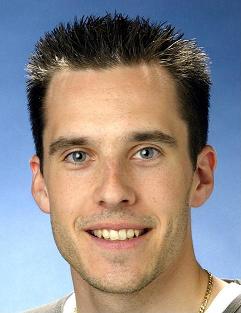

About Sandro S-

Sandro Saitta is a Data Mining Research Engineer at SICPA Security Solutions. He is also a blogger at Data Mining Research (www.dataminingblog.com). His interests include data mining, machine learning, search engine optimization and website marketing.

You can contact Mr. Saitta on Twitter at

https://twitter.com/#!/dataminingblog
