Here is an interview with noted analytics consultant and trainer Dean Abbott. Dean is scheduled to lead a workshop on predictive analytics at PAW (Predictive Analytics World Conference) on Oct 18, 2010 in Washington, D.C.

Ajay- Describe your upcoming hands-on workshop at Predictive Analytics World and how it can help people learn more about predictive modeling.

Refer- http://www.predictiveanalyticsworld.com/dc/2010/handson_predictive_analytics.php

Dean- The hands-on workshop is geared toward individuals who know something about predictive analytics but would like to experience the process. It will help people in two regards. First, by going through the data assessment, preparation, modeling and model assessment stages in one day, the attendees will see how predictive analytics works in reality, including some of the pain associated with false starts and mistakes. At the same time, they will experience success with building reasonable models to solve a problem in a single day. I have found that for many, having to actually build the predictive analytics solution is an eye-opener. Seeing demonstrations shows the capabilities of a tool, but the greater value for an end-user is developing intuition about what to do at each stage of the process, which makes the theory of predictive analytics real.

Second, they will gain experience using a top-tier predictive analytics software tool, Enterprise Miner (EM). This is especially helpful for those who are considering purchasing EM, but also for those who have used open source tools and have never experienced the additional power and efficiencies that come with a tool that is well thought out from a business solutions standpoint (as opposed to an algorithm workbench).

Ajay- You are an instructor for software ranging from SPSS, S-Plus, SAS Enterprise Miner and Statistica to CART. Which features of each do you like best, and which kinds of data problems is each best suited for?

Dean- I’ll add Tibco Spotfire Miner, Polyanalyst and Unica’s Predictive Insight to the list of tools I’ve taught “hands-on” courses around, and there are at least a half dozen more I demonstrate in lecture courses (JMP, Matlab, Wizwhy, R, Ggobi, RapidMiner, Orange, Weka, RandomForests and TreeNet to name a few). The development of software is a fascinating undertaking, and each tool has its own strengths and weaknesses.

I personally gravitate toward tools with a data flow / icon interface because I think more that way, and I’ve tired of learning more programming languages.

Since the predictive analytics algorithms are roughly the same (backprop is backprop no matter which tool you use), the key differentiators are:

(1) how data can be loaded in and how tightly integrated can the tool be with the database,

(2) how well big data can be handled,

(3) how extensive are the data manipulation options,

(4) how flexible are the model reporting options, and

(5) how can you get the models and/or predictions out.

There are vast differences in the tools on these matters, so when I recommend tools for customers, I usually interview them quite extensively to understand better how they use data and how the models will be integrated into their business practice.

A final consideration is related to the efficiency of using the tool: how much automation can one introduce so that user interaction is minimized once the analytics process has been defined. While I don’t like new programming languages, scripting and programming often help here, though some tools have a way to run the visual programming data diagram itself without converting it to code.

Ajay- What are your views on the increasing trend of consolidation, mergers and acquisitions in the predictive analytics space? Does this increase the need for vendor-neutral analysts and consultants, as well as conferences?

Dean- When companies buy a predictive analytics software package, it’s a mixed bag. SPSS’s purchase of Clementine was ultimately good for predictive analytics, though it took several years for SPSS to figure out what they wanted to do with it. Darwin ultimately disappeared after being purchased by Oracle, but the newer Oracle data mining tool, ODM, integrates better with the database than Darwin did or even would have been able to.

The biggest trend, and the biggest pressure on the commercial vendors, is the improvement in the Open Source and GNU tools. These are becoming more viable for enterprise-level customers with big data, though from what I’ve seen, they haven’t caught up with the big commercial players yet. There is great value in bringing both commercial and open source tools to the attention of end-users in the context of solutions (rather than sales) in a conference setting, which I think is an advantage that Predictive Analytics World has.

As a vendor-neutral consultant, flux is always a good thing for me because I have to be proficient in a variety of tools, and it is that breadth that brings value for customers entering the predictive analytics space. But it is very difficult to keep up with the rapidly changing market, and that is something I am weighing myself: how many tools should I keep in my active toolbox?

Ajay- Describe your career and how you came into the predictive analytics space. What are your views on the various MS Analytics programs offered by universities?

Dean- After getting a master’s degree in Applied Mathematics, my first job was at a small aerospace engineering company in Charlottesville, VA called Barron Associates, Inc. (BAI); it is still in existence and doing quite well! I was working on optimal guidance algorithms for some developmental missile systems, and statistical learning was a key part of the process, so I cut my teeth on pattern recognition techniques there, and frankly, that was the most interesting part of the job. In fact, most of us agreed that this was the most interesting part: John Elder (Elder Research) was the first employee at BAI, and was there at that time. Gerry Montgomery and Paul Hess were there as well; they left to form a data mining company called AbTech and are still in the analytics space.

After working at BAI, I had short stints at Martin Marietta Corp. and PAR Government Systems, where I worked on analytics solutions in DoD, primarily radar and sonar applications. It was while at Elder Research in the 90s that I began working more in the commercial space, in financial and risk modeling, and then in 1999 I began working as an independent consultant.

One thing I love about this field is that the same techniques can be applied broadly, and therefore I can work on CRM, web analytics, tax and financial risk, credit scoring, survey analysis, and many more applications, and cross-fertilize ideas from one domain into other domains.

Regarding MS degrees, let me first say that I am very encouraged that data mining and predictive analytics are being taught in specific classes and programs rather than as just an add-on to an advanced statistics or business class. That said, I have mixed feelings about analytics offerings at universities.

I find that most provide a good theoretical foundation in the algorithms, but are weak in describing the entire process in a business context. For those building predictive models, the model-building stage nearly always takes much less time than getting the data ready for modeling and reporting results. These are cross-discipline tasks, requiring some understanding of the database world and the business world for us to define the target variable(s) properly and clean up the data so that the predictive analytics algorithms work well.

The programs that have a practicum of some kind are the most useful, in my opinion. There are some certificate programs out there that have more of a business-oriented framework, and the NC State program builds an internship into the degree itself. These are positive steps in the field that I’m sure will continue as predictive analytics graduates become more in demand.

Biography-

DEAN ABBOTT is President of Abbott Analytics in San Diego, California. Mr. Abbott has over 21 years of experience applying advanced data mining, data preparation, and data visualization methods to real-world data-intensive problems, including fraud detection, response modeling, survey analysis, planned giving, predictive toxicology, signal processing, and missile guidance. In addition, he has developed and evaluated algorithms for use in commercial data mining and pattern recognition products, including polynomial networks, neural networks, radial basis functions, and clustering algorithms, and has consulted with data mining software companies to provide critiques and assessments of their current features and future enhancements.

Mr. Abbott is a seasoned instructor, having taught a wide range of data mining tutorials and seminars for a decade to audiences of up to 400, including DAMA, KDD, AAAI, and IEEE conferences. He is the instructor of well-regarded data mining courses, explaining concepts in language readily understood by a wide range of audiences, including analytics novices, data analysts, statisticians, and business professionals. Mr. Abbott also has taught both applied and hands-on data mining courses for major software vendors, including Clementine (SPSS, an IBM Company), Affinium Model (Unica Corporation), Statistica (StatSoft, Inc.), S-Plus and Insightful Miner (Insightful Corporation), Enterprise Miner (SAS), Tibco Spotfire Miner (Tibco), and CART (Salford Systems).

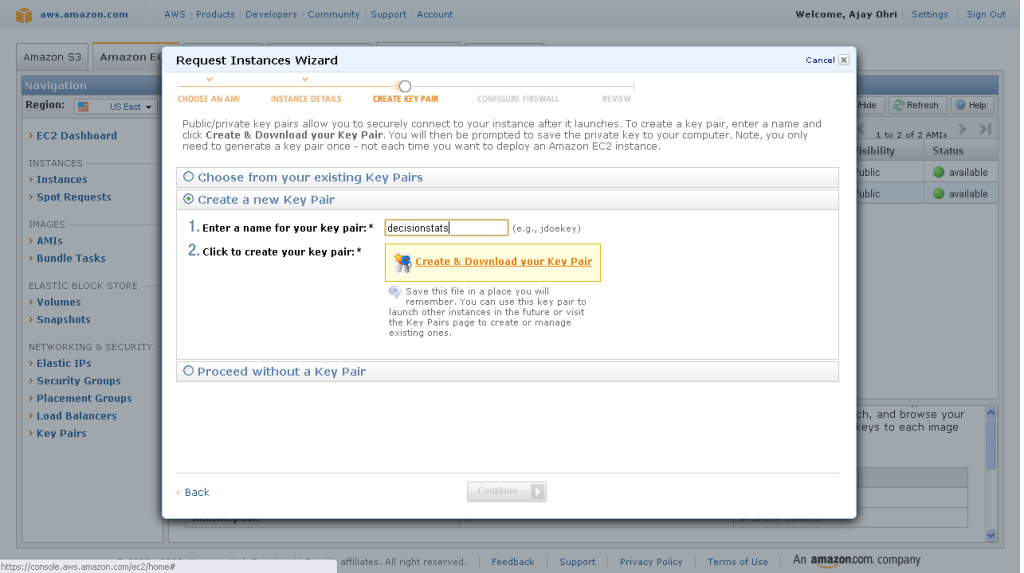

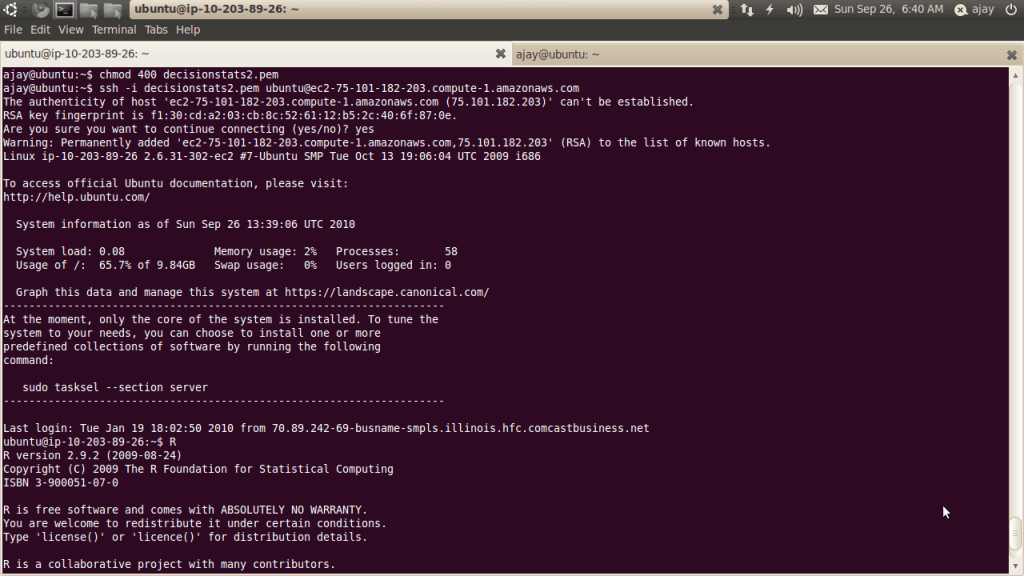

Open the key pair file (or .pem file created earlier) using

Enter the new generated password in Remote Desktop

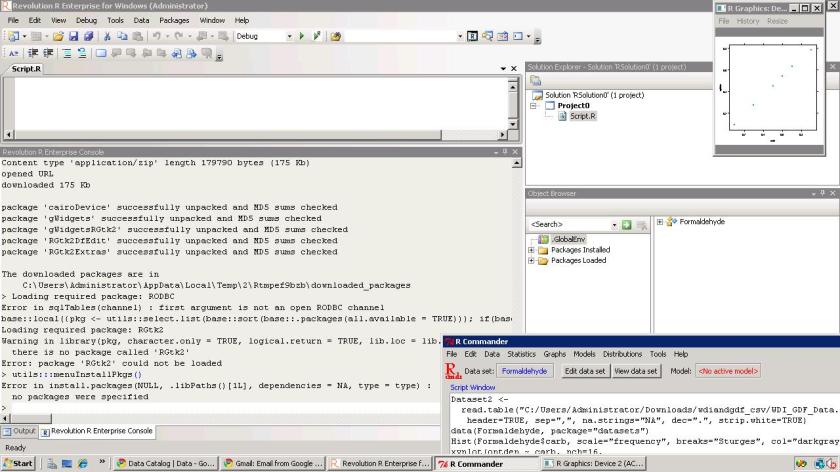

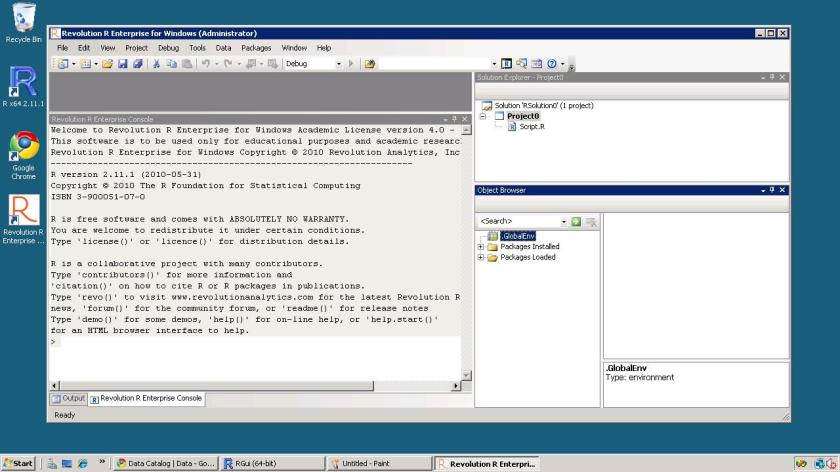

Install packages (I recommend installing R Commander, Rattle and Deducer). Apart from the fact that these GUIs are quite complementary, they will also install almost all the main packages that you need for analysis (as their dependencies). Revolution R installs parallel programming packages by default.
