The World Cup is in India, God is in Heaven, and everything is all right with this world.

Tag: India

The impact of currency fluctuations on outsourcing businesses globally

If you have an existing offshore team in a different country or currency zone, you may find that the significant cost savings from outsourcing have vanished because of currency fluctuations. These fluctuations happen for reasons such as earthquakes, wars or oil prices, all of which are outside the core competency of your business. Because offshoring vendors incur costs in local currency but earn revenue mostly in US dollars and euros, they pass rising costs on to their customers but rarely pass along discounts on existing contracts when the currency moves the other way. Sometimes the offshoring vendor actually gains from currency fluctuations.

The Indian rupee has moved from 43.62 rupees per USD (April 1, 2005) to 48.58 (December 31, 2008) to its current value of 44.65. This adds a volatility component of almost 10 percentage points to the revenue and profit margins of an offshoring vendor. Inflation in India has been running at 8.5%, and annual salary increases have been around 10-15% for the past few years. Offshoring vendors have historically been known to cut back on recruitment quality when costs rise, and the current attrition rate in Indian ITES (IT-enabled services) is almost 17%.
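The arithmetic behind these swings can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: a vendor whose contract revenue is fixed in US dollars while its costs are fixed in rupees. Only the three exchange rates come from the post; the $100 revenue and ₹3,500 cost per unit of work, and the helper names `pct_change` and `usd_margin`, are hypothetical and chosen only to make the effect visible.

```python
# Exchange rates quoted in the post, in Indian rupees per US dollar.
RATES = [
    ("April 1, 2005", 43.62),
    ("December 31, 2008", 48.58),
    ("current", 44.65),
]

# Hypothetical contract (illustration only): revenue fixed in USD, costs fixed in INR.
REVENUE_USD = 100.0
COST_INR = 3500.0


def pct_change(old: float, new: float) -> float:
    """Percentage change from old to new."""
    return (new - old) / old * 100.0


def usd_margin(revenue_usd: float, cost_inr: float, inr_per_usd: float) -> float:
    """Profit margin (%) for a vendor that bills in USD but pays its costs in INR."""
    cost_usd = cost_inr / inr_per_usd  # convert rupee costs into dollars at this rate
    return (revenue_usd - cost_usd) / revenue_usd * 100.0


if __name__ == "__main__":
    # Move in the INR-per-USD rate between consecutive dates.
    for (prev_label, prev_rate), (label, rate) in zip(RATES, RATES[1:]):
        print(f"{prev_label} -> {label}: INR per USD moved {pct_change(prev_rate, rate):+.1f}%")
    # Margin of the hypothetical contract at each rate.
    for label, rate in RATES:
        print(f"{label}: margin {usd_margin(REVENUE_USD, COST_INR, rate):.1f}%")
```

Under these assumptions the rupees-per-dollar rate rises about 11.4% and then falls about 8.1%, and the hypothetical margin moves from roughly 19.8% to 28.0% and back to 21.6%. A weaker rupee widens the vendor's margin on a fixed-dollar contract, which is the sense in which the vendor can gain from currency fluctuations.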


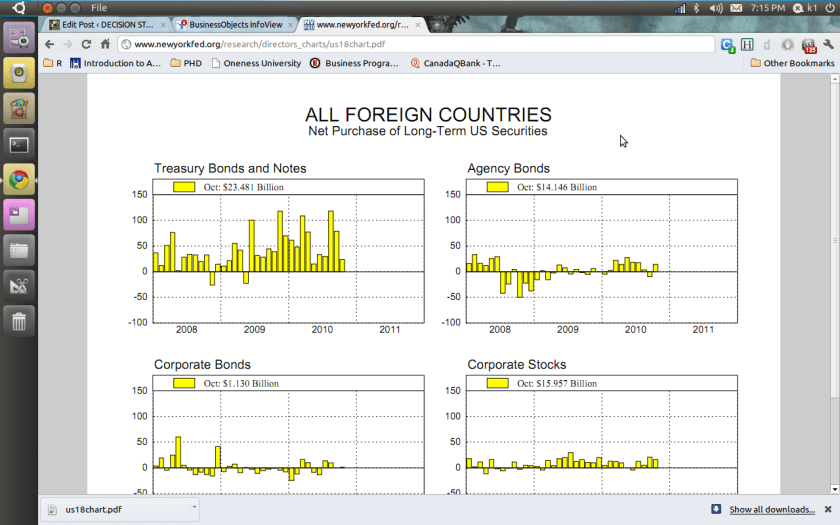

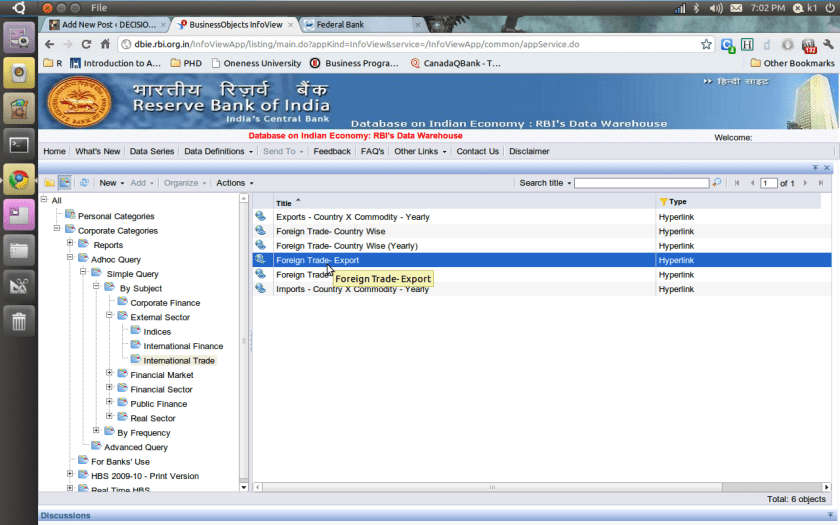

Data Visualization: Central Banks

I compared the transparency of two very different central banks through the data visualizations they publish.

One is the Reserve Bank of India and the other is the Federal Reserve Bank of New York.

Here are some points:

1) The New York Fed gives you a huge clutter of charts to choose from, and some of them are very difficult to understand.

See http://www.newyorkfed.org/research/global_economy/usecon_charts.html

and http://www.newyorkfed.org/research/directors_charts/us18chart.pdf

2) The Reserve Bank of India chose Business Objects and gives you proper drill-down graphs and tables. (That's a lot of heavy metal and iron ore China needs from India 😉 😉)

Chart: Foreign Trade – Export Time-line: ALL (Source: DGCI&S, Ministry of Commerce & Industry, GoI)

You can see screenshots of the visualization tools of the Federal Reserve Bank of New York and the Reserve Bank of India. If the US Fed is serious about cutting the debt, maybe it should start publishing better visuals.


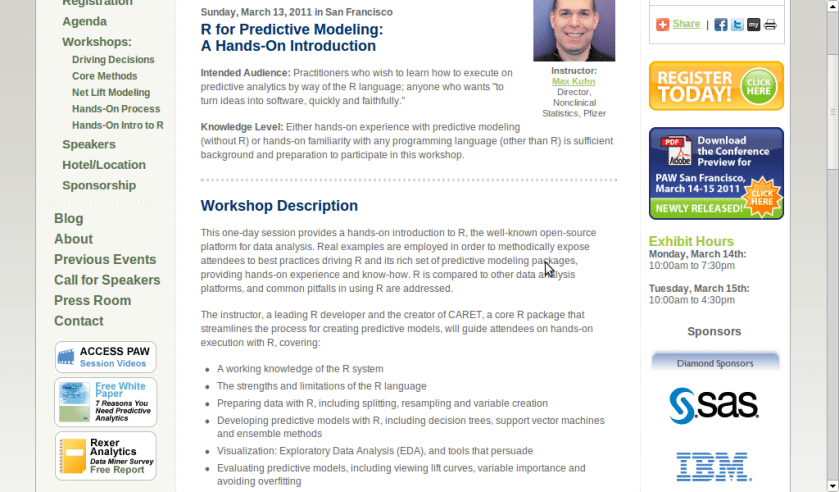

R for Predictive Modeling: Workshop

A workshop on using R for predictive modeling, taught by the Director of Nonclinical Statistics at Pfizer. An interesting Bay Area event, part of the next edition of Predictive Analytics World.

Sunday, March 13, 2011 in San Francisco

R for Predictive Modeling: A Hands-On Introduction

Intended Audience: Practitioners who wish to learn how to execute on predictive analytics by way of the R language; anyone who wants “to turn ideas into software, quickly and faithfully.”

Knowledge Level: Either hands-on experience with predictive modeling (without R) or hands-on familiarity with any programming language (other than R) is sufficient background and preparation to participate in this workshop.

Workshop Description

This one-day session provides a hands-on introduction to R, the well-known open-source platform for data analysis. Real examples are employed in order to methodically expose attendees to best practices driving R and its rich set of predictive modeling packages, providing hands-on experience and know-how. R is compared to other data analysis platforms, and common pitfalls in using R are addressed.

The instructor, a leading R developer and the creator of CARET, a core R package that streamlines the process for creating predictive models, will guide attendees on hands-on execution with R, covering:

- A working knowledge of the R system

- The strengths and limitations of the R language

- Preparing data with R, including splitting, resampling and variable creation

- Developing predictive models with R, including decision trees, support vector machines and ensemble methods

- Visualization: Exploratory Data Analysis (EDA), and tools that persuade

- Evaluating predictive models, including viewing lift curves, variable importance and avoiding overfitting
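To make the lift-curve item concrete, here is a minimal sketch of cumulative lift. It is written in Python rather than R and is not taken from the workshop materials or from CARET; it assumes binary 0/1 outcomes and a model score where higher means more likely to respond. The function name `cumulative_lift` and the toy data are hypothetical.

```python
from typing import List, Sequence


def cumulative_lift(scores: Sequence[float], labels: Sequence[int], n_bins: int = 10) -> List[float]:
    """Cumulative lift after each bin, ranking cases from highest to lowest score.

    Lift = response rate among the top-k ranked cases / overall response rate.
    """
    if not scores or len(scores) != len(labels):
        raise ValueError("scores and labels must be non-empty and of equal length")
    n = len(labels)
    base_rate = sum(labels) / n
    if base_rate == 0:
        raise ValueError("labels must contain at least one positive case")
    # Order the 0/1 outcomes by descending model score.
    ranked = [y for _, y in sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)]
    lifts = []
    for b in range(1, n_bins + 1):
        k = max(1, b * n // n_bins)  # top-ranked cases covered by the first b bins
        top_rate = sum(ranked[:k]) / k
        lifts.append(top_rate / base_rate)
    return lifts


if __name__ == "__main__":
    # Hypothetical toy data: 10 cases, 3 responders, mostly ranked near the top.
    scores = [0.9, 0.8, 0.7, 0.6, 0.5, 0.4, 0.3, 0.2, 0.1, 0.05]
    labels = [1, 1, 0, 1, 0, 0, 0, 0, 0, 0]
    print([round(x, 2) for x in cumulative_lift(scores, labels, n_bins=5)])
```

With this toy data the top fifth of cases responds about 3.3 times as often as the average case, and the curve falls to exactly 1.0 once every case is included; plotting these values against the fraction of cases targeted gives the lift curve.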

Hardware: Bring Your Own Laptop

Each workshop participant is required to bring their own laptop running Windows or OS X. The software used during this training program, R, is free and readily available for download.

Attendees receive an electronic copy of the course materials and related R code at the conclusion of the workshop.

Schedule

- Workshop starts at 9:00am

- Morning Coffee Break at 10:30am – 11:00am

- Lunch provided at 12:30pm – 1:15pm

- Afternoon Coffee Break at 2:30pm – 3:00pm

- End of the Workshop: 4:30pm

Instructor

Max Kuhn, Director, Nonclinical Statistics, Pfizer

Max Kuhn is a Director of Nonclinical Statistics at Pfizer Global R&D in Connecticut. He has been applying models in the pharmaceutical industry for over 15 years.

He is a leading R developer and the author of several R packages including the CARET package that provides a simple and consistent interface to over 100 predictive models available in R.

Mr. Kuhn has taught courses on modeling within Pfizer and externally, including a class for the India Ministry of Information Technology.

http://www.predictiveanalyticsworld.com/sanfrancisco/2011/r_for_predictive_modeling.php


Interview: Ajay Ohri, Decisionstats.com, with DMR

From: http://www.dataminingblog.com/data-mining-research-interview-ajay-ohri/

Here is the winner of the Data Mining Research People Award 2010: Ajay Ohri! Thanks to Ajay for giving some time to answer Data Mining Research questions. And all the best to his blog, Decision Stats!

Data Mining Research (DMR): Could you please introduce yourself to the readers of Data Mining Research?

Ajay Ohri (AO): I am a business consultant and writer based in Delhi, India. I have been working in and around the field of business analytics since 2004, and have worked with some very good and big companies, primarily in financial analytics and outsourced analytics. Since 2007, I have been writing my blog at http://decisionstats.com, which now has almost 10,000 views monthly.

All in all, I write about data, and my hobby is also writing (poetry). Both my hobby and my profession stem from my education (a master's in business and a bachelor's in mechanical engineering).

My research interests in data mining are interfaces (simpler interfaces to enable better data mining), education (making data mining less complex and accessible to more people and students), and time series and regression (specifically ARIMAX).

In business, my research interests are software marketing strategies (open source, software as a service, advertising-supported versus traditional licensing) and the creation of technology and entrepreneurial hubs (like Palo Alto and Research Triangle, or Bangalore, India).

DMR: I know you have worked with both SAS and R. Could you give your opinion about these two data mining tools?

AO: As per my understanding, SAS stands for the SAS language, SAS Institute and the SAS software platform. The terms are used interchangeably in industry and academia, but there have been some branding issues around this.

I have not worked much with SAS Enterprise Miner, probably because I could not afford it as a business consultant, and the organizations I worked with did not have a budget for Enterprise Miner.

I have worked alone and in teams with Base SAS, SAS Stat, SAS Access and SAS ETS, as well as JMP. I have also worked with SAS BI, but only as a user extracting information.

You could say my use of the SAS platform was mostly in predictive analytics and reporting, but I have a couple of projects under my belt in knowledge discovery, data mining and pattern analysis. Also, some of my SAS experience is a bit dated, going back almost a year.

I really like specific parts of the SAS platform, such as the interface design of JMP (which is better than Enterprise Guide or Base SAS) and Proc Sort in Base SAS. I guess sequential processing of data makes SAS much faster, though with computing evolving from desktops and servers to even cheaper time-shared cloud computers, I am not sure how long Base SAS and SAS Stat can hold on to this unique selling proposition.

I dislike the clutter in SAS Stat output; it confuses me with too much information. I also dislike the shoddy graphics rendered by the SAS graphics engine. It's shoddy coding work in SAS/Graph, and if JMP can give better graphics, why is legacy source code preventing the SAS platform from doing a better job of it?

I sometimes think the best part of SAS is actually the code written by Goodnight and Sall in the 1970s; the latest procs don’t impress me much.

SAS as a company is something I admire, especially for the way it treats employees globally, but it is strange to see the rest of the tech industry not following it. Also, I don’t like the over-aggression and the SAS-versus-the-rest-of-the-analytics/data-mining-world mentality that I sometimes pick up when I deal with industry thought leaders.

Making SAS Enterprise Miner, JMP and Base SAS available in a completely new web interface, priced at per-hour rates, is on my wishlist, but I guess I am a bit sentimental here: most data miners I know from the early 2000s started with SAS as their first bread-earning software. Also, I think SAS needs to be better priced in Business Intelligence; it seems quite cheap in BI compared to Cognos/IBM but expensive in analytical licensing.

If you are a new stats or business student, chances are you know much more R than SAS today. The shift in education, at least, has been very rapid, and I guess R is also more of a platform than an analytics or data mining software.

I like a lot of things in R, from graphics to better data mining packages and the modular design of the software, but above all I like the can-do, kick-ass spirit of the R community. Lots of young people collaborate with lots of young-to-old professors, and the energy is infectious. Everybody is a CEO in R’s world. The latest data mining algorithms will probably appear first in R, alongside their publication in journals.

Which is better for data mining, SAS or R? It depends on your data and your deadline. The golden rule of management and business is: it depends.

I have also worked a lot with KXEN, SQL and SPSS.

DMR: Can you tell us more about Decision Stats? You had traffic of 120,000 in 2010. How did you achieve such success?

AO: I don’t think 120,000 is a success. It’s not a failure either. It just happened: the more I wrote, the more people read. In 2007-2008 I used to obsess over traffic. I tried SEO, comments and backlinking, and I did some experimental black-hat stuff. Some of it worked; some didn’t.

In the end, I started asking questions and interviewing people. To my surprise, senior management is almost always more candid, frank and honest about their views, while middle managers and public relations and marketing folks can be defensive.

Social media helped a bit: Twitter, LinkedIn and Facebook really helped my network of friends, who I suppose acted as informal ambassadors to spread the word.

Again, I was constrained more by necessity than by choice: by my middle-class finances (I also had a baby son in 2007, and my current laptop still has some broken keys), by my inability to afford traveling to conferences, and by my location, since Delhi isn’t really a tech hub.

The more questions I asked around the internet, the more people responded, and I wrote it all down.

I guess I was just lucky to meet a lot of nice people on the internet who took time to mentor and educate me.

I tried building other websites but didn’t succeed, so I guess I really don’t know. I am not a smart coder and not very clever at writing, but I do try to be honest.

Basic economics says pricing is proportional to demand and inversely proportional to supply. Honest and candid opinions have infinite demand and an uncertain supply.

DMR: There is a rumor about an R book you plan to publish in 2011. Can you confirm the rumor and tell us more?

AO: I just signed a contract with Springer for “R for Business Analytics”. R is great software, and there are lots of books for statistically trained people, but I felt like writing a book for MBAs and existing analytics users on how to easily transition to R for analytics.

As in any language, there are tricks and tweaks in R, and with a focus on code editors, IDEs, GUIs and web interfaces, R’s famous learning curve can be bent a bit.

Making analytics beautiful and simpler to use has always been a passion of mine. With 3,000 packages, R can be used for a lot more things, and a lot more simply, than is commonly understood.

The target audience, however, is business analysts, or people working in corporate environments.

Brief Bio:

Ajay Ohri has been working in the field of analytics since 2004, when it was still a nascent, emerging industry in India. He has worked with the top two Indian outsourcers listed on the NYSE, and with Citigroup on cross-sell analytics, where he helped sell an extra 50,000 credit cards. He was one of the very first independent data mining consultants in India working on analytics products and domestic Indian market analytics. He regularly writes on analytics topics on his website www.decisionstats.com and is currently working on open source analytical tools like R, besides analytical software like SPSS and SAS.


Visiting Vaishno Devi

Just back from a pilgrimage (or a hike) of 25 kilometers: http://en.wikipedia.org/wiki/Vaishno_Devi

Vaishno Devi Mandir (Hindi: वैष्णोदेवी मन्दिर) is one of the holiest Hindu temples dedicated to Shakti, located in the hills of Vaishno Devi, Jammu and Kashmir, India. In Hinduism, Vaishno Devi, also known as Mata Rani and Vaishnavi, is a manifestation of the Mother Goddess.

The temple is near the town of Katra, in the Reasi district in the state of Jammu and Kashmir. It is one of the most revered places of worship in Northern India. The shrine is at an altitude of 5200 feet and a distance of approximately 14 kilometres (8.7 miles) from Katra.[1] Approximately 8 million pilgrims (yatris) visit the temple every year.[2]


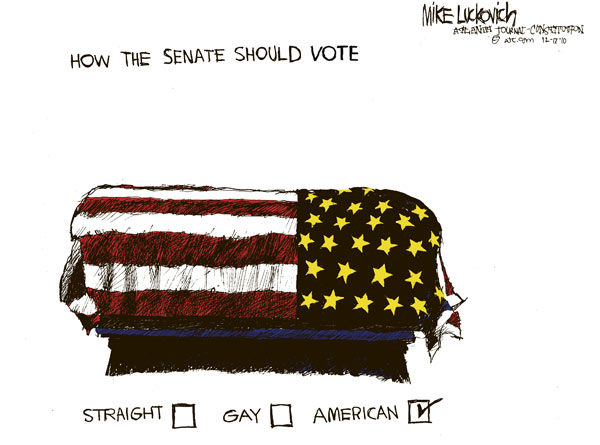

Top Cartoonists: Updated

Here is a list of cartoonists I follow- I sometimes think they make more sense than all the news media combined.

1) Mike Luckovich is a Pulitzer Prize-winning cartoonist for the AJC (Atlanta Journal-Constitution) at http://blogs.ajc.com/mike-luckovich/

I love his political satire, though sometimes not his politics: he is a liberal (surprisingly, most people in the creative arts tend to be liberal, I guess because they support and need welfare more 🙂). Since I am in India, I call myself a conservative (when filing taxes) or a liberal (when drinking, er, tea).

2) Hugh MacLeod of Gaping Void is very different from Mike, in the way an abstract painter differs from a classical artist. I like his satire of the internet, technology and, a personal favorite, social media consultants. Hugh casts a critical eye on the world of tech and is an immensely successful artist, probably the Andy Warhol of this genre in a generation.

3) Doug Savage of Savage Chickens http://www.savagechickens.com/ has a great series of funny cartoons about chickens drawn on Post-it notes. While his drawing is less abstract than Hugh’s, he sometimes strikes an irreverent note more like Hugh than anyone else.


4) Professor Jorge Cham of PHD Comics http://www.phdcomics.com/comics.php draws probably the most-read comic in grad school, and he is probably the only cartoonist with a PhD I know of.

5) Scott Adams of Dilbert http://www.dilbert.com/ is probably the first “non-kid-stuff” cartoonist I started reading. In fact, I once wrote to him asking for advice on my poetry, and to his credit he replied with a single “Best of Luck” email.

They named our email server after him at my business school in Lucknow, UP, India (http://iiml.ac.in). Probably the best of corporate toon humor. Maybe they should make the Dilbert movie yet.

6) Randall Munroe of xkcd.com

XKCD is geek cartooning at its best.

For catching up with the best toons of the week, the best source is Time.com’s weekly list at http://www.time.com/time/cartoonsoftheweek

It is the best collection of political cartoons.
