Friday Toons- As promised



Here is a set of very nice, screenshot-enabled tutorials on SAP BI. They are a bit outdated (3 years old), but most of the content is still quite relevant, especially from a tutorial design perspective.

Most people would rather see screenshot-based, step-by-step PowerPoints than cluttered or clever presentations, or videos that force you to sit like a TV zombie. Unfortunately, most tutorial presentations I see, especially for BI, are either slides with one or two points that abruptly shift to “concepts”, or videos that run more than 10 minutes. That works fine for scripting tutorials or hands-on workshops, but it cannot be reproduced for later study.

The medium for tutorials, especially for GUI software, can vary: SlideShare, Scribd, Google Presentation, Microsoft PowerPoint. But a step-by-step, screenshot-by-screenshot tutorial is much better for understanding than command-line jargon, YouTube video presentations, or PowerPoint with bullet points.

Have a look at these SAP BI 7 SlideShare presentations.


Speaking of BI, the R package brew is going to brew up something special, especially combined with rApache. However, I wish rApache, Rweb, or Rserve had step-by-step installation tutorials with screenshots to increase their usage in business intelligence.

I tried searching for JMP GUI tutorials too, but I believe putting all your content behind a registration wall is not so great. Do a Pareto analysis of your training material; surely you can share a couple more tutorials without registration. It would also help new users who want to migrate get a feel for the installation complexity as well as the final report GUI.
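A Pareto analysis in this sense just ranks items by usage and finds the smallest set that accounts for most of the total (conventionally 80%). Here is a minimal Python sketch, assuming you have per-tutorial view counts; the tutorial names and numbers are made up for illustration.

```python
def pareto_vital_few(counts, threshold=0.8):
    """Return the smallest set of items, taken in descending order of count,
    whose counts add up to at least `threshold` of the total."""
    total = sum(counts.values())
    if total == 0:
        return []
    ranked = sorted(counts.items(), key=lambda item: item[1], reverse=True)
    selected, running = [], 0
    for name, count in ranked:
        selected.append(name)
        running += count
        if running / total >= threshold:
            break
    return selected


# Hypothetical view counts per tutorial, for illustration only.
views = {"install": 500, "first_report": 300, "dashboards": 120,
         "scripting": 50, "admin": 30}
print(pareto_vital_few(views))  # ['install', 'first_report']
```

The items it returns are the natural candidates to publish outside the registration wall, since they cover most of what users actually look for.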

Here is an interview with James Dixon, the founder of Pentaho and its self-confessed Chief Geek and CTO. Pentaho has been growing very rapidly; it makes open source business intelligence solutions, and BI is basically the biggest chunk of the enterprise software market currently.


Ajay- How would you describe Pentaho as a BI product to someone who is completely used to traditional (read: non-open-source) BI vendors? Do the Oracle lawsuits over Java bother you from a business perspective?

James-

Pentaho has a full suite of BI software:

* ETL: Pentaho Data Integration

* Reporting: Pentaho Reporting for desktop and web-based reporting

* OLAP: Mondrian ROLAP engine, with Analyzer or JPivot as the web-based OLAP client

* Dashboards: CDF and Dashboard Designer

* Predictive Analytics: Weka

* Server: Pentaho BI Server, which handles web access, security, scheduling, sharing, report bursting, etc.

We have all of the standard BI functionality.

The Oracle/Java issue does not bother me much. There are a lot of software companies dependent on Java. If Oracle abandons Java, a lot of resources will suddenly focus on OpenJDK. That would be good for OpenJDK and might be the best thing for Java in the long term.

Ajay- What parts of Pentaho’s technology do you personally like best, as having an advantage over similar proprietary packages?

Describe the latest Pentaho for Hadoop offering and Hadoop/Hive’s advantage over, say, MapReduce and SQL.

James- The coolest thing is that everything is pluggable:

* ETL: New data transformation steps can be added. New orchestration controls (job entries) can be added. New perspectives can be added to the design UI. New data sources and destinations can be added.

* Reporting: New content types and report objects can be added. New data sources can be added.

* BI Server: Every factory, engine, and layer can be extended or swapped out via configuration. BI components can be added. New visualizations can be added.

This means it is very easy for Pentaho, partners, customers, and community members to extend our software to do new things.
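To make “pluggable” concrete: the host engine looks steps up by name in a registry, so new steps can be added without touching the engine. This is a generic Python sketch of the plugin-registry pattern, not Pentaho’s actual plugin API (PDI plugins are Java classes); `register_step` and the step names are hypothetical.

```python
from typing import Callable, Dict, Iterable, List

Row = Dict[str, object]
Step = Callable[[Iterable[Row]], Iterable[Row]]

# Hypothetical registry mapping a step name to its implementation.
STEP_REGISTRY: Dict[str, Step] = {}


def register_step(name: str):
    """Decorator that adds a transformation step to the registry."""
    def decorator(func: Step) -> Step:
        STEP_REGISTRY[name] = func
        return func
    return decorator


@register_step("filter_nonempty")
def filter_nonempty(rows):
    # Drop rows whose "value" field is missing or empty.
    return (r for r in rows if r.get("value") not in (None, ""))


@register_step("uppercase_name")
def uppercase_name(rows):
    # Yield copies of the rows with the "name" field upper-cased.
    return ({**r, "name": str(r["name"]).upper()} for r in rows)


def run_pipeline(step_names: List[str], rows: Iterable[Row]) -> List[Row]:
    """The engine only knows step names; it never imports step code directly."""
    for name in step_names:
        rows = STEP_REGISTRY[name](rows)
    return list(rows)


data = [{"name": "a", "value": 1}, {"name": "b", "value": ""}]
print(run_pipeline(["filter_nonempty", "uppercase_name"], data))
# [{'name': 'A', 'value': 1}]
```

A new step is just another decorated function; the engine and existing pipelines stay unchanged, which is the property described above.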

In addition, every engine and component can be fully embedded into a desktop or web-based application. I made a YouTube video about our philosophy: http://www.youtube.com/watch?v=uMyR-In5nKE

Our Hadoop offerings allow ETL developers to work in a familiar graphical design environment, instead of having to code MapReduce jobs in Java or Python.

90% of the Hadoop use cases we hear about are transformation/reporting/analysis of structured/semi-structured data, so an ETL tool is perfect for these situations.

Using Pentaho Data Integration reduces implementation and maintenance costs significantly. The fact that our ETL engine is Java and is embeddable means that we can deploy the engine to the Hadoop data nodes and transform the data within the nodes.
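For contrast, this is roughly what coding a MapReduce job by hand involves: a mapper that emits key/value pairs, a shuffle/sort by key, and a reducer that aggregates per key. The sketch simulates that flow locally in plain Python, in the style of a Hadoop Streaming job; it is neither Pentaho nor Hadoop code, and the tab-separated `region<TAB>amount` input format is an assumption.

```python
from itertools import groupby
from operator import itemgetter


def mapper(lines):
    """Emit (region, amount) pairs from tab-separated 'region<TAB>amount' lines."""
    for line in lines:
        line = line.strip()
        if not line:
            continue
        region, amount = line.split("\t")
        yield region, float(amount)


def reducer(pairs):
    """Sum amounts per region; input must be sorted by key, as Hadoop guarantees."""
    for region, group in groupby(pairs, key=itemgetter(0)):
        yield region, sum(amount for _, amount in group)


def run_local(lines):
    # Simulate Hadoop's shuffle/sort phase between map and reduce.
    shuffled = sorted(mapper(lines), key=itemgetter(0))
    return dict(reducer(shuffled))


sample = ["east\t10", "west\t5", "east\t2.5"]
print(run_local(sample))  # {'east': 12.5, 'west': 5.0}
```

In a graphical ETL tool, the same job would typically be an input step plus a group-by/aggregate step, configured rather than coded.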

Ajay- Do you think the combination of recession, outsourcing, cost cutting, and unemployment creates a suitable environment for companies to cut technology costs by going outside their usual vendor lists and trying open source, for a change or for test projects?

James- Absolutely. Pentaho grew (downloads, installations, revenue) throughout the recession. We are on target to do 250% of what we did last year, while the established vendors are flat in terms of new license revenue.

Ajay- How would you compare the user interface of reports in Pentaho with other reporting software? Please feel free to be as specific as you like.

James- We have all of the everyday, standard reporting features covered.

Over the years the old tools, like Crystal Reports, have become bloated and complicated.

We don’t aim to have 100% of their features, because we’d end up just as complicated.

The 80:20 rule applies here. 80% of the time people only use 20% of their features.

We aim for 80% feature parity, which should cover 95-99% of typical use cases.

Ajay- Could you describe the Pentaho integration with R, as well as your relationship with Weka? Jaspersoft already has a partnership with Revolution Analytics for RevoDeployR (R on a web server).

Any R plans for Pentaho as well?

James- The feature set of R and Weka overlap to a small extent – both of them include basic statistical functions. Weka is focused on predictive models and machine learning, whereas R is focused on a full suite of statistical models. The creator and main Weka developer is a Pentaho employee. We have integrated R into our ETL tool. (makes me happy 🙂 )

(Probably not a good time to ask whether SAS integration is done as well, for the big chunk of legacy Base SAS/WPS users.)

About-

As “Chief Geek” (CTO) at Pentaho, James Dixon is responsible for Pentaho’s architecture and technology roadmap. James has over 15 years of professional experience in software architecture, development and systems consulting. Prior to Pentaho, James held key technical roles at AppSource Corporation (acquired by Arbor Software which later merged into Hyperion Solutions) and Keyola (acquired by Lawson Software). Earlier in his career, James was a technology consultant working with large and small firms to deliver the benefits of innovative technology in real-world environments.

Name an industry in which top-level executives are mostly white males, new recruits are mostly male (white or Indian/Chinese), and women are primarily shunted into public relations, social media, or marketing.

Statistical computing and business intelligence are the white man’s last stand to preserve an exclusive club with a hail-fellow-well-met, let’s-catch-up-over-drinks culture. Newer startups are the exception in the business intelligence world, but a whiter face helps (as does an Indian or Chinese male one) to attract a mostly male, white venture capital industry.

I have talked earlier about technology being totally dominated by Asian males at the grad student level, and about ASA membership barely representing minorities like blacks and, yes, women. But this post is about corporate culture in the traditional BI world.

If you are connected to the BI or statistical computing world, who would you rather hire, AND who have you actually hired, given identical resumes?

White Male or White Female or Brown Indian Male/Female or Yellow Male/Female or Black Male or Black Female

How many Black grad assistants do you see in tech corridors? (Nah, it is easier to get a hard-working Chinese or Indian student who smiles and does a great job at $12/hour.)

How many non-Asian, non-white authors do you see in technology, and how does that compare to the pie chart below?

Note: 2010 Census numbers aren’t available for STEM, and I was unable to find the ethnic background of employees at various technology companies because, though these numbers are collected for legal purposes, they are not publicly shared.

Any technology company with more than 40% women, or more than 10% Black employees, would be fairly representative of the US population. Anecdotal evidence suggests European employment for minorities is worse (especially for Asians) but better for women.

Any data sources to support or refute these hypotheses are welcome, for purposes of scientific inquiry.

Here is a great piece of software for data visualization– the public version is free.

You can use it for desktop analytics, and the BI/server versions are available at very low cost.

About Tableau Software–

http://www.tableausoftware.com/press_release/tableau-massive-growth-hiring-q3-2010

Tableau was named by Software Magazine as the fastest growing software company in the $10 million to $30 million range in the world, and the second fastest growing software company worldwide overall. The ranking stems from the publication’s 28th annual Software 500 ranking of the world’s largest software service providers.

“We’re growing fast because the market is starving for easy-to-use products that deliver rapid-fire business intelligence to everyone. Our customers want ways to unlock their databases and produce engaging reports and dashboards,” said Christian Chabot, CEO and co-founder of Tableau.

http://www.tableausoftware.com/about/who-we-are

Put together an Academy-Award winning professor from the nation’s most prestigious university, a savvy business leader with a passion for data, and a brilliant computer scientist. Add in one of the most challenging problems in software – making databases and spreadsheets understandable to ordinary people. You have just recreated the fundamental ingredients for Tableau.

The catalyst? A Department of Defense (DOD) project aimed at increasing people’s ability to analyze information and brought to famed Stanford professor, Pat Hanrahan. A founding member of Pixar and later its chief architect for RenderMan, Pat invented the technology that changed the world of animated film. If you know Buzz and Woody of “Toy Story”, you have Pat to thank.

Under Pat’s leadership, a team of Stanford Ph.D.s got together just down the hall from the Google folks. Pat and Chris Stolte, the brilliant computer scientist, realized that data visualization could produce large gains in people’s ability to understand information. Rather than analyzing data in text form and then creating visualizations of those findings, Pat and Chris invented a technology called VizQL™ by which visualization is part of the journey and not just the destination. Fast analytics and visualization for everyone was born.

While satisfying the DOD project, Pat and Chris met Christian Chabot, a former data analyst who turned into Jello when he saw what had been invented. The three formed a company and spun out of Stanford like so many before them (Yahoo, Google, VMWare, SUN). With Christian on board as CEO, Tableau rapidly hit one success after another: its first customer (now Tableau’s VP, Operations, Tom Walker), an OEM deal with Hyperion (now Oracle), funding from New Enterprise Associates, a PC Magazine award for “Product of the Year” just one year after launch, and now over 50,000 people in 50+ countries benefiting from the breakthrough.

Also see http://www.tableausoftware.com/about/leadership

http://www.tableausoftware.com/about/board

—————————————————————————-

And now, a demo I ran on the Kaggle contest data (a CSV dataset with 95,000 rows).

I found that Tableau works extremely well at pivoting data and visualizing it, almost like Excel on steroids. Download the free version here (I don’t know about an academic program, but the software is not expensive at all; see the pricing below):

http://buy.tableausoftware.com/


* Price is per Named User and includes one year of maintenance (upgrades and support). Products are made available as a download immediately after purchase. You may revisit the download site at any time during your current maintenance period to access the latest releases.
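On the pivoting point: a pivot turns long rows into a summary table with one dimension on the rows, another on the columns, and an aggregate in the cells, which is what Tableau (and an Excel pivot table) does interactively. A minimal standard-library Python sketch on CSV text; the column names `region`, `year`, and `sales` are hypothetical and are not the Kaggle dataset’s actual schema.

```python
import csv
import io
from collections import defaultdict


def pivot_sum(csv_text, row_key, col_key, value_key):
    """Sum value_key for each (row_key, col_key) pair, like a spreadsheet pivot table."""
    table = defaultdict(lambda: defaultdict(float))
    for record in csv.DictReader(io.StringIO(csv_text)):
        table[record[row_key]][record[col_key]] += float(record[value_key])
    # Convert the nested defaultdicts to plain dicts for printing and comparison.
    return {row: dict(cols) for row, cols in table.items()}


# Hypothetical sample data.
data = """region,year,sales
east,2009,10
east,2010,15
west,2010,7
east,2010,5
"""
print(pivot_sum(data, "region", "year", "sales"))
# {'east': {'2009': 10.0, '2010': 20.0}, 'west': {'2010': 7.0}}
```

For a file on disk you would pass its contents; 95,000 rows is small enough for this in-memory approach.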

I am putting together a list of top 500 Blogs on –

Some additional points-

And after all that noise, you can see Kush’s blog: http://www.kushohri.com/

I am currently playing with and trying out rApache, one more excellent R product from Vanderbilt’s Department of Biostatistics and its prodigious coder Jeff Horner.

I really liked the virtual machine idea: you can download a virtual image of rApache and play with it. A .vmx is easy to create and great to share.

Basically, using rApache (with an EC2 instance on the back end) can help you create customized dashboards, BI apps, etc., all using R’s graphical and statistical capabilities.

What’s rApache?

As per

http://biostat.mc.vanderbilt.edu/wiki/Main/RapacheWebServicesReport

Rapache embeds the R interpreter inside the Apache 2 web server. By doing this, Rapache realizes the full potential of R and its facilities over the web. R programmers configure Apache by mapping Uniform Resource Locators (URLs) to either R scripts or R functions. The R code relies on CGI variables to read a client request and R’s input/output facilities to write the response.

One advantage of Rapache’s architecture is robust multi-process management by Apache. In contrast to Rserve and RSOAP, Rapache is a pre-fork server utilizing HTTP as the communications protocol. Another advantage is a clear separation, a loose coupling, of R code from client code. With Rserve and RSOAP, the client must send data and R commands to be executed on the server. With Rapache the only client requirement is the ability to communicate via HTTP. Additionally, Rapache gains significant authentication, authorization, and encryption mechanisms by virtue of being embedded in Apache.
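rApache itself is configured with Apache directives and R code, which I won’t guess at here. As an illustration of the same idea (a server maps URL paths to handler functions, and each handler reads the request from CGI-style variables), here is a Python WSGI sketch using only the standard library. It is an analogy, not rApache; the `/hello` path and the handler names are hypothetical.

```python
from urllib.parse import parse_qs


def hello(environ):
    """Hypothetical handler: reads its input from the CGI-style QUERY_STRING."""
    params = parse_qs(environ.get("QUERY_STRING", ""))
    name = params.get("name", ["world"])[0]
    return "200 OK", f"Hello, {name}!"


def not_found(environ):
    return "404 Not Found", "No handler for " + environ.get("PATH_INFO", "")


# URL path -> handler, analogous to mapping URLs to R scripts or R functions.
ROUTES = {"/hello": hello}


def app(environ, start_response):
    """Minimal WSGI application that dispatches on PATH_INFO."""
    handler = ROUTES.get(environ.get("PATH_INFO", ""), not_found)
    status, body = handler(environ)
    start_response(status, [("Content-Type", "text/plain; charset=utf-8")])
    return [body.encode("utf-8")]
```

To try it locally, `wsgiref.simple_server.make_server("localhost", 8000, app).serve_forever()` serves it, and http://localhost:8000/hello?name=R should answer “Hello, R!”. As with rApache, the client needs nothing but HTTP.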

Existing demos of an architecture based on rApache:

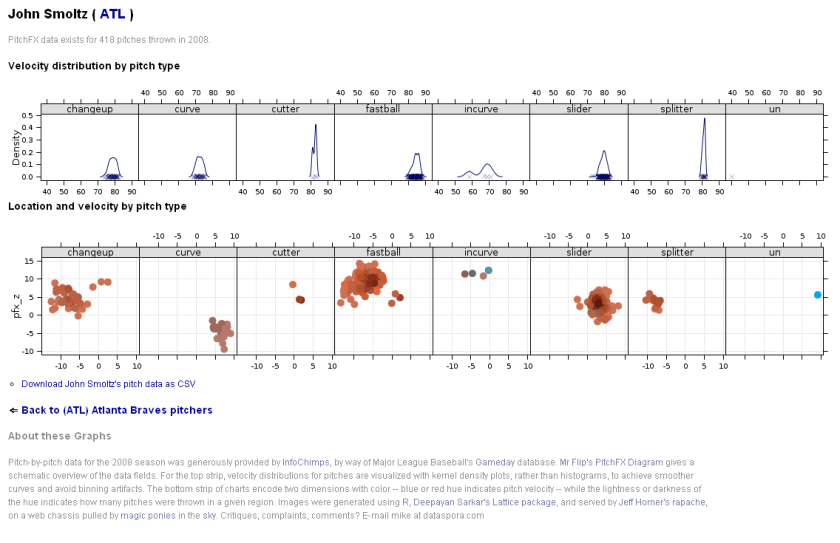

3. http://data.vanderbilt.edu/rapache/bbplot for baseball results: a demo of a query-based web dashboard system with a very good BI feel.

What’s coming next in rApache?

You can download version 1.1.10 of rApache now. There are only two significant changes, and you don’t have to edit your Apache config or change any code (just recompile rApache and reinstall):

1) Error reporting should be more informative, both when you accidentally introduce errors in the Apache config and when your code introduces warnings and errors from web requests.

I’ve struggled with this one for a while, not really knowing what strategy would be best. Basically, rApache hooks into the R I/O layer at such a low level that it’s hard to capture all warnings and errors as they occur and introduce them to the user in a sane manner. In prior releases, when ROutputErrors was in effect (either the Apache directive or the R function), one would typically see a bunch of grey boxes with a red outline and a title of RApache Warning/Error!!!. Unfortunately those grey boxes could contain empty lines, one line of error, or a few that relate to the lines in previously displayed boxes. Really a big uninformative mess.

The new approach is to print just one warning box with the title “Oops!!! <b>rApache</b> has something to tell you. View source and read the HTML comments at the end.” and then, as the title implies, you can read the HTML comment located at the end of the file… after the closing html tag. That way, you’re actually reading how R would present the warnings and errors to you as if you executed the code at the R command prompt. And if you don’t use ROutputErrors, the warning/error messages are printed in the Apache log file, just as they were before, but nicer 😉
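The pattern described in item 1 (one visible notice, with the full diagnostics appended as an HTML comment after the closing tag) is easy to borrow in other stacks. A Python sketch of the idea using the standard `warnings` module; `render_with_diagnostics`, `report_handler`, and the notice text are hypothetical, not rApache’s code.

```python
import warnings

NOTICE = ("<p>Oops!!! The server has something to tell you. "
          "View source and read the HTML comments at the end.</p>")


def render_with_diagnostics(handler):
    """Run handler() to get an HTML page; if it raised warnings, show one
    notice in the page and append the full messages as an HTML comment
    after the closing </html> tag."""
    with warnings.catch_warnings(record=True) as caught:
        warnings.simplefilter("always")  # record every warning, even repeats
        page = handler()
    if not caught:
        return page
    page = page.replace("</body>", NOTICE + "</body>", 1)
    text = "\n".join(f"Warning: {w.message}" for w in caught)
    # "--" may not appear inside an HTML comment, so break up any runs of it.
    while "--" in text:
        text = text.replace("--", "- -")
    return page + "\n<!--\n" + text + "\n-->\n"


def report_handler():
    # Hypothetical request handler that emits a warning while building the page.
    warnings.warn("NAs introduced by coercion")
    return "<html><body><h1>Report</h1></body></html>"


print(render_with_diagnostics(report_handler))
```

The browser shows a single notice, while “view source” reveals every message in order, much as R would have printed them at the prompt.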

2) Code dispatching has changed, so please let me know if I’ve introduced any strange behavior.

This was necessary to enhance error reporting. Prior to this release, rApache would use R’s C API exclusively to build up the call to your code that is then passed to R’s evaluation engine. The advantage to this approach is that it’s much more efficient as there is no parsing involved; however, all information about parse errors, files which produced errors, etc. was lost. The new approach uses R’s built-in parse function to build up the call and then passes it off to R. A slight overhead, but it should be negligible. So, if you feel that this approach is too slow OR I’ve introduced bugs or strange behavior, please let me know.
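The trade-off in item 2 (building a call directly is cheaper, but parsing source keeps file and line information for error messages) has a close analogue in Python. A sketch of that analogue, not rApache’s implementation: compiling handler source under a file name costs a parse, but a syntax error then reports where it is. `load_handler`, `handler`, and `double.py` are hypothetical names.

```python
def load_handler(source, filename):
    """Compile handler source under its file name and return the `handler`
    function it defines. Parsing costs a little time, but a SyntaxError now
    carries the file name and line number."""
    code = compile(source, filename, "exec")
    namespace = {}
    exec(code, namespace)
    return namespace["handler"]


good = "def handler(x):\n    return x * 2\n"
bad = "def handler(x):\n    return x *\n"

print(load_handler(good, "double.py")(21))  # 42

try:
    load_handler(bad, "double.py")
except SyntaxError as err:
    print(err.filename, err.lineno)  # double.py 2
```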

FUTURE PLANS

I’m gaining more experience building Debian/Ubuntu packages each day, so hopefully by some time in 2011 you can rely on binary releases for these distributions and not install rApache from source! Fingers crossed!

Development on the rApache 1.1 branch will be winding down (save bug fix releases) as I transition to the 1.2 branch. This will involve taking out a small chunk of code that defines the rApache development environment (all the CGI variables and the functions such as setHeader, setCookie, etc.) and placing it in its own R package… unnamed as of yet. This is to facilitate my development of the ralite R package, a small single-user cross-platform web server.

The goal for ralite is to speed up development of R web applications and take out a bit of friction in the development process by not having to run the full rApache server. Plus it would allow users to develop in the rApache environment while on Windows and later deploy on more capable server environments. The secondary goal for ralite is its use in other web server environments (nginx and IIS come to mind) as a persistent per-client process.

And finally, wiki.rapache.net will be the new www.rapache.net once I translate the manual over… any day now.

From http://biostat.mc.vanderbilt.edu/wiki/Main/JeffreyHorner

Not convinced? Try the demos above.