Category: SAS

Protected: SAS Institute lawsuit against WPS Episode 2 The Clone Wars

Interview: R for Stata Users

Here is an interview with Bob Muenchen, author of “R for SAS and SPSS Users” and co-author with Joe Hilbe of “R for Stata Users”.

Stata is a marvelous software package. Its syntax is well designed, concise and easy to learn. However, R offers Stata users advantages in two key areas: education and analysis.

Regarding education, R is quickly becoming the universal language of data analysis. Books, journal articles and conference talks often include R code because it’s a powerful language and everyone can run it. So R has become an essential part of the education of data analysts, statisticians and data miners.

Regarding analysis, R offers a vast array of methods that R users have written. Next to R, Stata probably has more useful user-written add-ons than any other analytic software. The Statistical Software Components collection at Boston College’s Department of Economics is quite impressive (http://ideas.repec.org/s/boc/bocode.html), containing hundreds of useful additions to Stata. However, R’s collection of add-ons currently contains 3,680 packages, and more are being added every week. Stata users can access these fairly easily by doing their data management in Stata, saving a Stata format data set, importing it into R and running what they need. Working this way, the R program may only be a few lines long.

There are many good books on R, but as I learned the language I found myself constantly wondering how each concept related to the packages I already knew. So in this book we describe R first using Stata terminology and then using R terminology. For example, when introducing the R data frame, we start out saying that it’s just like a Stata data set: a rectangular set of variables that are usually numeric with perhaps one or two character variables. Then we move on to say that R also considers it a special type of “list” which constrains all its “components” to be equal in length. That then leads into entirely new territory.
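To make the "list whose components must be equal in length" idea concrete, here is a minimal sketch. It is written in Python rather than R or Stata, it is not taken from the book, and the helper name `make_frame` and the sample columns are hypothetical. It only illustrates the constraint described above: a data set is a collection of named columns, and it is rejected if the columns differ in length.

```python
def make_frame(**columns):
    """Return a dict of named columns, refusing components of unequal length.

    Hypothetical helper for illustration only; it mimics the constraint
    described in the text, not R's actual data frame implementation.
    """
    lengths = {name: len(values) for name, values in columns.items()}
    if len(set(lengths.values())) > 1:
        raise ValueError(f"components differ in length: {lengths}")
    return {name: list(values) for name, values in columns.items()}


# A rectangular data set: mostly numeric, with one character variable.
frame = make_frame(id=[1, 2, 3], score=[90.0, 85.5, 77.0], sex=["f", "m", "f"])
n_rows = len(frame["id"])  # every column shares this length
```

Calling `make_frame(a=[1], b=[1, 2])` raises a `ValueError`, which is the "rectangular" guarantee a Stata user already takes for granted.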

The entire book is laid out to make learning easy for Stata users. The names used in the table of contents are Stata-based. The reader may look up how to “collapse” a data set by a grouping variable and find that one way R can do that is with the mysteriously named “tapply” function. A Stata user would never have guessed to look for that name.
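For readers who have not met the operation under either name, the sketch below shows what collapsing by a grouping variable does. It is a plain Python illustration, not code from the book; the function name `collapse_mean` and the `income` and `region` values are made up. With those hypothetical variables, the Stata form would be roughly `collapse (mean) income, by(region)` and the R form roughly `tapply(income, region, mean)`.

```python
from collections import defaultdict
from statistics import mean


def collapse_mean(values, groups):
    """Mean of `values` within each level of `groups` (illustrative helper)."""
    buckets = defaultdict(list)
    for value, group in zip(values, groups):
        buckets[group].append(value)  # split the values by group
    # apply the summary function to each group and combine the results
    return {group: mean(vals) for group, vals in buckets.items()}


income = [30, 50, 40, 60]
region = ["east", "west", "east", "west"]
by_region = collapse_mean(income, region)
```

The result has one entry per level of the grouping variable, which is the same shape a collapsed Stata data set or a `tapply` result would have.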

I didn’t have enough in-depth knowledge of Stata to pull this off by myself, so I was pleased to get Joe Hilbe as a co-author. Joe is a giant in the world of Stata. He wrote several of the Stata commands that ship with the product including glm, logistic and manova. He was also the first editor of the Stata Technical Bulletin, which later turned into the Stata Journal. I have followed his work from his days as editor of the statistical software reviews section in the journal The American Statistician. There he not only edited but also wrote many of the reviews which I thoroughly enjoyed reading over the years. If you don’t already know Stata, his review of Stata 9.0 is still good reading (November 1, 2005, 59(4): 335-348).

Describe the relationship between Stata and R and how it is the same or different from SAS / SPSS and R.

This is a very interesting question. I pointed out in R for SAS and SPSS Users that SAS and SPSS are structured very similarly while R is totally different. Stata, on the other hand, has many similarities to R. Here I’ll quote directly from the book:

• Both include rich programming languages designed for writing new analytic methods, not just a set of prewritten commands.

• Both contain extensive sets of analytic commands written in their own languages.

• The pre-written commands in R, and most in Stata, are visible and open for you to change as you please.

• Both save command or function output in a form you can easily use as input to further analysis.

• Both do modeling in a way that allows you to readily apply your models for tasks such as making predictions on new data sets. Stata calls these postestimation commands and R calls them extractor functions.

• In both, when you write a new command, it is on an equal footing with commands written by the developers. There are no additional “Developer’s Kits” to purchase.

• Both have legions of devoted users who have written numerous extensions and who continue to add the latest methods many years before their competitors.

• Both can search the Internet for user-written commands and download them automatically to extend their capabilities quickly and easily.

• Both hold their data in the computer’s main memory, offering speed but limiting the amount of data they can handle.

Can the book be used by an R user to learn Stata?

That’s certainly not ideal. The sections that describe the relationship between the two languages would be good to know and all the example programs are presented in both R and Stata form. However, we spend very little time explaining the Stata programs while going into the R ones step by step. That said, I continue to receive e-mails from R experts who learned SAS or SPSS from R for SAS and SPSS Users, so it is possible.

Describe the response to your earlier work, R for SAS and SPSS Users, and whether a new edition is forthcoming.

I am very pleased with the reviews for R for SAS and SPSS Users. You can read them all, even the one really bad one, at http://r4stats.com. We incorporated all the advice from those reviews into R for Stata Users, so we hope that this book will be well received too.

The second edition of R for SAS and SPSS Users is due to the publisher by the end of February, so it should be in the bookstores sometime in April 2011, if all goes as planned. I have a list of thirty new topics to add, and those won’t all fit. I have some tough decisions to make!

The Popularity of Data Analysis Software

Here is a nice page by Bob Muenchen (author of the “R for SAS and SPSS Users” and “R for Stata Users” books).

It is available at http://r4stats.com/popularity and uses a variety of methods, including Google Insights, PageRank and link analysis, as well as information from Rexer Analytics and KDnuggets.

I believe the following two graphs sum it all up:

1 Number of Jobs at Monster.com using keywords

2 Google Scholar’s analysis of academic papers

Despite R’s rapid growth, which is clearly evident, R still lags behind SAS and SPSS in both jobs and publications. So whether you are a corporate user or an academic user, it makes sense to have more than one skill just to be sure. What do you think? Is learning R mutually exclusive with learning SAS or SPSS? See http://r4stats.com/popularity for the complete analysis by Bob Muenchen.

It also shows the tremendous opportunity for companies like Revolution Analytics and XL Solutions (http://www.experience-rplus.com/), since there is clearly room for growth.

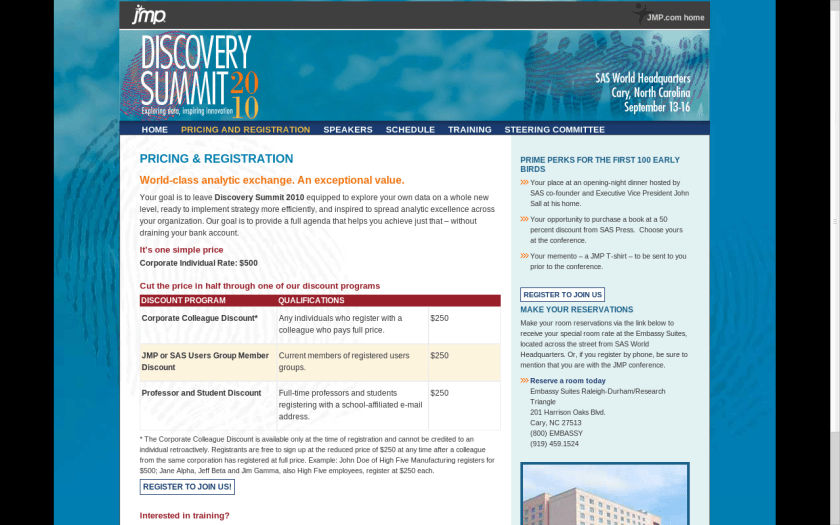

JMP Discovery Summit

SAS Early Days

From Anthony Barr, creator of the SAS language, at

http://www.barrsystems.com/about_us/the_company/sas_history.asp

and http://en.wikipedia.org/wiki/SAS_(software)#Early_history_of_SAS

A fascinating Proc-by-Proc read of who created what in those days. Quite easily some of the best work was coded in the 1970s by Sall, Goodnight, Barr and others.

SAS Related History

SAS Beginnings talk at NCSU April 21, 2010

Sept 1962 – May 1963 Began assistantship with North Carolina State University Computing Center. I was assigned to work with the Statistics Department. Created general analysis of variance program controlled by analysis of variance language similar to the notation of Kendal. Program was written in IBM 1410 assembler. Dr. A. Grandage, author of IBM 650 analysis of variance programs, advised on Analysis of Variance calculations. (“Statistical programs for the IBM 650, Part I,” Communications of the ACM, Volume 2, Issue 8.)

June – Aug 1963 Summer fellowship in Physical Oceanography, Woods Hole Oceanographic Institute.

Sept 1963 – May 1964 Resumed assistantship with North Carolina State Computing Center. Wrote multiple regression program with a compiler that generated machine code for transforming data. Dr. A. Grandage advised on the Doolittle procedure for inverting matrices.

June 1964 – May 1966 Employed with IBM Federal Systems Division at the Pentagon, Washington, DC. I was assigned to work with the National Military Command Center, the information processing branch of the Joint Chiefs of Staff.

Project: Rewrite and enhance the Formatted File System, a generalized database management system for retrieval and report writing.

Implemented three of the five major components: retrieval, sorting, and file update.

Innovated the idea of a uniform Lexical Analyzer for all languages in the system with a uniform method of handling all error messages within the system.

With the experience in this environment, I saw the power of the self-defining file for providing overall structure to the information processing world.

It became obvious that I could put statistical procedures in the same formatted file framework. At the same time, manuals for PL/1 appeared in the IBM library. The lexical design of PL/1 was an improvement over that used in the Formatted File System.

June 1966 I was recruited by North Carolina State University Statistics Department to rewrite analysis of variance and regression programs for the IBM 360. I saw this as an opportunity to develop the Statistical Analysis System (SAS).

I wrote the analysis of variance program while independently developing the SAS software for inputting and transforming data.

Sept 1966 Presented conceptual ideas of SAS to members of the Committee on Statistical Software of the University Statisticians of Southeast Experiment Station (USSERS). The meeting was held in Athens, GA. Individuals present:

- Frank Verlinden, North Carolina State University

- Anthony J. Barr, North Carolina State University

- Walt Drapula, Mississippi State University

- Jim Fortson, University of Georgia

January 1968 Jim Goodnight and I cooperated in putting his regression program into SAS. This procedure was invaluable to pharmaceutical and agricultural scientists in analysis of experiments with missing data.

Barr:

Developed language for describing regression and analysis of variance model, and preprocessor for creating dummy variables

Goodnight:

Developed regression and statistical routines that made practical the analysis of variance methodology within the regression framework

August 1972 Release of 1972 version of SAS. This was the first release to achieve wide distribution. SAS was now recognized as a major system in statistical computing. Credits for SAS 72 as described in SAS 76 Users Guide:

Anthony J. Barr

Language translator; data management and supervisor; ANOVA, DUNCAN, FACTOR, GUTTMAN, INBREED, LATTICE, NESTED, PLAN, PRINT, RANK, SORT, SPEARMAN

James H. Goodnight

CANCORR, CORR, DISCRIM, MEANS, PLOT, PROBIT, REGR, RSQUARE, RQUE, STANDARD, STEPWISE

Jolayne W. Service

“A User’s Guide to the Statistical Analysis System”

Carroll G. Perkins

HARVEY, HIST, PRTPCH: A Guide to the Supplementary Procedures Library for the Statistical Analysis System

37,000 total lines of code with distribution:

- Barr: 65%

- Goodnight: 32%

- Others: 3%

I had developed and implemented the language, data management, and interface to operating system.

June 1973 – May 1976 I rewrote the internals of SAS: Data Management, report writing and the compiler. John Sall joined us in 1973 (approx.).

June 1976 Release of 1976 version of SAS. The 76 version was a functionally complete system for statistical computing and business data analysis.

I wrote the systems portion of the software.

Credits in the SAS 1976 manual:

Anthony J. Barr

Language translator; data management and supervisor; GUTTMAN, NESTED, PRINT, SORT

James H. Goodnight

ANOVA, CLUSTER, DISCRIM, GLM, MEANS, NEIGHBOR, NLIN, PROBIT, RSQUARE, STANDARD, STEPWISE, TTEST, VARCOMP

John P. Sall

AUTOREG, BMDP, CONTENTS, CORR, DUNCAN, EDITOR, FACTOR, FREQ, MATRIX, OPTIONS, PLAN, RANK, SAS72, SCORE, SPECTRA, SYSREG, function library

Jane T. Helwig

“A User’s Guide to SAS 76”

Carroll G. Perkins (consultant)

CONVERT, SCATTER

67,000 total lines of code with distribution:

- Barr: 35%

- Goodnight: 18%

- Sall: 43%

June 1976 SAS Institute, Inc. was incorporated. Principals and percentage of ownership:

- Anthony J. Barr: 40%

- James H. Goodnight: 35%

- John Sall: 17%

- Jane Helwig: 8%

January 1979 I resigned from SAS Institute.

Copyright © 2006 Anthony J. Barr