It’s quite handy, especially for those who spend a lot of time on email and on the phone: the GmailPhone.

Try it if you haven’t already.


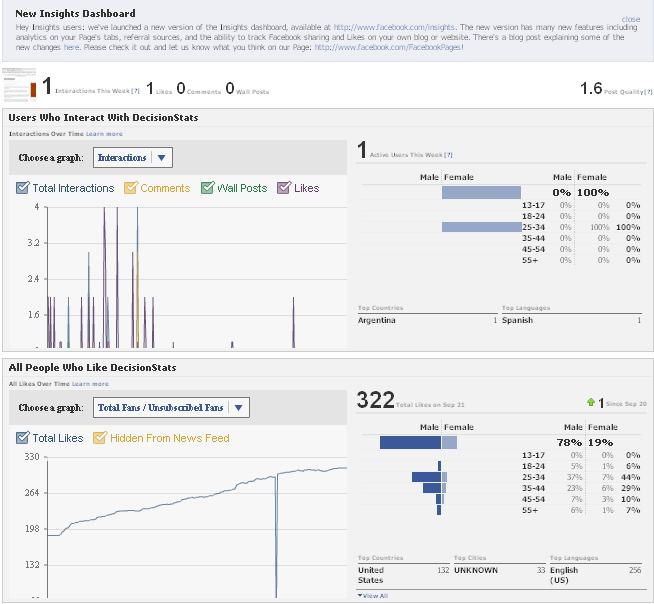

People sceptical of Facebook’s analytical value should look at its embedded analytics, which are a close rival to, and in some ways better than, Google Analytics for websites. They have recently been updated as well.

They are right there under the Insights button in the left margin of your Facebook Page.

For example, for the Facebook Page

http://facebook.com/Decisionstats

You can also use the Export Data function to run customized analytical and statistical tests on your corporate page’s data.

Older View


See the screenshot of the demographics of the 213 Decisionstats fans on Facebook (for privacy reasons, FB does not allow individual views, only aggregate views).

Here is a comparison of Windows Azure instances vs. Amazon EC2 compute instances.

Developers can choose the size of the VMs that run their application based on the application’s resource requirements. Windows Azure compute instances come in four sizes to support complex applications and workloads.

| Compute Instance Size | CPU | Memory | Instance Storage | I/O Performance |
|---|---|---|---|---|
| Small | 1.6 GHz | 1.75 GB | 225 GB | Moderate |
| Medium | 2 x 1.6 GHz | 3.5 GB | 490 GB | High |
| Large | 4 x 1.6 GHz | 7 GB | 1,000 GB | High |
| Extra large | 8 x 1.6 GHz | 14 GB | 2,040 GB | High |

Standard Rates:

Windows Azure

Source –

and

http://www.microsoft.com/windowsazure/windowsazure/

Amazon EC2 has more options, though:

http://aws.amazon.com/ec2/pricing/

| Standard On-Demand Instances | Linux/UNIX Usage | Windows Usage |
|---|---|---|
| Small (Default) | $0.085 per hour | $0.12 per hour |
| Large | $0.34 per hour | $0.48 per hour |
| Extra Large | $0.68 per hour | $0.96 per hour |
| Micro On-Demand Instances | | |
| Micro | $0.02 per hour | $0.03 per hour |
| High-Memory On-Demand Instances | | |
| Extra Large | $0.50 per hour | $0.62 per hour |
| Double Extra Large | $1.00 per hour | $1.24 per hour |
| Quadruple Extra Large | $2.00 per hour | $2.48 per hour |
| High-CPU On-Demand Instances | | |
| Medium | $0.17 per hour | $0.29 per hour |
| Extra Large | $0.68 per hour | $1.16 per hour |
| Cluster Compute Instances | | |
| Quadruple Extra Large | $1.60 per hour | N/A* |

\* Windows is not currently available for Cluster Compute Instances.
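To turn these hourly rates into something closer to a monthly budget, a quick back-of-the-envelope calculation helps. This is a minimal Python sketch, assuming an instance left running for a whole 730-hour month and ignoring storage, bandwidth and reserved-instance pricing; the rates are the ones in the table above.

```python
# Hourly on-demand rates (USD) copied from the EC2 pricing table above:
# (Linux/UNIX, Windows). None marks a combination Amazon does not offer.
HOURLY_RATES = {
    "Small (Default)": (0.085, 0.12),
    "Large": (0.34, 0.48),
    "Extra Large": (0.68, 0.96),
    "Micro": (0.02, 0.03),
    "High-Memory Extra Large": (0.50, 0.62),
    "High-Memory Double Extra Large": (1.00, 1.24),
    "High-Memory Quadruple Extra Large": (2.00, 2.48),
    "High-CPU Medium": (0.17, 0.29),
    "High-CPU Extra Large": (0.68, 1.16),
    "Cluster Compute Quadruple Extra Large": (1.60, None),
}

# Assumption: the instance runs around the clock, 24 * 365 / 12 = 730 hours a month.
HOURS_PER_MONTH = 730


def monthly_cost(instance, os_name="linux", hours=HOURS_PER_MONTH):
    """Estimated on-demand cost in USD, or None if that OS is not offered."""
    if os_name not in ("linux", "windows"):
        raise ValueError("os_name must be 'linux' or 'windows'")
    linux_rate, windows_rate = HOURLY_RATES[instance]
    rate = linux_rate if os_name == "linux" else windows_rate
    return None if rate is None else round(rate * hours, 2)


for name in HOURLY_RATES:
    print(f"{name:40s} Linux: {monthly_cost(name)}  Windows: {monthly_cost(name, 'windows')}")
```

At these rates, a Small instance running all month comes to about $62.05 on Linux versus $87.60 on Windows.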

http://aws.amazon.com/ec2/instance-types/

Standard Instances

Instances of this family are well suited for most applications.

Small Instance – default*

- 1.7 GB memory
- 1 EC2 Compute Unit (1 virtual core with 1 EC2 Compute Unit)
- 160 GB instance storage (150 GB plus 10 GB root partition)
- 32-bit platform
- I/O Performance: Moderate
- API name: m1.small

Large Instance

- 7.5 GB memory
- 4 EC2 Compute Units (2 virtual cores with 2 EC2 Compute Units each)
- 850 GB instance storage (2×420 GB plus 10 GB root partition)
- 64-bit platform
- I/O Performance: High
- API name: m1.large

Extra Large Instance

- 15 GB memory
- 8 EC2 Compute Units (4 virtual cores with 2 EC2 Compute Units each)
- 1,690 GB instance storage (4×420 GB plus 10 GB root partition)
- 64-bit platform
- I/O Performance: High
- API name: m1.xlarge

Micro Instances

Instances of this family provide a small amount of consistent CPU resources and allow you to burst CPU capacity when additional cycles are available. They are well suited for lower-throughput applications and websites that consume significant compute cycles periodically.

Micro Instance

- 613 MB memory
- Up to 2 EC2 Compute Units (for short periodic bursts)
- EBS storage only
- 32-bit or 64-bit platform
- I/O Performance: Low
- API name: t1.micro

High-Memory Instances

Instances of this family offer large memory sizes for high-throughput applications, including database and memory caching applications.

High-Memory Extra Large Instance

- 17.1 GB of memory
- 6.5 EC2 Compute Units (2 virtual cores with 3.25 EC2 Compute Units each)
- 420 GB of instance storage
- 64-bit platform
- I/O Performance: Moderate
- API name: m2.xlarge

High-Memory Double Extra Large Instance

- 34.2 GB of memory
- 13 EC2 Compute Units (4 virtual cores with 3.25 EC2 Compute Units each)
- 850 GB of instance storage
- 64-bit platform
- I/O Performance: High
- API name: m2.2xlarge

High-Memory Quadruple Extra Large Instance

- 68.4 GB of memory
- 26 EC2 Compute Units (8 virtual cores with 3.25 EC2 Compute Units each)
- 1690 GB of instance storage
- 64-bit platform
- I/O Performance: High
- API name: m2.4xlarge

High-CPU Instances

Instances of this family have proportionally more CPU resources than memory (RAM) and are well suited for compute-intensive applications.

High-CPU Medium Instance

- 1.7 GB of memory
- 5 EC2 Compute Units (2 virtual cores with 2.5 EC2 Compute Units each)
- 350 GB of instance storage
- 32-bit platform
- I/O Performance: Moderate
- API name: c1.medium

High-CPU Extra Large Instance

- 7 GB of memory
- 20 EC2 Compute Units (8 virtual cores with 2.5 EC2 Compute Units each)
- 1690 GB of instance storage
- 64-bit platform
- I/O Performance: High
- API name: c1.xlarge

Cluster Compute Instances

Instances of this family provide proportionally high CPU resources with increased network performance and are well suited for High Performance Compute (HPC) applications and other demanding network-bound applications.

Cluster Compute Quadruple Extra Large Instance

- 23 GB of memory
- 33.5 EC2 Compute Units (2 x Intel Xeon X5570, quad-core “Nehalem” architecture)
- 1690 GB of instance storage
- 64-bit platform
- I/O Performance: Very High (10 Gigabit Ethernet)
- API name: cc1.4xlarge
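The family descriptions above (High-Memory for memory-hungry work, High-CPU for compute-heavy work) can be sanity-checked by dividing each Linux hourly price by the instance’s EC2 Compute Units and by its memory. This is a minimal Python sketch using only the figures listed in this post; t1.micro is left out because its "up to 2" ECU is burst capacity rather than a sustained figure.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InstanceType:
    api_name: str
    ecu: float           # EC2 Compute Units, from the spec list above
    memory_gb: float     # memory in GB, from the spec list above
    linux_hourly: float  # Linux/UNIX on-demand price in USD/hour, from the pricing table


INSTANCES = [
    InstanceType("m1.small", 1, 1.7, 0.085),
    InstanceType("m1.large", 4, 7.5, 0.34),
    InstanceType("m1.xlarge", 8, 15, 0.68),
    InstanceType("m2.xlarge", 6.5, 17.1, 0.50),
    InstanceType("m2.2xlarge", 13, 34.2, 1.00),
    InstanceType("m2.4xlarge", 26, 68.4, 2.00),
    InstanceType("c1.medium", 5, 1.7, 0.17),
    InstanceType("c1.xlarge", 20, 7, 0.68),
    InstanceType("cc1.4xlarge", 33.5, 23, 1.60),
]


def price_per_ecu(i):
    return i.linux_hourly / i.ecu


def price_per_gb(i):
    return i.linux_hourly / i.memory_gb


def cheapest(metric):
    """API name of the instance type with the lowest value of `metric`."""
    return min(INSTANCES, key=metric).api_name


for i in INSTANCES:
    print(f"{i.api_name:12s} ${price_per_ecu(i):.4f} per ECU-hour  ${price_per_gb(i):.4f} per GB-hour")
```

On these numbers the c1 (High-CPU) types come out cheapest per ECU-hour, at about $0.034, and the m2 (High-Memory) types cheapest per GB-hour, at about $0.029, which matches how Amazon positions the two families.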

Also, SQL Azure (http://www.microsoft.com/en-us/sqlazure/default.aspx) offers SQL databases as a service, with a free trial offer.

If you are heavily invested in .NET/SQL or otherwise dependent on Microsoft, Azure is a nice alternative to EC2: http://www.microsoft.com/windowsazure/offers/popup/popup.aspx?lang=en&locale=en-US&offer=COMPARE_PUBLIC

Update: I just got approved for Google Storage, so I am adding their info, though the service is still in preview (and it’s free right now) 🙂

https://code.google.com/apis/storage/docs/overview.html

Google Storage for Developers offers a rich set of features and capabilities:

Read the Getting Started Guide to learn more about the service.

Note: Google Storage for Developers does not support Google Apps accounts that use your company domain name at this time.

Google Storage for Developers pricing is based on usage.

While it is not quite Salesforce.com, this is a promising start for the first ERP Google App: https://www.google.com/enterprise/marketplace/viewListing?productListingId=5759+8485502070963042532

An interesting development; maybe there will be some statistical or BI apps on the Google Apps Marketplace soon 😉

Apparently it is true, according to The Register, though the details will come in a paper next month. It is called Google Caffeine.

http://www.theregister.co.uk/2010/09/09/google_caffeine_explained/

Caffeine expands on BigTable to create a kind of database programming model that lets the company make changes to its web index without rebuilding the entire index from scratch. “[Caffeine] is a database-driven, Big Table–variety indexing system,” Lipkovitz tells The Reg, saying that Google will soon publish a paper discussing the system. The paper, he says, will be delivered next month at the USENIX Symposium on Operating Systems Design and Implementation (OSDI).

and interestingly

MapReduce, he says, isn’t suited to calculations that need to occur in near real-time.

MapReduce is a sequence of batch operations, and generally, Lipkovitz explains, you can’t start your next phase of operations until you finish the first. It suffers from “stragglers,” he says. If you want to build a system that’s based on series of map-reduces, there’s a certain probability that something will go wrong, and this gets larger as you increase the number of operations. “You can’t do anything that takes a relatively short amount of time,” Lipkovitz says, “so we got rid of it.”
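The point about a chain of map-reduces is easy to put a number on. Assuming, purely for illustration, that each stage independently has the same probability p of straggling or failing, the chance that at least one of n stages does is 1 - (1 - p)^n. A minimal Python sketch:

```python
def prob_any_stage_fails(p, n):
    """Probability that at least one of n stages straggles or fails,
    assuming each stage does so independently with probability p."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be between 0 and 1")
    return 1.0 - (1.0 - p) ** n


# Hypothetical per-stage probability of 2%, for chains of increasing length.
for n in (1, 5, 10, 50):
    print(n, round(prob_any_stage_fails(0.02, n), 3))
```

With that hypothetical 2% per stage, the risk climbs from 2% for a single stage to roughly 18% for ten chained stages and about 64% for fifty.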

With Caffeine, Google can update its index by making direct changes to the web map already stored in BigTable. This includes a kind of framework that sits atop BigTable, and Lipkovitz compares it to old-school database programming and the use of “database triggers.”
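To see why updating in place beats rebuilding, here is a toy inverted index in Python. It is a hypothetical sketch of the general idea of trigger-style incremental updates, not Google’s Caffeine design: when one page changes, a hook (`on_document_changed`, a made-up name) adjusts only the postings for terms that page gained or lost, while `rebuild_from_scratch` recomputes everything from every page.

```python
from collections import defaultdict


class IncrementalIndex:
    """Toy inverted index kept up to date in place, one changed page at a time."""

    def __init__(self):
        self.page_terms = {}              # url -> set of terms (stands in for the stored web map)
        self.postings = defaultdict(set)  # term -> set of urls containing it

    def on_document_changed(self, url, text):
        """Trigger-style hook: touch only the postings for terms this page gained or lost."""
        new_terms = set(text.lower().split())
        old_terms = self.page_terms.get(url, set())
        for term in old_terms - new_terms:
            self.postings[term].discard(url)
            if not self.postings[term]:
                del self.postings[term]  # drop empty posting lists
        for term in new_terms - old_terms:
            self.postings[term].add(url)
        self.page_terms[url] = new_terms

    def search(self, term):
        return sorted(self.postings.get(term.lower(), ()))


def rebuild_from_scratch(pages):
    """Batch alternative: recompute every posting list from every page."""
    postings = defaultdict(set)
    for url, text in pages.items():
        for term in set(text.lower().split()):
            postings[term].add(url)
    return postings


pages = {"a.example": "big data tools", "b.example": "search ads"}
index = IncrementalIndex()
for url, text in pages.items():
    index.on_document_changed(url, text)

# One page changes: only the "ads", "big" and "data" postings are touched.
pages["b.example"] = "search big data"
index.on_document_changed("b.example", pages["b.example"])
print(index.search("data"))  # ['a.example', 'b.example']
```

Both routes end in the same index; the difference is that the incremental one does work proportional to the change, not to the whole collection.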

but most importantly

In 2004, Google published research papers on GFS and MapReduce that became the basis for the open source Hadoop platform now used by Yahoo!, Facebook, and — yes — Microsoft. But as Google moves beyond GFS and MapReduce, Lipkovitz stresses that he is “not claiming that the rest of the world is behind us.”

But oh no!

“We’re in business of making searches useful,” he says. “We’re not in the business of selling infrastructure.”

But I say, why not? Search is good, and advertising is okay.

There is more (not evil) money in (big data) infrastructure than there is in advertising. But the advertising guys disagree.

While reading around the internet, I came across Microsoft’s counterpart to MapReduce, called Dryad, which has been around for some time but has not generated quite the buzz that Hadoop or MapReduce have.

http://research.microsoft.com/en-us/projects/dryadlinq/

DryadLINQ

DryadLINQ is a simple, powerful, and elegant programming environment for writing large-scale data parallel applications running on large PC clusters.

Overview

New! An academic release of Dryad/DryadLINQ is now available for public download.

The goal of DryadLINQ is to make distributed computing on large compute clusters simple enough for every programmer. DryadLINQ combines two important pieces of Microsoft technology: the Dryad distributed execution engine and the .NET Language Integrated Query (LINQ).

Dryad provides reliable, distributed computing on thousands of servers for large-scale data parallel applications. LINQ enables developers to write and debug their applications in a SQL-like query language, relying on the entire .NET library and using Visual Studio.

DryadLINQ translates LINQ programs into distributed Dryad computations:

- C# and LINQ data objects become distributed partitioned files.

- LINQ queries become distributed Dryad jobs.

- C# methods become code running on the vertices of a Dryad job.

DryadLINQ has the following features:

- Declarative programming: computations are expressed in a high-level language similar to SQL

- Automatic parallelization: from sequential declarative code the DryadLINQ compiler generates highly parallel query plans spanning large computer clusters. For exploiting multi-core parallelism on each machine DryadLINQ relies on the PLINQ parallelization framework.

- Integration with Visual Studio: programmers in DryadLINQ take advantage of the comprehensive VS set of tools: Intellisense, code refactoring, integrated debugging, build, source code management.

- Integration with .Net: all .Net libraries, including Visual Basic, and dynamic languages are available.

Conciseness: the following line of code is a complete implementation of the Map-Reduce computation framework in DryadLINQ:
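For readers without C# at hand, the same idea can be sketched in Python. This is not the DryadLINQ code the page refers to and not Microsoft’s API; it is a sequential analogue showing that MapReduce is just a flat-map (what LINQ calls SelectMany) followed by a group-by (LINQ’s GroupBy) and a per-group reduce. The function name `map_reduce` is hypothetical.

```python
from itertools import chain, groupby


def map_reduce(source, mapper, key_selector, reducer):
    """Flat-map every record, group the mapped items by key, then reduce each group.
    A sequential stand-in for what DryadLINQ would distribute across a cluster."""
    mapped = chain.from_iterable(mapper(record) for record in source)  # like SelectMany
    ordered = sorted(mapped, key=key_selector)                         # groupby needs sorted input
    return [reducer(key, list(group))                                  # like GroupBy + reduce
            for key, group in groupby(ordered, key=key_selector)]


# Word count, the usual MapReduce example.
lines = ["the quick fox", "the lazy dog"]
counts = map_reduce(lines, str.split, lambda w: w, lambda word, ws: (word, len(ws)))
print(counts)  # [('dog', 1), ('fox', 1), ('lazy', 1), ('quick', 1), ('the', 2)]
```

The sort is needed only because Python’s `itertools.groupby` groups consecutive equal keys; a distributed engine would instead partition by key across machines.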

and http://research.microsoft.com/en-us/projects/dryad/

Dryad

The Dryad Project is investigating programming models for writing parallel and distributed programs to scale from a small cluster to a large data-center.

Overview

New! An academic release of DryadLINQ is now available for public download.

Dryad is an infrastructure which allows a programmer to use the resources of a computer cluster or a data center for running data-parallel programs. A Dryad programmer can use thousands of machines, each of them with multiple processors or cores, without knowing anything about concurrent programming.

The Structure of Dryad Jobs

A Dryad programmer writes several sequential programs and connects them using one-way channels. The computation is structured as a directed graph: programs are graph vertices, while the channels are graph edges. A Dryad job is a graph generator which can synthesize any directed acyclic graph. These graphs can even change during execution, in response to important events in the computation.

Dryad is quite expressive. It completely subsumes other computation frameworks, such as Google’s map-reduce, or the relational algebra. Moreover, Dryad handles job creation and management, resource management, job monitoring and visualization, fault tolerance, re-execution, scheduling, and accounting.
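The vertex-and-channel structure described above can be mimicked on a single machine. This is a hypothetical Python sketch, not Dryad’s API (`run_job` is a made-up name): each vertex is an ordinary sequential function, each edge is a one-way channel carrying one vertex’s output to another, and the runner executes vertices in topological order, refusing graphs with cycles. The example graph is map-reduce shaped, which illustrates the claim that map-reduce is just one particular DAG.

```python
from collections import defaultdict, deque


def run_job(vertices, edges, sources):
    """Run a Dryad-style job graph sequentially on one machine.

    vertices: name -> sequential function that takes a list of inputs
    edges:    (src, dst) pairs; each is a one-way channel from src's output to dst
    sources:  name -> list of input records for vertices with no incoming channel
    """
    incoming, outgoing = defaultdict(list), defaultdict(list)
    pending = {v: 0 for v in vertices}  # number of unfinished upstream vertices
    for src, dst in edges:
        outgoing[src].append(dst)
        incoming[dst].append(src)
        pending[dst] += 1
    ready = deque(v for v in vertices if pending[v] == 0)
    outputs = {}
    while ready:
        v = ready.popleft()
        # A source vertex reads its records; any other vertex gets one item per input channel.
        inputs = [outputs[u] for u in incoming[v]] if incoming[v] else sources.get(v, [])
        outputs[v] = vertices[v](inputs)
        for w in outgoing[v]:
            pending[w] -= 1
            if pending[w] == 0:
                ready.append(w)
    if len(outputs) != len(vertices):
        raise ValueError("job graph must be acyclic")
    return outputs


def square_all(records):
    return [x * x for x in records]


def total(partitions):
    return sum(sum(part) for part in partitions)


# Two "map" vertices feed one "reduce" vertex: sum of squares over two partitions.
job = {"map0": square_all, "map1": square_all, "reduce": total}
channels = [("map0", "reduce"), ("map1", "reduce")]
result = run_job(job, channels, {"map0": [1, 2], "map1": [3, 4]})
print(result["reduce"])  # 30
```

Swapping in a different graph (say, a join followed by a filter) needs no change to the runner, which is the sense in which the DAG model is more general than a fixed map-then-reduce pipeline.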

The Dryad Software Stack

As proof of Dryad’s versatility, a rich software ecosystem has been built on top of Dryad:

- SSIS on Dryad executes many instances of SQL server, each in a separate Dryad vertex, taking advantage of Dryad’s fault tolerance and scheduling. This system is currently deployed in a live production system as part of one of Microsoft’s AdCenter log processing pipelines.

- DryadLINQ generates Dryad computations from the LINQ Language-Integrated Query extensions to C#.

- The distributed shell is a generalization of the pipe concept from the Unix shell. If Unix pipes allow the construction of one-dimensional (1-D) process structures, the distributed shell allows the programmer to build 2-D structures in a scripting language. The distributed shell generalizes Unix pipes in three ways:

  - It allows processes to easily connect multiple file descriptors of each process (hence the 2-D aspect).

  - It allows the construction of pipes spanning multiple machines, across a cluster.

  - It virtualizes the pipelines, allowing the execution of pipelines with many more processes than available machines, by time-multiplexing processors and buffering results.

- Several languages are compiled to distributed shell processes. PSQL is an early version, recently replaced with Scope.

Publications

Dryad: Distributed Data-Parallel Programs from Sequential Building Blocks

Michael Isard, Mihai Budiu, Yuan Yu, Andrew Birrell, and Dennis Fetterly

European Conference on Computer Systems (EuroSys), Lisbon, Portugal, March 21-23, 2007

Video of a presentation on Dryad at the Google Campus, given by Michael Isard, Nov 1, 2007.

Also interesting to read:

The basic computational model we decided to adopt for Dryad is the directed-acyclic graph (DAG). Each node in the graph is a computation, and each edge in the graph is a stream of data traveling in the direction of the edge. The amount of data on any given edge is assumed to be finite, the computations are assumed to be deterministic, and the inputs are assumed to be immutable. This isn’t by any means a new way of structuring a distributed computation (for example Condor had DAGMan long before Dryad came along), but it seemed like a sweet spot in the design space given our other constraints.

So, why is this a sweet spot? A DAG is very convenient because it induces an ordering on the nodes in the graph. That makes it easy to design scheduling policies, since you can define a node to be ready when its inputs are available, and at any time you can choose to schedule as many ready nodes as you like in whatever order you like, and as long as you always have at least one scheduled you will continue to make progress and never deadlock. It also makes fault-tolerance easy, since given our determinism and immutability assumptions you can backtrack as far as you want in the DAG and re-execute as many nodes as you like to regenerate intermediate data that has been lost or is unavailable due to cluster failures.

from

http://blogs.msdn.com/b/dryad/archive/2010/07/23/why-does-dryad-use-a-dag.aspx
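Both properties in the quote (schedule any ready node in any order, and recover lost data by re-executing upstream nodes) can be shown in a few lines of Python. This is a hypothetical single-machine sketch, not Dryad code; `schedule`, `regenerate` and the five-node example job are made up, and the sketch relies on the same assumptions the quote names, deterministic computations and immutable inputs.

```python
import random


def schedule(graph, compute, seed=None):
    """Run a DAG by repeatedly picking any ready node, i.e. one whose inputs all exist.

    graph:   node -> list of parent nodes whose outputs it consumes
    compute: node -> deterministic function of the parents' outputs, in order
    The random choice stands in for "schedule ready nodes in whatever order you like".
    """
    rng = random.Random(seed)
    done = {}
    while len(done) < len(graph):
        ready = [n for n in graph if n not in done and all(p in done for p in graph[n])]
        if not ready:
            raise ValueError("no node is ready, so the graph must contain a cycle")
        node = rng.choice(ready)
        done[node] = compute[node](*[done[p] for p in graph[node]])
    return done


def regenerate(node, graph, compute, store):
    """Rebuild a lost result by backtracking to whatever is still in `store` and
    re-executing only the missing nodes. Safe because inputs never change and every
    computation gives the same answer when rerun."""
    if node not in store:
        args = [regenerate(p, graph, compute, store) for p in graph[node]]
        store[node] = compute[node](*args)
    return store[node]


# Hypothetical five-node job.
graph = {"read": [], "clean": ["read"], "count": ["clean"],
         "total": ["clean"], "report": ["count", "total"]}
compute = {
    "read": lambda: [3, 1, 4, 1, 5],
    "clean": lambda xs: sorted(set(xs)),
    "count": lambda xs: len(xs),
    "total": lambda xs: sum(xs),
    "report": lambda n, s: (n, s),
}

results = schedule(graph, compute, seed=7)
lost = dict(results)
for name in ("clean", "count", "report"):  # simulate intermediate data lost to a failure
    del lost[name]
print(regenerate("report", graph, compute, lost))  # (4, 13)
```

The ready list is recomputed on every pass for clarity; a real scheduler would track remaining inputs per node instead. Note that `regenerate` reruns "clean" and "count" but reuses the surviving "total", so only the damaged part of the graph is recomputed.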
