It seems there is a little-known component called SAS Toolkit that enables you to create customized SAS commands.

I am still trying to find actual usage of this software, but it can basically be used to create additional customization in SAS. The price is reportedly 12,000 USD a year for the Toolkit, but academics could be encouraged to write theses or projects on newer algorithms using standard SAS discounting. In addition, there is as of now no licensing constraint on reselling your customized SAS algorithm (but check with SAS in Cary, NC or at http://www.sas.com before you go ahead and develop).

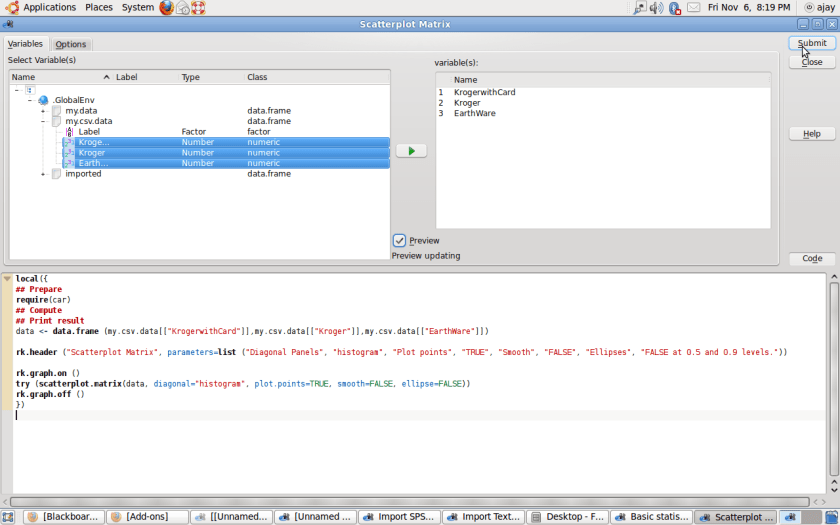

So if you have an existing open source R package and someone wants to port it to the SAS language or SAS software, they can simply use the SAS Toolkit to transport the algorithm (which, to my knowledge, is mostly open in R), or maybe even license it. Specific candidates are graphics, Hmisc, Pl.ier, or even lattice and clustering packages (like mclust).

Citation: http://www.sas.com/products/toolkit/index.html

SAS/TOOLKIT®

SAS/TOOLKIT software enables you to write your own customized SAS procedures (including graphics procedures), informats, formats, functions (including IML and DATA step functions), CALL routines, and database engines in several languages including C, FORTRAN, PL/I, and IBM assembler.

SAS Procedures

A SAS procedure is a program that interfaces with the SAS System to perform a given action. The SAS System provides services to the procedure such as:

- statement processing

- data set management

- memory allocation

SAS Informats, Formats, Functions, and CALL Routines (IFFCs)

You can use SAS/TOOLKIT software to write your own SAS informats, formats, functions, and CALL routines in the same choice of languages: C, FORTRAN, PL/I, and IBM assembler. Like procedures, user-written functions and CALL routines add capabilities to the SAS System that enable you to tailor the system to your site’s specific needs. Many of the same reasons for writing procedures also apply to writing SAS formats and CALL routines.

SAS/TOOLKIT Software and PROC FORMAT

You may wonder why you should use SAS/TOOLKIT software to create user-written formats and informats when base SAS software includes PROC FORMAT. SAS/TOOLKIT software enables you to create formats and informats that perform more than the simple table lookup functions provided by the FORMAT procedure. When you write formats and informats with SAS/TOOLKIT software, you can do the following:

- assign values according to an algorithm instead of looking up a value in a table.

- look up values in a database to assign formatted values.
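To make the difference from a PROC FORMAT table lookup concrete, here is a minimal sketch in plain C of a format that computes its output with an algorithm. The function name `format_duration` and its signature are my own assumptions for illustration; this is not the SAS/TOOLKIT interface, only the kind of logic you might wrap in a user-written format.

```c
#include <stddef.h>
#include <stdio.h>

/* Hypothetical helper, NOT part of the SAS/TOOLKIT API.
   Formats a non-negative number of seconds as HH:MM:SS by computing
   each piece, rather than looking the value up in a table.
   Returns 0 on success, -1 on a negative value, a NULL buffer,
   or a buffer that is too small. */
int format_duration(long total_seconds, char *buf, size_t buflen)
{
    if (total_seconds < 0 || buf == NULL)
        return -1;

    long hours   = total_seconds / 3600;
    long minutes = (total_seconds % 3600) / 60;
    long seconds = total_seconds % 60;

    int written = snprintf(buf, buflen, "%02ld:%02ld:%02ld",
                           hours, minutes, seconds);
    return (written < 0 || (size_t)written >= buflen) ? -1 : 0;
}
```

A lookup table would need one row per possible input value, whereas the computed version covers every non-negative input with a few lines of arithmetic.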

Writing a SAS IFFC

The routines you are most likely to use when writing an IFFC perform the following tasks:

- provide a mechanism to interface with functions that are already written at your site

- use algorithms to implement existing programs

- handle problems specific to the SAS environment, such as missing values.
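The missing-value point is easy to overlook, so here is a small sketch in plain C of a function that skips missing inputs. It assumes NaN as a stand-in for a SAS missing value; real SAS missing values have their own representation, and the names `TOY_MISSING`, `toy_is_missing`, and `mean_nonmissing` are hypothetical rather than SAS/TOOLKIT routines.

```c
#include <math.h>
#include <stddef.h>

/* Assumption for illustration: NaN stands in for a SAS missing value.
   This is not how SAS itself encodes missing values. */
#define TOY_MISSING NAN

/* Hypothetical check; returns nonzero when x is "missing". */
static int toy_is_missing(double x)
{
    return isnan(x);
}

/* Mean of the non-missing values. If every value is missing
   (or n is 0), the result is itself missing. */
double mean_nonmissing(const double *values, size_t n)
{
    double sum = 0.0;
    size_t count = 0;

    for (size_t i = 0; i < n; i++) {
        if (!toy_is_missing(values[i])) {
            sum += values[i];
            count++;
        }
    }
    return count > 0 ? sum / (double)count : TOY_MISSING;
}
```

The design choice worth noting is that the function returns a missing result instead of dividing by zero when there is nothing to average.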

SAS Engines

SAS engines allow data to be presented to the SAS System so it appears to be a standard SAS data set. Engines supplied by SAS Institute consist of a large number of subroutines, all of which are called by the portion of the SAS System known as the engine supervisor. However, with SAS/TOOLKIT software, an additional level of software, the engine middle-manager, simplifies how you write your user-written engine.

An Engine versus a Procedure

To process data from an external file, you can write either an engine or a SAS procedure. In general, it is a good idea to implement data extraction mechanisms as procedures instead of engines. If your applications need to read most or all of a data file, you should consider creating a procedure, but if they need random access to the file, you should consider creating an engine.

Writing SAS Engines

When you write an engine, you must include in your program a prescribed set of routines to perform the various tasks required to access the file and interact with the SAS System. These routines:

- open and close the data set

- obtain information about variables

- provide information about an external file or database

- read and write observations.

In addition, your program uses several structures defined by the SAS System for storing information needed by the engine and the SAS System. The SAS System interacts with your engine through the SAS engine middle-manager.
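The idea of a "prescribed set of routines" can be pictured as a table of function pointers. The sketch below is in plain C with entirely hypothetical names (`ToyDataset`, `ToyEngine`, `toy_engine`); it is not the SAS/TOOLKIT structures or the middle-manager interface, and it only covers reading from an in-memory table, not writing.

```c
#include <stddef.h>
#include <string.h>

/* Hypothetical types for illustration only; these are NOT the
   SAS/TOOLKIT structures or routine names. */
typedef struct {
    const double *rows;  /* row-major data: n_rows * n_vars values */
    size_t n_rows;
    size_t n_vars;
    size_t cursor;       /* next row for sequential reads */
    int is_open;
} ToyDataset;

/* The "prescribed set of routines" an engine would supply. */
typedef struct {
    int    (*open)(ToyDataset *ds);
    size_t (*var_count)(const ToyDataset *ds);
    int    (*read_obs)(ToyDataset *ds, double *out);                /* sequential */
    int    (*read_obs_at)(ToyDataset *ds, size_t row, double *out); /* random access */
    void   (*close)(ToyDataset *ds);
} ToyEngine;

static int toy_open(ToyDataset *ds)
{
    ds->cursor = 0;
    ds->is_open = 1;
    return 0;
}

static size_t toy_var_count(const ToyDataset *ds)
{
    return ds->n_vars;
}

/* Copies one observation into out; fails if closed or out of range. */
static int toy_read_obs_at(ToyDataset *ds, size_t row, double *out)
{
    if (!ds->is_open || row >= ds->n_rows)
        return -1;
    memcpy(out, ds->rows + row * ds->n_vars, ds->n_vars * sizeof(double));
    return 0;
}

/* Reads the observation at the cursor, then advances the cursor. */
static int toy_read_obs(ToyDataset *ds, double *out)
{
    if (toy_read_obs_at(ds, ds->cursor, out) != 0)
        return -1;
    ds->cursor++;
    return 0;
}

static void toy_close(ToyDataset *ds)
{
    ds->is_open = 0;
}

const ToyEngine toy_engine = {
    toy_open, toy_var_count, toy_read_obs, toy_read_obs_at, toy_close
};
```

A caller would go through `toy_engine.open`, then `toy_engine.read_obs` or `toy_engine.read_obs_at`, then `toy_engine.close`. Having both a sequential and a random-access read mirrors the engine-versus-procedure distinction quoted above.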

Using the USERPROC Procedure

Before you run your grammar, procedure, IFFC, or engine, use SAS/TOOLKIT software’s USERPROC procedure.

- For grammars, the USERPROC procedure produces a grammar function.

- For procedures, IFFCs, and engines, the USERPROC procedure produces a program constants object file, which is necessary for linking all of the compiled object files into an executable module.

Compile and link the output of PROC USERPROC with the SAS System so that the system can access the procedure, IFFC, or engine when a user invokes it.

Using User-Written Procedures, IFFCs, and Engines

After you have created a SAS procedure, IFFC, or engine, you need to tell the SAS System where to find the module in order to run it. You can store your executable modules in any appropriate library. Before you invoke the SAS System, use operating system control language to specify the fileref SASLIB for the directory or load library where your executables are stored. When you invoke the SAS System and use the name of your procedure, IFFC, or engine, the SAS System checks its own libraries first and then looks in the SASLIB library for a module with that name.
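One consequence of that search order is that a user-written module with the same name as a built-in one would never be found. Here is a toy model of the lookup in plain C; the `ToyLibrary` type and `resolve_module` function are hypothetical and say nothing about how SAS actually implements the search.

```c
#include <stddef.h>
#include <string.h>

/* Hypothetical model of a library: a label plus the module names it holds. */
typedef struct {
    const char *label;
    const char *const *modules;
    size_t n_modules;
} ToyLibrary;

/* Walks the libraries in search order and returns the label of the
   first one that contains the module, or NULL if none does. */
const char *resolve_module(const ToyLibrary *libs, size_t n_libs,
                           const char *module)
{
    for (size_t i = 0; i < n_libs; i++) {
        for (size_t j = 0; j < libs[i].n_modules; j++) {
            if (strcmp(libs[i].modules[j], module) == 0)
                return libs[i].label;
        }
    }
    return NULL;
}
```

If the system library is listed first and SASLIB second, a name present in both resolves to the system copy, so it seems wise to pick names for your own procedures that do not collide with existing SAS ones.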

Debugging Capabilities

The TLKTDBG facility allows you to obtain debug information concerning SAS routines called by your code, and works with any of the supported programming languages. You can turn this facility on and off without having to recompile or relink your code. Debug messages are sent to the SAS log. In addition to the SAS/TOOLKIT internal debugger, the C language compiler used to create your extension to the SAS System can be used to debug your program. The SAS/C Compiler, the VMS Compiler, and the dbx debugger for AIX can all be used.

NOTE: SAS/TOOLKIT software is used to develop procedures, IFFCs, and engines. Users do not need to license SAS/TOOLKIT software to run procedures developed with the software.

SAS/C Compiler

March 2008: Level B support is effective beginning January 1, 2008 until December 31, 2009.

March 2005: The SAS/C and SAS/C++ compiler and runtime components are reclassified as SAS Retired products for z/OS, VM/ESA and cross-compiler platforms. SAS has no plans to develop or deliver a new release of the SAS/C product.

The SAS/C and SAS/C++ family of products provides a versatile development environment for IBM zSeries® and System/390® processors. Enhancements and product features for SAS/C 7.50F include support for z/Architecture instructions and 64-bit addressing, IEEE floating-point, C99 math library and a number of C++ language enhancements and extensions. The SAS/C runtime library, optimizer and debugging environments have been updated and enhanced to fully support the breadth of C/C++ 64-bit addressing, IEEE and C++ product features.

Finally, the SAS/C and SAS/C++ 7.50.06 Cross-compiler products for Windows, Linux, Solaris and AIX incorporate the same enhancements and features that are provided with SAS/C and SAS/C++ 7.50F for z/OS.

Also see: http://support.sas.com/kb/15/647.html