An interview with Carole Jesse, an Analytics professional experienced in SAS, JMP, and Risk Management.

Ajay- Describe your career in science from school to now.

Carole- Truthfully, my career in science started in 7th grade. Hey, I know this is further back in time than you intended the question to go! However, something significant happened that year that pretty much set me on the path that I am still on today. I discovered Algebra. Up to that point in time, I was an average student in ‘arithmetic’. Algebra introduced LETTERS into the mix with numbers, in the simplest of ways that we have all seen: ‘Solve for x in the equation x+2=5’. That was something I could get behind, AND I excelled at it immediately. Without mathematical excellence, efforts in learning science can fall apart. Mathematics is everywhere!

I spent the rest of my secondary education consuming all the math and science that I could get. By the time I entered college I had already been exposed to pre-calculus and physics, and I was surprised to find classmates in my college freshman courses who had never seen antiderivatives, memorized the quotient rule, or worked an inclined-plane friction problem.

My goal as an undergraduate was to become a Veterinarian. The beauty of a pre-Vet curriculum is that it is pretty much like pre-Med, rigorous and broad in the sciences. In my first two years of undergraduate work, I was exposed to more Chemistry, more Mathematics, more Physics, along with things like Genetics, Biology, even the Plant and Animal Sciences. Although I did not stick with my pursuit of Veterinary Medicine, it laid a solid foundation that has served me very well in the strangest of places.

I consider myself a Mathematician/Statistician because of my academic degrees in those areas: first a BS in Mathematics/Physics at the University of Wisconsin, followed by an MS in Statistics at Montana State University. In between the BS and MS I also dabbled briefly in Electrical Engineering at the University of Minnesota.

Since academia, it is my breadth across ALL the sciences that has allowed me to move fluidly among diverse industries: from High Volume Manufacturing of Consumer Products, to Nuclear Energy, to Semiconductor Manufacturing/Packaging, to Financial Services, to Health Care. I succeed at business problem solving in these industries by applying my knowledge of Statistical Methods, coupled with business acumen and a peripheral understanding of the technologies used. I have worked closely with scientists and engineers and could enter THEIR world speaking THEIR language, which helped us reach solutions quickly.

I cannot place enough emphasis on the importance of exposure to a broad range of sciences, as early as possible, for anyone who wants to be involved in Advanced Analytics and Business Intelligence. As a manager, I look closely for these diverse backgrounds in candidates.

Ajay- I find the ranks of computer scientists and analysts to be overwhelmingly male, despite this being a lucrative profession. Do you think that BI and Analytics are male-dominated? How can the trend be reshaped?

Carole- Welcome to my world! All kidding aside, yes, that has been my observation as well. While I am not versed in the actual gender statistics for Computer Science and Advanced Analytics versus other fields, based on my years in and around these fields there does appear to be a bias.

This is not due to a lack of capability or interest in these fields on the part of women. I believe it is due more to a long history of cultural norms and negative social messages that may push women away from these fields. The messages can be subtle, but if you pay close attention, you will see them. Being one of 10 women in an undergraduate engineering class of 150 students sends a message right there. Even though these 10 women made it into the class, the pressure of being a minority, gender-based or otherwise, can strongly influence whether they remain there.

In my own experience, I have frequently encountered judgments that made me feel being “good at math” was an unacceptable trait for a woman to have. It is important to note that these judgments have come equally from men AND women. So I think that until both genders develop higher expectations of women in the hard sciences, the trends will continue. It has been decades since my 7th grade introduction to algebra, but the negative social messages about girls in math and science still appear to be present today. Otherwise there would be no need (i.e., no market) for books like Danica McKellar’s “Math Doesn’t Suck,” and the follow-up “Kiss My Math,” both aimed at battling these negative messages at the middle school level.

As for how I have battled these cultural expectations, I developed a thick skin. I have also learned to expect excellence from myself even when a teacher, a peer, or a boss may have had lower expectations for me than for a male counterpart. Sort of a John Mayer “Who Says” attitude. Who says I can’t do Math and Science? Watch me.

Ajay- How would you explain Risk Management using software to a class of graduate students in mathematics and statistics?

Carole- There are many areas of Risk Management. My specific experience has been on the Credit Risk Management and Fraud Risk Management sides, in a couple of industries. For credit risk in financial services, there is typically a specific department whose role is to quantify and predict credit risk, not just for the current portfolio but for new products as well. Various methodologies are used, ranging from summarizing portfolio characteristics that have a known relationship to default, to building predictive models from historical data for production implementation.

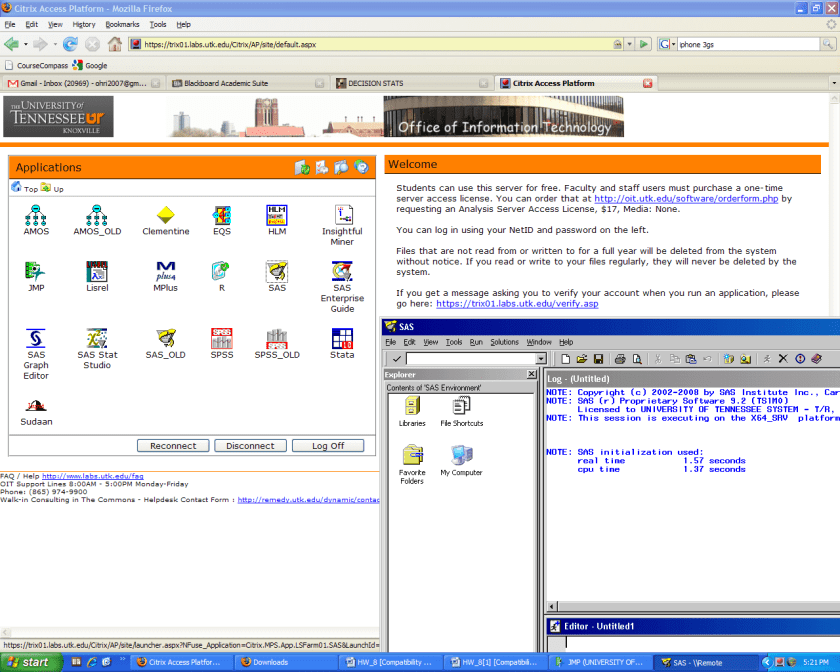

Key skills needed here are a good understanding of the business, solid knowledge of statistical methods, and computing skills. The computing/software skills fall into three main categories, and the actual tools will likely differ across them:

1) Query and preparation of data, which might be tackled with SAS®, Business Objects, or straight SQL;

2) Model building and validation, which requires a true modeling package or coding language like SAS®, SPSS, R, etc.; and

3) Model implementation, which is the trickiest, as implementation can face many system limitations, though SAS® or C++ are often seen here.
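To make the three categories concrete for a class, here is a minimal sketch of a toy credit-risk workflow in Python, standing in for the tools named above. It (1) queries and prepares data with SQL, using an in-memory SQLite table as a stand-in for a production database, (2) fits a one-variable logistic regression of default on credit utilization and checks it on held-out records, and (3) wraps the fitted model in a plain scoring function of the kind a production system could call. The `loans` table, the `utilization` field, the data, and the function names are all hypothetical; a real credit-risk model would use far more data and characteristics and a dedicated modeling package.

```python
import math
import sqlite3

# 1) Query and preparation of data: a hypothetical loans table in SQLite.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE loans (id INTEGER PRIMARY KEY, utilization REAL, defaulted INTEGER)")
rows = [
    (0.10, 0), (0.20, 0), (0.25, 0), (0.30, 0), (0.35, 1), (0.40, 0),
    (0.55, 0), (0.60, 1), (0.70, 1), (0.75, 0), (0.85, 1), (0.95, 1),
]
conn.executemany("INSERT INTO loans (utilization, defaulted) VALUES (?, ?)", rows)
data = conn.execute("SELECT utilization, defaulted FROM loans ORDER BY id").fetchall()

# Hold out every third record for validation.
train = [r for i, r in enumerate(data) if i % 3 != 2]
valid = [r for i, r in enumerate(data) if i % 3 == 2]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# 2) Model building: logistic regression fit by batch gradient descent on log-loss.
def fit_logistic(records, lr=0.5, epochs=5000):
    b0, b1 = 0.0, 0.0
    n = len(records)
    for _ in range(epochs):
        g0 = g1 = 0.0
        for x, y in records:
            err = sigmoid(b0 + b1 * x) - y  # gradient of log-loss w.r.t. the linear predictor
            g0 += err
            g1 += err * x
        b0 -= lr * g0 / n
        b1 -= lr * g1 / n
    return b0, b1

def log_loss(records, b0, b1):
    eps = 1e-12
    total = 0.0
    for x, y in records:
        p = sigmoid(b0 + b1 * x)
        total -= y * math.log(p + eps) + (1 - y) * math.log(1 - p + eps)
    return total / len(records)

b0, b1 = fit_logistic(train)
# Validation: hold-out log-loss should beat log(2), the loss of a coin-flip model.
valid_loss = log_loss(valid, b0, b1)

# 3) Model implementation: a standalone scoring function.
def probability_of_default(utilization):
    return sigmoid(b0 + b1 * utilization)

print(f"b1={b1:.2f}  validation log-loss={valid_loss:.3f}")
print(f"PD at 20% utilization: {probability_of_default(0.20):.2f}")
print(f"PD at 90% utilization: {probability_of_default(0.90):.2f}")
```

Keeping step 3 as a small function with only the fitted coefficients baked in mirrors why implementation is often done in a different language than modeling: the scoring logic is simple to port, even when the fitting is not.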

Ajay- Describe some of your most challenging and most exciting projects over the years.

Carole- I have been very fortunate to have had many challenges and good projects in every role I have held, but as I look back today, the things that stand out most were in ‘high tech’. Because it is high tech, the industry has no fear of technology, and it is fast-paced and always evolving toward the next generation of product.

I spent seven years in the Semiconductor industry during the 1990s at Micron Technology, Intel, and Motorola. At the beginning of that window we were leaving the “486 processor” world, and over the rest of it we spanned the realm of “Pentium processors.” Moore’s Law dominated all of this. To stay competitive, all of these companies embraced statistical methods to help speed up development time.

At one point, I supported a group of about 10 R&D engineers in the Design and Analysis of their process improvement and simplification experiments. This gave me exposure to much of the leading-edge research the team was working on.

I recall one project with the goal of optimizing capacitance via surface roughness of the capacitor structures. In addition to all the science involved at the manufacturing step, what made this so interesting was the difficulty in measuring capacitance at the point in the process where film roughness was introduced. All we had were surface images after this step. The semiconductor wafers had to pass through several more process steps to get to the point where capacitance could actually be measured. All of this provided challenges around the design of the experiment and the data handling and analysis.

By working closely with both the process engineer and the process technician, I was able to pull the image files from the experimental runs off the imaging tool. I used SAS® (yes, another shameless plug for my favorite software) to process the images using Fast Fourier Transforms. The transformed data was then correlated with capacitance in the analysis of the experimental results. Finding the “sweet spot” for capacitance, as driven by surface roughness, provided a huge leap for this process technology team.
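The details of that SAS® analysis are not described here, so the following is only an illustration of the general idea, not a reconstruction of it. The Python sketch computes a discrete Fourier transform of a one-dimensional surface-height profile, sums the spectral power above a chosen cutoff frequency as a simple roughness metric, and then computes the Pearson correlation between that metric and capacitance across experimental runs. The profiles, the cutoff, the metric (`high_freq_power`), and the capacitance values are all hypothetical. A naive O(n²) DFT keeps the example to the standard library; real image work would use a 2-D FFT routine, which computes the same transform much faster.

```python
import cmath
import math

def dft(signal):
    """Naive discrete Fourier transform (O(n^2)); an FFT computes the same values faster."""
    n = len(signal)
    return [sum(signal[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]

def high_freq_power(profile, cutoff):
    """Hypothetical roughness metric: spectral power at frequencies cutoff..Nyquist."""
    mean = sum(profile) / len(profile)
    spectrum = dft([h - mean for h in profile])  # remove the mean (DC) height first
    n = len(profile)
    return sum(abs(spectrum[k]) ** 2 for k in range(cutoff, n // 2 + 1)) / n

def pearson(xs, ys):
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)

# Hypothetical profiles: a long-wavelength undulation plus a fine ripple of growing amplitude.
n = 64
runs = [
    [0.5 * math.sin(2 * math.pi * t / n) + amp * math.sin(2 * math.pi * 12 * t / n)
     for t in range(n)]
    for amp in (0.1, 0.3, 0.5, 0.7)
]

roughness = [high_freq_power(p, cutoff=8) for p in runs]
capacitance = [1.02, 1.10, 1.21, 1.30]  # hypothetical measurements taken later in the process

print([round(r, 3) for r in roughness])
print(f"correlation: {pearson(roughness, capacitance):.3f}")
```

The cutoff separates slow undulation of the film (excluded) from fine-scale texture (included); in practice the choice of which frequency band to summarize would itself be an engineering decision informed by the physics of the capacitor structure.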

The challenges of today are much different from those of the 1990s. In recent years, I have been working with transactional data related to financial services or health care claims, and the challenges lie in the sheer volume of the data. In the last decade in particular, most industries have been able to put the infrastructure in place to gather and store massive amounts of data about their businesses. The challenge of turning this data into meaningful, actionable information has been just as exciting as using Fast Fourier Transforms on images to optimize capacitance!

Currently I am working with an Oracle database where one table in the schema has 250 million records and a couple hundred fields. I refer to this as a “Pushing Tera” situation, since this one table is close to a Terabyte in size. Storing the data is not a big deal; working with data this large, or larger, is the challenge.
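A quick back-of-the-envelope check shows how a table of this shape reaches terabyte scale. Taking “a couple hundred fields” as 200 and “close to a Terabyte” as roughly $10^{12}$ bytes (both assumptions for illustration), the implied average storage is:

$$
\frac{10^{12}\ \text{bytes}}{2.5\times 10^{8}\ \text{rows}} = 4{,}000\ \text{bytes per row},
\qquad
\frac{4{,}000\ \text{bytes per row}}{200\ \text{fields per row}} = 20\ \text{bytes per field}.
$$

Twenty bytes per field on average is modest, so no unusually wide columns are needed to get there; row count times field count alone does the work, which is also why any query that touches every row of such a table is expensive.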

Different skill sets are needed here beyond those of just an analyst, data miner, or statistician. These very large database (VLDB) situations have morphed me into a bit of an IT person.

- How do you efficiently query such large databases? An inefficient SQL query is barely noticeable when the database is small, but when the database is large, SQL efficiency is key. Many skills needed in industry, like Unix and SQL, are not necessarily taught in academia but get picked up along the way. I now write efficient SQL code, but many poorly written jobs gave their lives so that I could learn these efficiencies!

- Eventually I will need to organize this data into an application-specific format and put data security controls around the process. Is this Advanced Analytics? Not really; it is more of an MIS role. But the newness of these challenges keeps me excited about my work.
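As a small illustration of the SQL-efficiency habits described above, the sketch below uses Python's built-in `sqlite3` module as a stand-in for Oracle; the `claims` table, its columns, and the index name are hypothetical. It contrasts two habits: checking with SQLite's `EXPLAIN QUERY PLAN` whether a filter can use an index instead of scanning every row, and letting the database filter and aggregate rather than pulling every row back to the client. Oracle has its own tooling (for example, `EXPLAIN PLAN`) and a different optimizer, so treat this as a sketch of the habit, not of Oracle-specific tuning.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("""CREATE TABLE claims (
    claim_id INTEGER PRIMARY KEY,
    member_id INTEGER,
    service_date TEXT,
    amount REAL)""")
conn.executemany(
    "INSERT INTO claims (member_id, service_date, amount) VALUES (?, ?, ?)",
    [(i % 500, f"2009-{(i % 12) + 1:02d}-15", float(i % 97)) for i in range(20000)],
)

def plan(sql, params=()):
    """Return the human-readable detail column of SQLite's EXPLAIN QUERY PLAN output."""
    return [row[-1] for row in conn.execute("EXPLAIN QUERY PLAN " + sql, params)]

query = "SELECT SUM(amount) FROM claims WHERE member_id = ?"

# Without an index on member_id, the filter forces a scan of every row.
before = plan(query, (42,))
print(before)

# With an index on the filter column, SQLite can seek straight to the matching rows.
conn.execute("CREATE INDEX idx_claims_member ON claims (member_id)")
after = plan(query, (42,))
print(after)

# Inefficient habit: drag every row and column back to the client, then filter there.
slow_total = sum(r[3] for r in conn.execute("SELECT * FROM claims") if r[1] == 42)

# Efficient habit: let the database filter and aggregate, returning a single value.
fast_total = conn.execute(query, (42,)).fetchone()[0]

print(slow_total == fast_total)  # same answer; only the work done differs
```

On 20,000 rows both versions finish instantly; the difference only becomes painful at the “Pushing Tera” scale, which is exactly why the habit is worth forming before it matters.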

Ajay- How important do you think work life balance is for people in this profession? What do you do to chill out?

Carole- I don’t think work-life balance is any more or less important for decision science professionals than for any other profession, really. I have friends in many other professions, like Law, Nursing, and Financial Planning, with the same work-life balance struggles.

We live in a busy culture that places more and more professional demands on us. Let’s face it, most of us are caretakers for someone besides ourselves: a spouse, a child, a dog, or even an elderly parent. Therefore, a total focus on work is bound to upset the work-life balance for most of us.

My biggest struggle comes in the form of balancing the two sides of my brain. That may sound weird, but one thing you have to agree with is that all of this is pretty “Left Brained”: mathematics, statistics, business intelligence, computing, etc.

To balance this out and tap into my Right Brain, I like to dabble in the arts to some extent. Don’t get me wrong, I am not an artist! But that doesn’t mean I can’t draw on creativity in the artistic sense. For example, this past summer I took a course on Adobe Photoshop and Illustrator at the Minneapolis College of Art and Design. This provided the best of both worlds, combining software and art! In addition to learning how to remove Cindy Crawford’s mole (yes, we did this), there were some very useful projects. One of my course projects was creating a customized Twitter background. An endeavor like this gives me a ‘chilling out’ factor away from the normal work world. I know many other Left Brain leaners who do similar things, like playing a musical instrument or painting. This is another reason why I took up digital photography: more visual arts.

Volunteer work has a balancing effect too. I try to give back to the community when I can; I have swung a hammer for Habitat for Humanity and done record keeping for an Animal Rescue organization.

And if none of this works, I enjoy cooking for my family and friends, and plying them with wine!

Ajay- What are your views on:

A) Data Quality

Carole- I’d have to say I am for data quality! Who isn’t? But the reality is that data is dirty. That “Pushing Tera” Oracle table I mentioned earlier, well, it turns out it has some issues. And it is incumbent upon me to determine the quality of that data before attempting to do anything analytical with it. One role industry needs more of: database administrators with business knowledge. More often than not, even if there was a business-savvy DBA, that person has moved on, leaving the consumers of that data (that would be me) to fend for themselves. There is some debate over which philosopher said “Know thyself.” Today’s job challenge is to “Know thy data,” or perhaps “Value those that know thy data.”
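One practical first step toward “knowing thy data” is a column-by-column profile before any modeling. The Python sketch below counts, for each column, the missing values, the distinct values, the values that fail a simple validity rule, and the values that occur more than once (a quick check on columns that should be unique keys). The sample records, column names, and validity rules are hypothetical; against a large Oracle table the same counts would normally be computed in SQL inside the database rather than in client code.

```python
from collections import Counter

# Hypothetical extract: each record maps column -> value (None means missing).
records = [
    {"loan_id": 1, "state": "MN", "fico": 720, "balance": 150000.0},
    {"loan_id": 2, "state": "mn", "fico": None, "balance": 98000.0},
    {"loan_id": 3, "state": "WI", "fico": 910, "balance": -50.0},
    {"loan_id": 3, "state": None, "fico": 655, "balance": 210000.0},
]

# Hypothetical validity rules; each returns True for an acceptable non-missing value.
rules = {
    "loan_id": lambda v: isinstance(v, int) and v > 0,
    "state": lambda v: isinstance(v, str) and len(v) == 2 and v.isupper(),
    "fico": lambda v: 300 <= v <= 850,  # assumed valid range for this score
    "balance": lambda v: v >= 0,
}

def profile(rows, rules):
    report = {}
    for col, is_valid in rules.items():
        values = [r.get(col) for r in rows]
        present = [v for v in values if v is not None]
        counts = Counter(present)
        report[col] = {
            "missing": len(values) - len(present),
            "distinct": len(counts),
            "invalid": sum(1 for v in present if not is_valid(v)),
            # Number of distinct values that appear more than once (key-uniqueness check).
            "duplicated_values": sum(1 for c in counts.values() if c > 1),
        }
    return report

report = profile(records, rules)
for col, stats in report.items():
    print(col, stats)
```

Even on four rows this surfaces a duplicated key, a lowercase state code, an out-of-range score, and a negative balance: the kinds of issues that are cheap to find before analysis and expensive to discover after.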

B) Predictive Analytics for Fraud Monitoring

There is a huge market for analytics in fraud detection and prevention, but it is not for the faint of heart. Insiders, at least in Mortgage and Health Care, are the typical perpetrators of lucrative fraud. These insiders know how the industry processes work, and they exploit this. As soon as one loophole is discovered and patched, fraudsters look for another one to exploit. This makes predictive analytics for Fraud different from other areas, where underlying patterns are probably more stable. Any methodology used here must have “turn on a dime” features built in, if possible. With economic conditions as they are, fraud detection and monitoring will remain an important and challenging field.
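One generic way to build some “turn on a dime” behavior into a monitor is to let it forget old data at a controlled rate. The Python sketch below, offered as an illustration rather than a description of any particular fraud system, flags a transaction amount when it lies far from an exponentially weighted moving mean, measured in units of an exponentially weighted moving standard deviation. The class name `EwmaMonitor`, its parameters `alpha`, `threshold`, and `warmup`, and the transaction stream are all hypothetical.

```python
import math

class EwmaMonitor:
    """Flags values far from an exponentially weighted baseline that adapts over time."""

    def __init__(self, alpha=0.2, threshold=3.0, warmup=5):
        self.alpha = alpha          # larger alpha forgets old behavior faster
        self.threshold = threshold  # flag when |x - mean| exceeds this many std devs
        self.warmup = warmup        # observations to see before flagging anything
        self.mean = None
        self.var = 0.0
        self.count = 0

    def update(self, x):
        """Score x against the current baseline, then fold x into the baseline."""
        self.count += 1
        if self.mean is None:
            self.mean = x
            return False
        sd = math.sqrt(self.var)
        flagged = (self.count > self.warmup and sd > 0
                   and abs(x - self.mean) / sd > self.threshold)
        # Exponentially weighted updates of the mean and variance.
        diff = x - self.mean
        incr = self.alpha * diff
        self.mean += incr
        self.var = (1 - self.alpha) * (self.var + diff * incr)
        return flagged

monitor = EwmaMonitor()
stream = [100, 102, 98, 101, 99, 103, 97, 100, 500, 101, 99]  # hypothetical amounts
flags = [monitor.update(x) for x in stream]
print(flags)
```

The forgetting rate is the design trade-off: a larger `alpha` re-centers the baseline quickly after a genuine shift in behavior, but it also lets a sustained burst of fraud pull the baseline toward itself, while a smaller `alpha` is steadier but slower to adapt. In this simple version a flagged value still updates the baseline, which is why the readings after the spike are not flagged; a production monitor might exclude or down-weight flagged values instead.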

Biography

Carole Jesse has been applying statistical methods and advanced analytics in a variety of industries for the last 20 years. Her career spans High Volume Manufacturing of Consumer Products, Nuclear Energy, Semiconductor Manufacturing/Packaging, Financial Services, and Health Care. Applications have ranged from Design and Analysis of Experiments to Credit Risk Prediction to Fraud Pattern Recognition. Carole holds a B.S. in Mathematics from the University of Wisconsin and an M.S. in Statistics from Montana State University, as well as several professional certifications. All the opinions expressed here are her own, and not those of her employers: past, present, or future. (Although her dog Angie may have had some influence.) Ms. Jesse currently lives and works in Minneapolis, Minnesota.
