Here is an in-depth interview with Peter J Thomas, one of Europe’s top Business Intelligence experts and influential thought leaders. Peter talks about BI tools, data quality, science careers, cultural transformation and the key focus areas for BI.

I am a firm believer that the true benefits of BI are only realised when it leads to cultural transformation. -Peter James Thomas

Ajay- Describe your early career, from college to the present.

Peter – I was an all-rounder academically, but at the time that I was taking public exams in the 1980s, if you wanted to pursue a certain subject at University, you had to do related courses between the ages of 16 and 18. Because of this, I dropped things that I enjoyed such as English and ended up studying Mathematics, Further Mathematics, Chemistry and Physics. This was not because I disliked non-scientific subjects, but because I was marginally fonder of the scientific ones. In a way it is nice that my current blogging allows me to use language more.

The culmination of these studies was attending Imperial College in London to study for a BSc in Mathematics. Within the curriculum, I was more drawn to Pure Mathematics and Group Theory in particular, and so went on to take an MSc in these areas. This was an intercollegiate course and I took a unit at each of King’s College and Queen Mary College, but everything else was still based at Imperial. I was invited to stay on to do a PhD. It was even suggested that I might be able to do this in two years, given my MSc work, but I decided that a career in academia was not for me and so started looking at other options.

As sometimes happens, a series of coincidences and a slice of luck meant that I joined a technology start-up, then called Cedardata, late in 1988; my first role was as a Trainee Analyst / Programmer. Cedardata was one of the first organisations to offer an Accounting system based on a relational database platform, something that was then rather novel, at least in the commercial arena. The RDBMS in question was Oracle version 5, running on VAX VMS, and later on DEC Ultrix and a wide variety of other UNIX flavours. Our input screens were written in SQL*Forms 2 (later Oracle Forms), and more complex processing logic and reports were in Pro*C; this was before PL/SQL. Obviously this environment meant that I had to become very conversant with SQL*Plus and C itself.

When I joined Cedardata, they had 10 employees, 3 customers and annual revenue of just £50,000 ($80,000). By the time I left the company eight years later, it had grown dramatically to having a staff of 250, over 300 clients in a wide range of industries and sales in excess of £12 million ($20 million). It had also successfully floated on the main London Stock Exchange. When a company grows that quickly the same thing tends to happen to its employees.

Cedardata was probably the ideal environment for me at the time; an organisation that grew rapidly, offering new opportunities and challenges to its employees; that was fiercely meritocratic; and where narrow, but deep, technical expertise was encouraged to be rounded out by developing more general business acumen, a customer-focused attitude and people-management skills. I don’t think that I would have learnt as much, or progressed anything like as quickly in any other type of organisation.

It was also at Cedardata that I had my first experience of the class of applications that later became known as Business Intelligence tools. This was using BusinessObjects 3.0 to write reports, cross-tabs and graphs for a prospective client, the UK Foreign and Commonwealth Office (the UK counterpart of the US State Department). The approach must have worked, as we beat Oracle Financials in a play-off to secure the multi-million pound account.

During my time at Cedardata, I rose to become an executive and filled a number of roles including Head of Development and also Assistant to the MD / Head of Product Strategy. Spending my formative years in an organisation where IT was the business and where the customer was King had a profound impact on me and has influenced my subsequent approach to IT / Business alignment.

Ajay- How would you convince more young people to take up maths and science? What advice would you give to policy makers to encourage more students to study maths and science?

Peter- While I have used little of my Mathematics directly in my commercial career, the approach to problem-solving that it inculcated in me has been invaluable. On arriving at University, it was something of a shock to be presented with Mathematical problems where you couldn’t simply look up the method of solution in a textbook and apply it to guarantee success. Even in my first year I had to grapple with challenges where you had no real clue where to start. Instead what worked, at least most of the time, was immersing yourself in the general literature, breaking down the problem into more manageable chunks, trying different techniques – sometimes quite recherché ones – to make progress, occasionally having an insight that provides a short-cut, but more often succeeding through dogged determination. All of that sounds awfully like the approach that has worked for me in a business context.

Having said that, I was not terribly business savvy as a student. I didn’t take Mathematics because I thought that it would lead to a career; I took it because I was fascinated by the subject. As I mentioned earlier, I enjoyed learning about a wide range of things, but Science seemed to relate to the most fundamental issues. Mathematics was both the framework that underpinned all of the Sciences and also offered its own world where astonishing and beautiful results could be found, independent of any applicability; although it has to be said that there are few branches of Mathematics that have not been applied somewhere or other.

I think you either have this appreciation of Science and Mathematics or you don’t and that this happens early on.

Certainly my interest was supported by my parents and a variety of teachers, but a lot of it arose from simply reading about Cosmology, or Vulcanism, or Palaeontology. I recently watched a YouTube video of Stephen Jay Gould saying that when he was a child in the 1950s all children were “in” to Dinosaurs, but that he actually got to make a career out of it. Maybe all children aren’t “in” to dinosaurs in the same way today; perhaps the mystery and sense of excitement have gone.

In the UK at least, there appear to be fewer and fewer people taking Science and Mathematics. I am not sure what is behind this trend. I read pieces that suggest that Science and Maths are viewed as being “hard” subjects, and people opt for “easier” alternatives. I think creative writing is one of the hardest things to do, so I’m not sure where this perspective comes from.

Perhaps some things that don’t help are the twin images of the Scientist as a white-coated boffin and the Mathematician as a chalk-covered recluse, neither of whom have much of a grasp on the world beyond their narrow discipline. While of course there is a modicum of truth in these stereotypes, they are far from being wholly accurate in my experience.

Perhaps Science has fallen off the pedestal that it was placed on in the 1950s and 1960s. Interest in Science had been spurred by a range of inventions that had improved people’s lives and often made the inventors a lot of money. Science was seen as the way to a better tomorrow, a view reinforced by such iconic developments as the discovery of the structure of DNA, our ever-deepening insight into sub-atomic physics and the unravelling of many mysteries of the Universe. These advances in pure science were supported by feats of scientific / engineering achievement such as the Apollo space programme. The military importance of Science was also put into sharp relief by the Manhattan Project, something that also maybe sowed the seeds for later disenchantment and even fear of the area.

The inevitable fallibility of some Scientists and some scientific projects burst the bubble. High-profile problems included the Thalidomide tragedy and the outcry, however ill-informed, about genetically modified organisms. Also the poster child of the scientific / engineering community was laid low by the Challenger disaster. On top of this, living with the scientifically-created threat of mutually-assured destruction probably began to change the degree of positivity with which people viewed Science and Scientists. People arrived at the realisation that Science cannot address every problem; how much effort has gone into finding a cure for cancer for example?

In addition, in today’s highly technological world, the actual nuts and bolts of how things work are often both hidden and mysterious. While people could relatively easily understand how a steam engine works, how many have any idea about how their iPod functions? Technology has become invisible and almost unimportant, until it stops working.

I am a little wary of Governments fixing issues such as these, which are the result of major generational and cultural trends. Often state action can have unintended and perverse results. Society as a whole goes through cycles and maybe at some future point Science and Mathematics will again be viewed as interesting areas to study; I certainly hope so. Perhaps the current concerns about climate change will inspire a generation of young people to think more about technological ways to address this and interest them in pertinent Sciences such as Meteorology and Climatology.

Ajay- How would you rate the various tools in the BI industry, for example in a brief SWOT analysis of each?

Peter- I am going to offer a Politician’s reply to this. The really important question in BI is not which tool is best, but how to make BI projects successful. While many an unsuccessful BI manager may blame the tool or its vendor, this is not where the real issues lie.

I firmly believe that successful BI rests on four mutually reinforcing pillars:

- understand the questions the business needs to answer,

- understand the data available,

- transform the data to meet the business needs and

- embed the use of BI in the organisation’s culture.

If you get these things right then you can be successful with almost any of the excellent BI tools available in the marketplace. If you get any one of them wrong, then using the paragon of BI tools is not going to offer you salvation.

I think about BI tools in the same way as I do the car market. Not so many years ago there were major differences between manufacturers.

The Japanese offered ultimate reliability, but maybe didn’t often engage the spirit.

The Germans prided themselves on engineering excellence, slanted either in the direction of performance or luxury, but were not quite as dependable as the Japanese.

The Italians offered out-and-out romance and theatre, with mechanical integrity an afterthought.

The French seemed to think that bizarrely shaped cars with wheels as thin as dinner plates were the way forward, but at least they were distinctive.

The Swedes majored on a mixture of safety and aerospace cachet, but sometimes struggled to shift their image of being boring.

The Americans were still in the middle of their love affair with the large and the rugged, at the expense of convenience and value-for-money.

Stereotypically, my fellow-countrymen majored on agricultural charm, or wooden-panelled nostalgia, but struggled with the demands of electronics.

Nowadays, the quality and reliability of cars are much closer to each other. Most manufacturers have products with similar features and performance and economy ratings. If we take financial issues to one side, differences are more likely to relate to design, or to how people perceive a brand. Today the quality of a Ford is not far behind that of a Toyota. The styling of a Honda can be as dramatic as that of an Alfa Romeo. Lexus and Audi are playing in areas previously the preserve of BMW and Mercedes, and so on.

To me this is also where the market for BI tools is at present. It is relatively mature and the differences between product sets are less than before.

Of course this doesn’t mean that the BI field will not be shaken up by some new technology or approach (in-memory BI or SaaS come to mind). This would be the equivalent of the impact that the first hybrid cars had on the auto market.

However, from the point of view of implementations, most BI tools will do at least an adequate job and picking one should not be your primary concern in a BI project.

Ajay- SAS Institute Chief Marketing Officer Jim Davis (interviewed on this blog) argues that business analytics is superior to business intelligence, which he regards as an over-hyped term. What numbers, statistics and graphs, rather than semantics, would you quote to help redirect those perceptions?

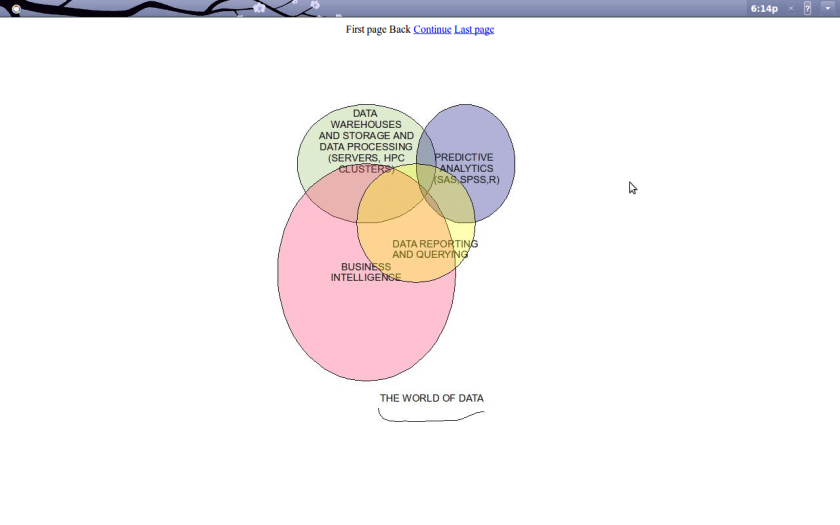

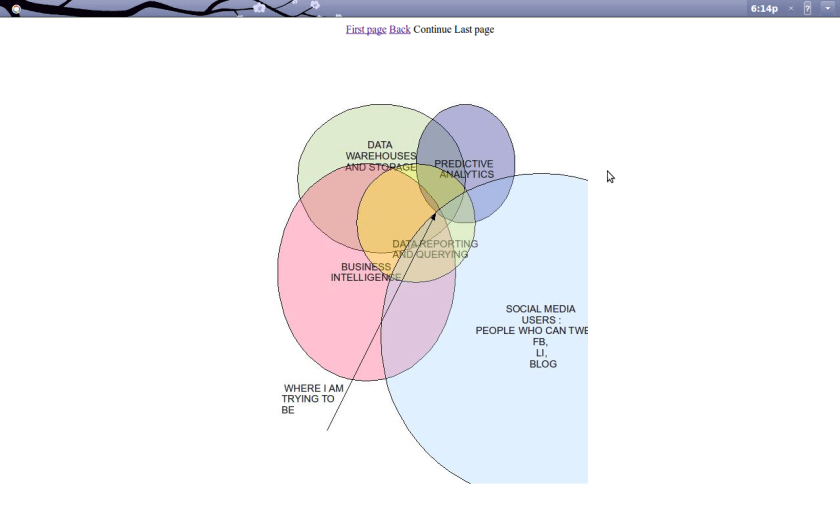

I myself use SAS, SPSS and R, and find that they enable what James Taylor calls Decision Management much better than the simple ETL, reporting and graph-aggregation tools found in many BI suites.

Peter- I have expended quite a lot of energy and hundreds of words on this subject. If people are interested in my views, which are rather different to those of Jim Davis, then I’d suggest that they read them in a series of articles starting with Business Analytics vs Business Intelligence [URL http://peterthomas.wordpress.com/2009/03/28/business-analytics-vs-business-intelligence/ ].

I will however offer some further thoughts and to do this I’ll go back to my car industry analogy. In a world where cars are becoming more and more comparable in terms of their reliability, features, safety and economy, things like styling, brand management and marketing become more and more important.

As the true differences between BI vendors narrow, expect more noise to be made by marketing departments about how different their products are.

I have no problem in acknowledging SAS as a leader in Business Analytics; too many people I respect use their tools for me to think otherwise. However, I think a better marketing strategy for them would be to stick to the many positives of their own products. If they insist on continuing to trash competitors, then it would make sense for them to do this in a way that couldn’t be debunked by a high school student after ten seconds’ reflection.

Ajay- In your opinion, what is the average RoI that a small, medium or large enterprise gets by investing in a business intelligence platform? What advice would you give to each type of firm to help them make up their minds?

Peter- The question is pretty much analogous to “What are the benefits of opening an office in China?” The answer is going to depend on what the company does; what their overall strategy is and how a China operation might complement this; whether their products and services are suitable for the Chinese market; how their costs, quality and features compare to local competitors; and whether they have cracked markets closer to home already.

To put things even more prosaically, “How long is a piece of string?”

Taking to one side the size and complexity of an organisation, BI projects come in all shapes and sizes.

Personally I have led Enterprise-wide, all-pervasive BI projects which have had a profound impact on the company. I have also seen well-managed and successful BI projects targeted on a very narrow and specific area.

The former obviously cost more than the latter, but the benefits are commensurately greater. In fact I would argue that the wider a BI project is spread, the greater its payback. Maybe lessons can be learnt and confidence built in an initial implementation to a small group, but to me the real benefit of BI is realised when it touches everything that a company does.

This is not based on a self-interested boosting of BI. To me if what we want to do is take better business decisions, then the greater number of such decisions that are impacted, the better that this is for the organisation.

Also there are some substantial up-front investments required for BI. These would include: building the BI team; establishing the warehouse and a physical architecture on which to deliver your application. If these can be leveraged more widely, then costs come down.

The same point can be made about the intellectual property that a successful BI team develops. This is one reason why I am a fan of the concept of BI Competency Centres [URL http://peterthomas.wordpress.com/2009/05/11/business-intelligence-competency-centres/ ].

I have been lucky enough to contribute to an organisation turning round from losing hundreds of millions of dollars to recording profits of twice that magnitude. When business managers cite BI as a major factor behind such a transformation, then this is clearly a technology that can be used to dramatic effect.

Nevertheless both estimating the potential impact of BI and measuring its actual effectiveness are non-trivial activities. A number of different approaches can be taken, some of which I cover in my article:

Measuring the benefits of Business Intelligence [URL http://peterthomas.wordpress.com/2009/02/26/measuring-the-benefits-of-business-intelligence/ ]. As ever there is no single recipe for success.

Ajay- Which BI tool or code are you most comfortable with, and what are its salient points?

Peter – Although I have been successful with elements of the IBM-Cognos toolset and think that this has many strong points, not least being relatively user-friendly, I think I’ll go back to my earlier comments about this area being much less important than many others for the success of a BI project.

Ajay- How do you think cloud computing will change BI? What percentage of BI budgets goes to data quality, and what is the eventual impact of data quality on results?

Peter – I think that the jury is still out on cloud computing and BI. By this I do not mean that cloud computing will not have an impact, but rather that it remains unclear what this impact will actually be.

Given the maturity of the market, my suspicion is that the BI equivalent of a Google is not going to emerge from nowhere. There are many excellent BI start-ups in this space and I have been briefed by quite a few of them.

However, I think the future of cloud computing in BI is likely to be determined by how the likes of IBM-Cognos, SAP-BusinessObjects and Oracle-Hyperion embrace the area.

Having said this, one of the interesting things in computing is how easy it is to misjudge the future and perhaps there is a potential titan of cloud BI currently gestating in the garage so beloved of IT mythology.

On data quality, I have never explicitly split out this component of a BI effort. Rather data quality has been an integral part of what we have done. Again I have taken a four-pillared approach:

- improve how the data is entered;

- make sure your interfaces aren’t the problem;

- check how the data has been entered / interfaced;

- and don’t suppress bad data in your BI.

The first pillar consists of improved validation in front-end systems – something that can be facilitated by the BI team providing master data to them – and also a focus on staff training, stressing the importance to the organisation of accurately recording certain data fields.

The second pillar is more to do with the general IT Architecture and how this relates to the Information Architecture. Again, master data has a role to play, but so does ensuring that the IT culture is one in which different teams collaborate well and are concerned about what happens to data when it leaves “their” systems.

The third pillar is the familiar world of after-the-fact data quality reports and auditing, something that is necessary, but not sufficient, for success in data quality.

Finally there is what I think can be one of the most important pillars; ensuring that the BI system takes a warts-and-all approach to data. This means that bad data is highlighted, rather than being suppressed. In turn this creates pressure for the problems to be addressed where they arise and creates a virtuous circle.
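To make the “warts-and-all” idea a little more concrete, here is a minimal Python sketch. It is an illustration only, not Peter’s actual implementation: the record layout (`Record`), the master-data set `VALID_REGION_CODES` and the helper functions are all hypothetical. The point it shows is that records failing a check are tagged with their issues and kept, so they are counted and visible in reports, rather than being silently filtered out.

```python
from dataclasses import dataclass, field
from typing import List, Optional

# Hypothetical master data; in practice the BI team might share such lists
# with front-end systems (pillar one) and reuse them for checks (pillar three).
VALID_REGION_CODES = {"UK", "FR", "DE", "BR", "MX"}


@dataclass
class Record:
    # Hypothetical record layout, assumed purely for illustration.
    policy_id: str
    region: str
    premium: Optional[float]
    issues: List[str] = field(default_factory=list)


def check_record(rec: Record) -> Record:
    """Attach data-quality issues to the record instead of discarding it."""
    if rec.region not in VALID_REGION_CODES:
        rec.issues.append(f"unknown region code {rec.region!r}")
    if rec.premium is None:
        rec.issues.append("missing premium")
    elif rec.premium < 0:
        rec.issues.append("negative premium")
    return rec


def quality_summary(records):
    """Check every record and count flagged vs clean ones; nothing is dropped."""
    checked = [check_record(r) for r in records]
    flagged = [r for r in checked if r.issues]
    summary = {
        "total": len(checked),
        "flagged": len(flagged),
        "clean": len(checked) - len(flagged),
    }
    return summary, checked
```

Because every record survives into `checked`, a report built on it can show the flagged rows alongside their issues, which is what puts pressure on the source systems to fix problems where they arise.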

For those who might be interested in this area, I expand on it more in Using BI to drive improvements in data quality [URL http://peterthomas.wordpress.com/2009/02/11/using-bi-to-drive-improvements-in-data-quality/ ].

Ajay- You are well known in England’s rock climbing and bouldering community. A fun question: what is the similarity between a BI implementation or project and climbing a big boulder?

Peter – I would have to offer two minor clarifications.

First, it is probably my partner who is better known in climbing circles, via her blog [URL http://77jenn.blogspot.com/ ] and the articles and reviews that she has written for the climbing press, though I guess I can take credit for most of the photos and videos.

Second, particularly given the fact that a lot of our climbing takes place in Wales, I should acknowledge the broader UK climbing community and also mention our most mountainous region of Scotland.

Despite what many inhabitants of Sheffield might think to the contrary, there is life beyond Stanage Edge [URL http://en.wikipedia.org/wiki/Stanage ].

I have written about the determination and perseverance that are required to get to the top of a boulder, or indeed to the top of any type of climb [URL http://peterthomas.wordpress.com/2009/03/31/perseverance/ ].

I think those same qualities are necessary for any lengthy, complex project. I am a firm believer that the true benefits of BI are only realised when it leads to cultural transformation. Certainly the discipline of change management has many parallels with rock climbing. You need a positive attitude and a strong belief in your ultimate success, despite the inevitable setbacks. If one approach doesn’t yield fruit then you need to either fine-tune or try something radically different.

I suppose a final similarity is the feeling that you get having completed a climb, particularly if it is at the limit of your ability and has taken a long time to achieve. This is one of both elation and deep satisfaction, but is quickly displaced by a desire to find the next challenge.

This is something that I have certainly experienced in business life and I think the feelings will be familiar to many readers.

Biography-

Peter Thomas has led all-pervasive Business Intelligence and Cultural Transformation projects serving the needs of 500+ users in multiple business units and service departments across 13 European and 5 Latin American countries. He has also developed Business Intelligence strategies for operations spanning four continents. His BI work has won two industry awards: “Best Enterprise BI Implementation” from Cognos in 2006 and “Best use of IT in Insurance” from Financial Sector Technology in 2005. Peter speaks about success factors in both Business Intelligence and the associated Change Management at seminars across both Europe and North America, and writes about these areas and many other aspects of business, technology and change on his blog [URL http://peterthomas.wordpress.com ].

But with a dedicated effort, you should expect order-of-magnitude improvements and, as a direct result, an enormous boost in your ability to manage risk, steer a course through the crisis, and get back on the growth curve.

Jim Harris is the Blogger-in-Chief here at Obsessive-Compulsive Data Quality (OCDQ), which is an independent blog offering a vendor-neutral perspective on data quality.